Training Neural Networks: Activation Functions, Backpropagation, and TensorFlow Implementation

Explore how neural networks are trained in TensorFlow using gradient descent and backpropagation. Understand activation functions, softmax, and multiclass classification techniques.

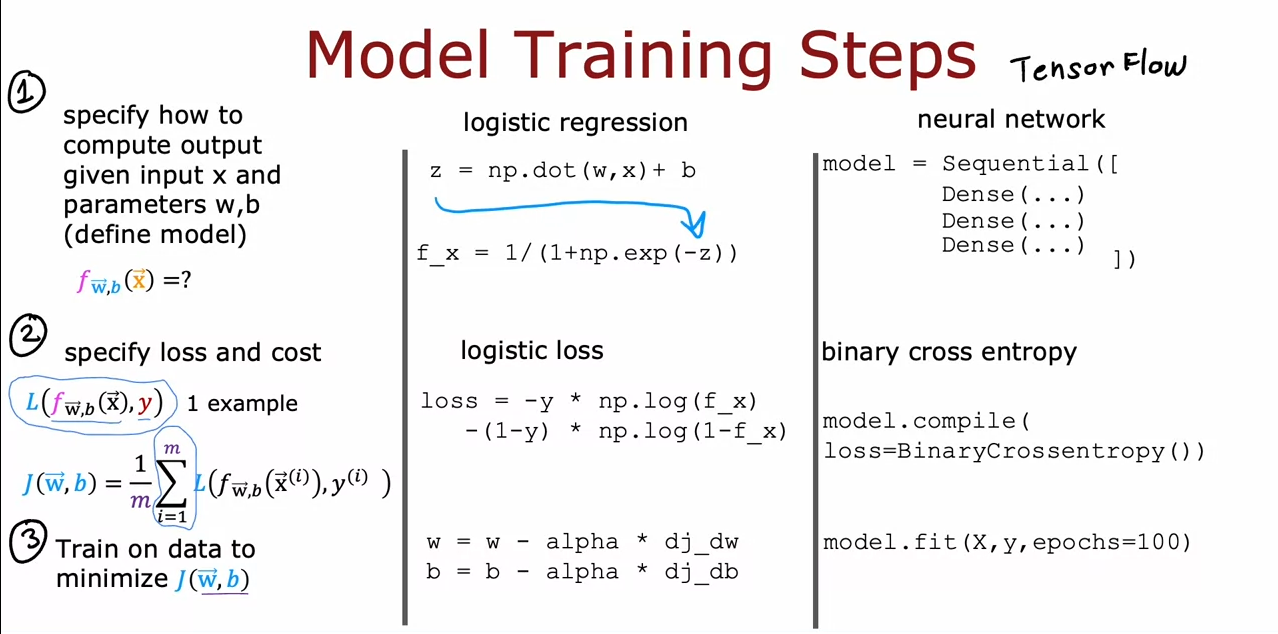

2. Neural network training

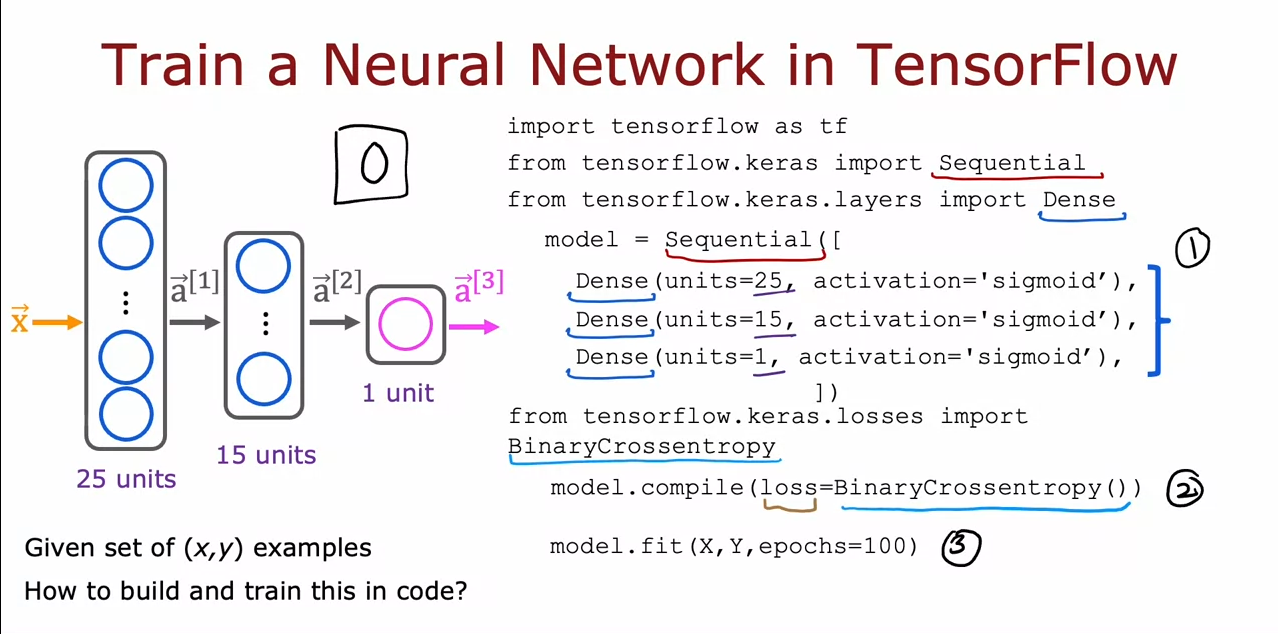

TensorFlow Implementation

Train a Neural Network in TensorFlow

Training Details

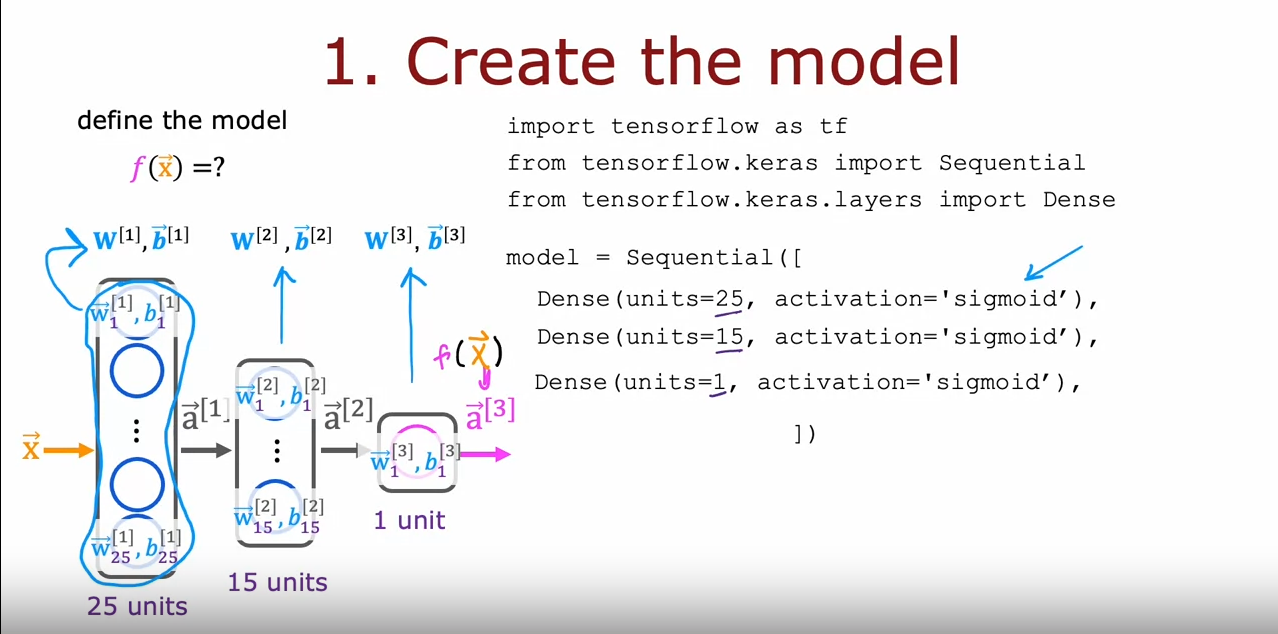

1. Create the model

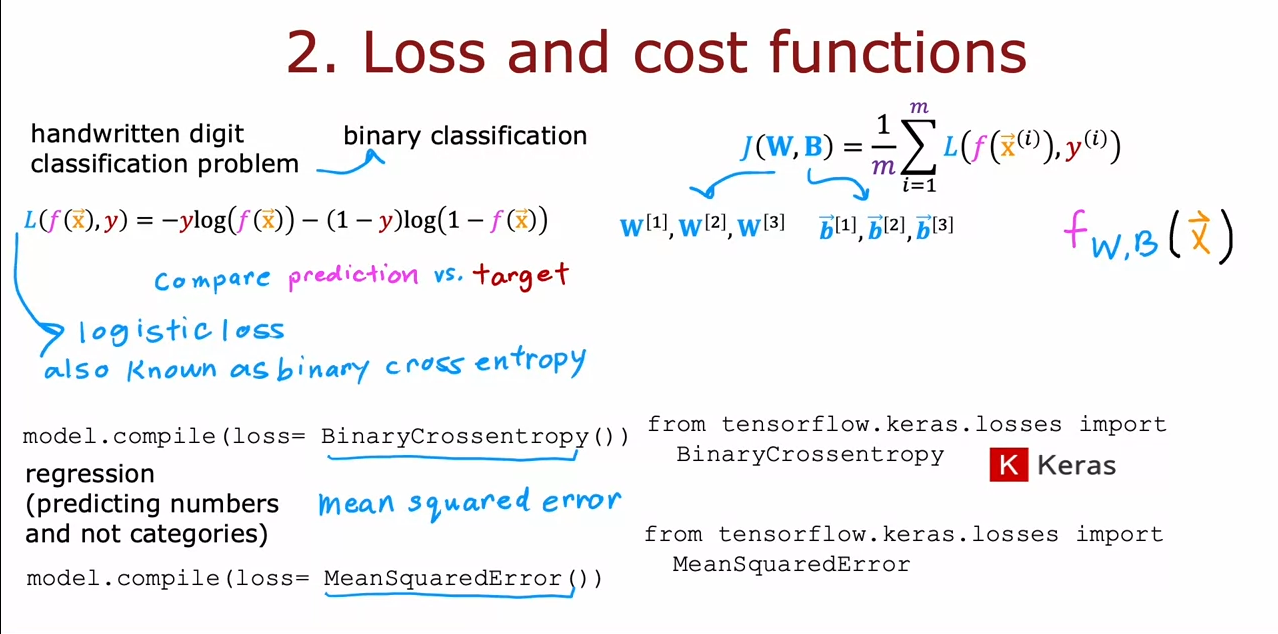

2. Loss and cost functions

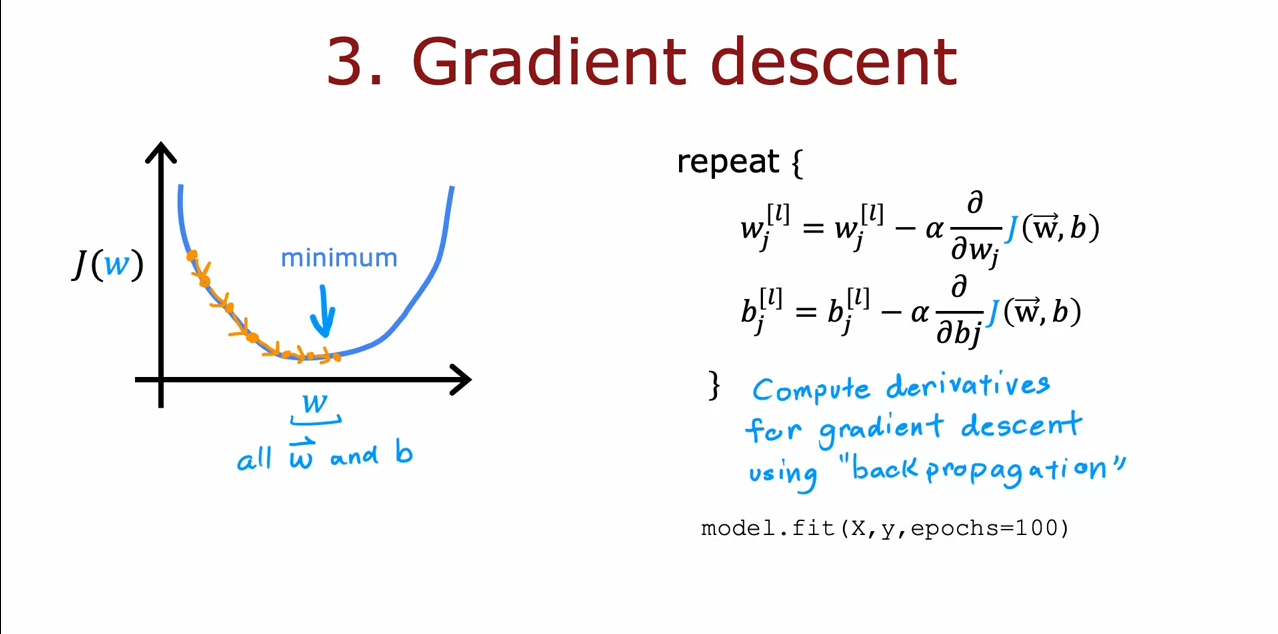

3. Gradient descent

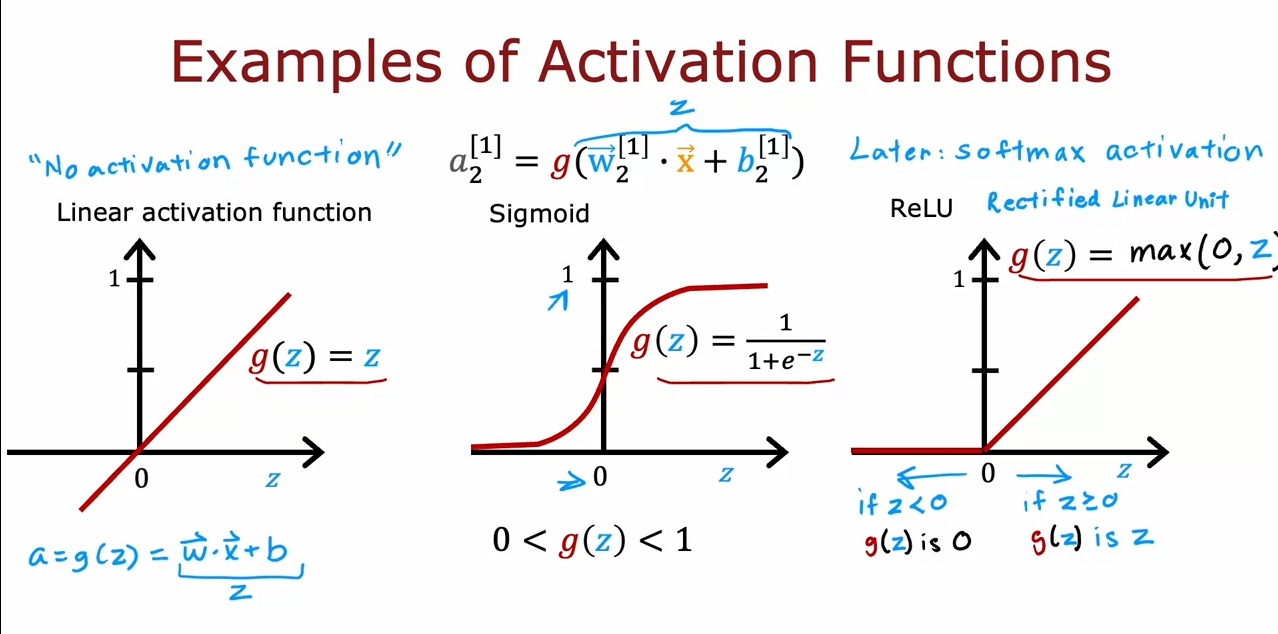
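
The three steps above (specify the model, specify the loss and cost, minimize the cost) can be sketched without TensorFlow for a single logistic unit. This is a minimal pure-Python illustration, not the TensorFlow implementation; the toy data, the learning rate `alpha`, and the iteration count are assumptions chosen for the example. In TensorFlow the same steps correspond roughly to building a `tf.keras.Sequential` model, calling `model.compile(loss=tf.keras.losses.BinaryCrossentropy())`, and calling `model.fit(X, Y, epochs=...)`.

```python
import math

# Step 1: specify the model f(x) = sigmoid(w*x + b) for one logistic unit.
def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(w * x + b)

# Step 2: specify the loss (binary cross-entropy) and the cost (average loss).
def cost(w, b, xs, ys):
    total = 0.0
    for x, y in zip(xs, ys):
        f = predict(w, b, x)
        total += -y * math.log(f) - (1 - y) * math.log(1 - f)
    return total / len(xs)

# Step 3: minimize the cost with gradient descent.
def train(xs, ys, alpha=0.1, iterations=1000):
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        # For sigmoid + binary cross-entropy, dJ/dz simplifies to (f - y).
        dw = sum((predict(w, b, x) - y) * x for x, y in zip(xs, ys)) / m
        db = sum(predict(w, b, x) - y for x, y in zip(xs, ys)) / m
        w -= alpha * dw
        b -= alpha * db
    return w, b

# Hypothetical toy data: label 1 for positive x, label 0 for negative x.
xs = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = train(xs, ys)
print("cost before:", cost(0.0, 0.0, xs, ys), "cost after:", cost(w, b, xs, ys))
```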

Alternatives to the sigmoid activation

ReLU (Rectified Linear Unit)

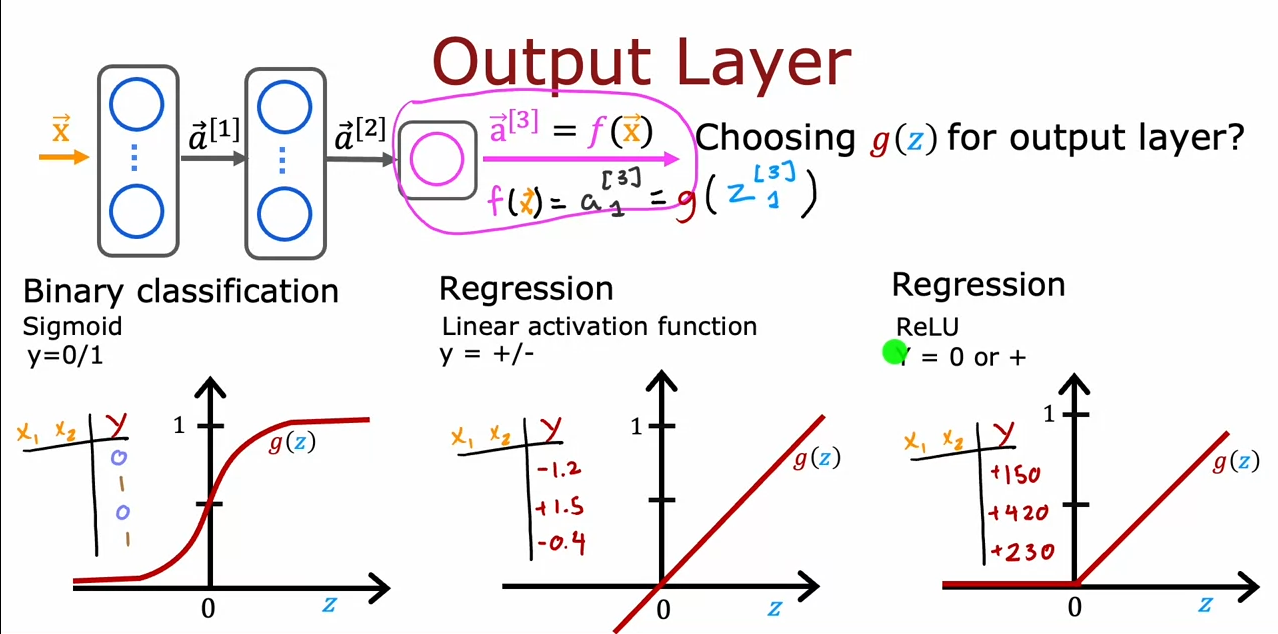

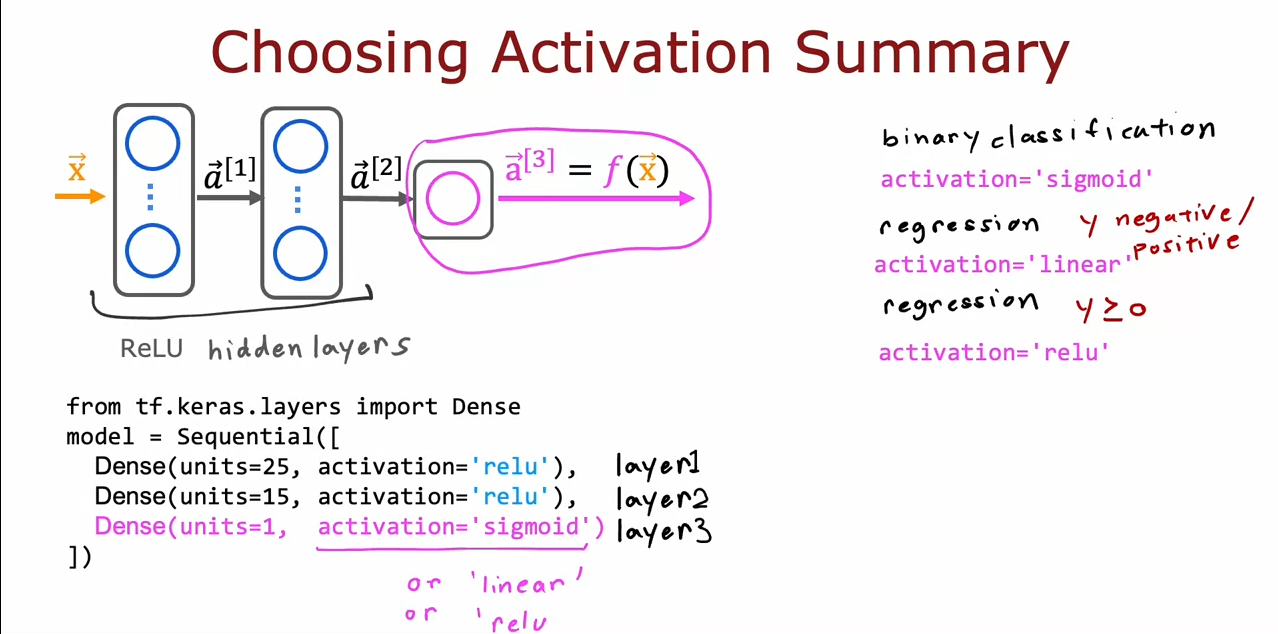
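
The activations discussed so far each fit in a line or two. A minimal sketch in plain Python (the function names are illustrative):

```python
import math

def sigmoid(z):
    """Squashes z into (0, 1); a natural fit for binary outputs."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: g(z) = max(0, z)."""
    return max(0.0, z)

def linear(z):
    """Linear (identity) activation: g(z) = z."""
    return z

for z in (-2.0, 0.0, 3.0):
    print(z, sigmoid(z), relu(z), linear(z))
```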

Choosing activation functions

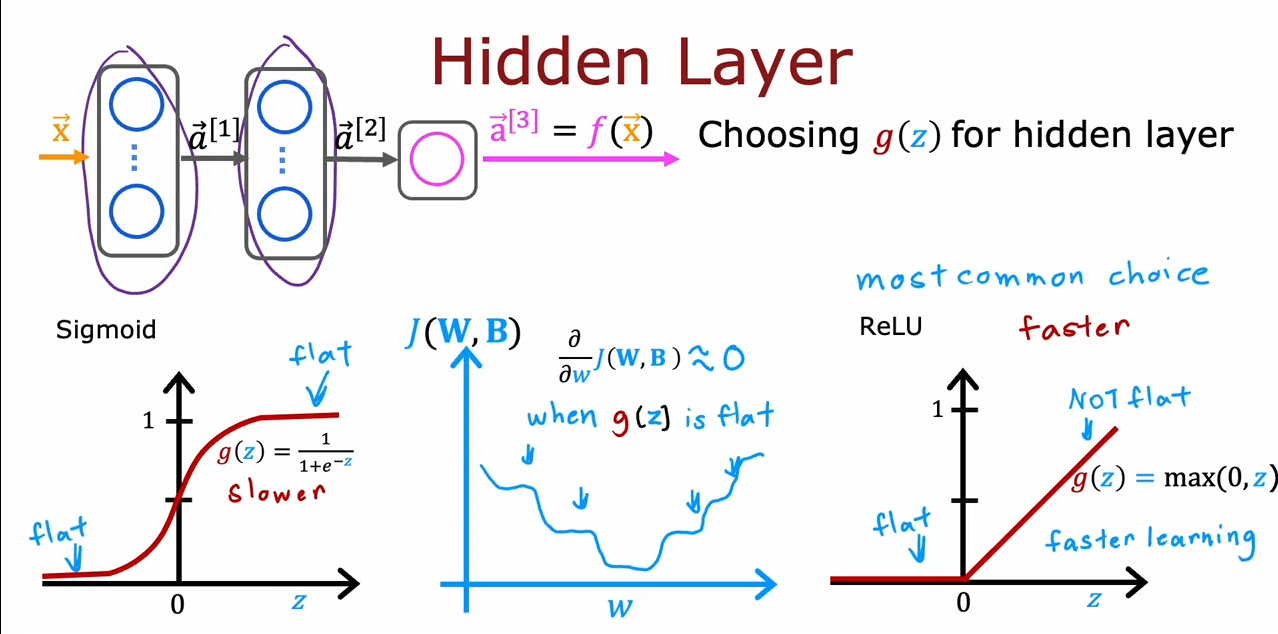

Hidden Layer

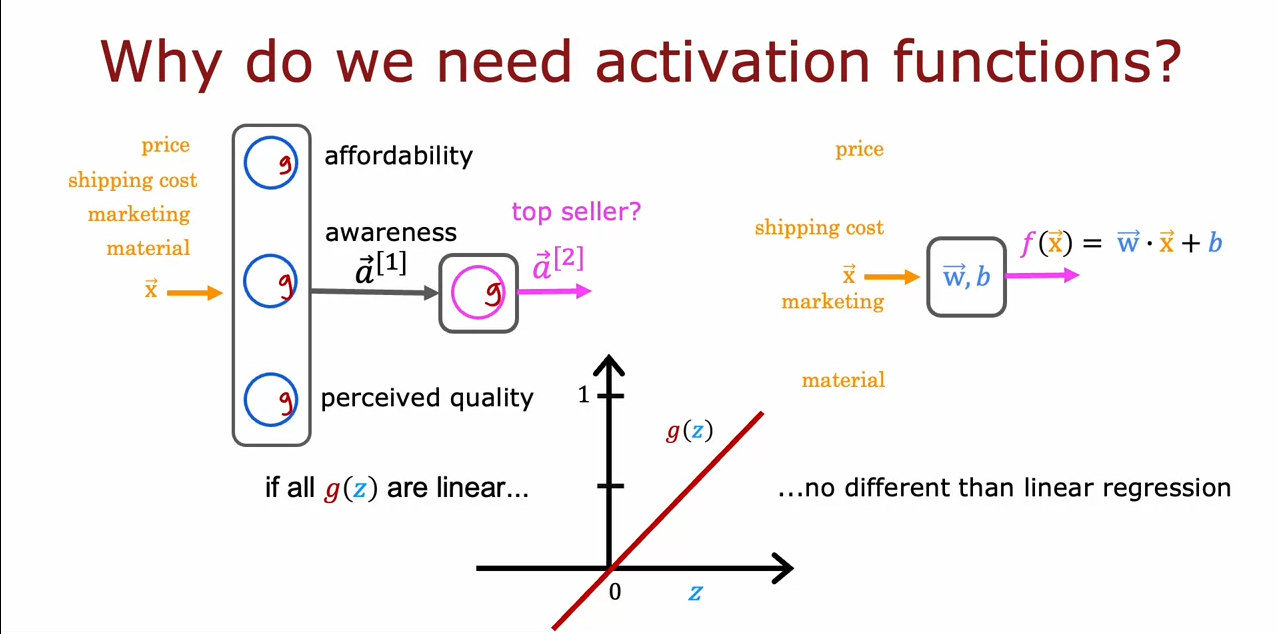

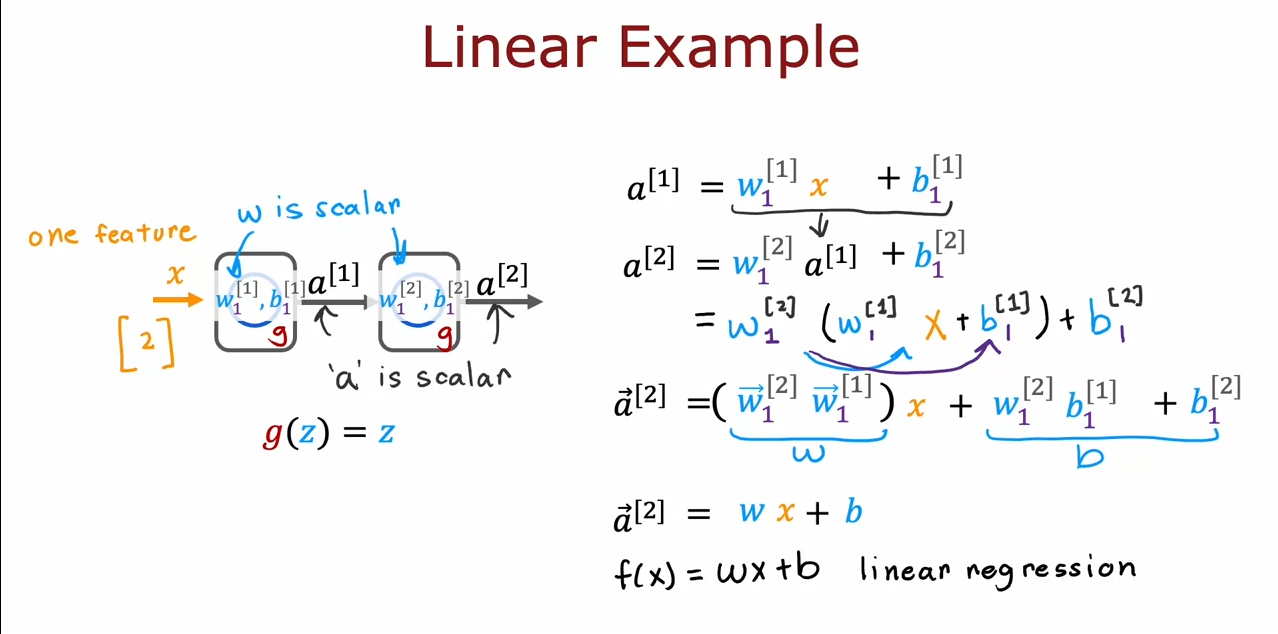

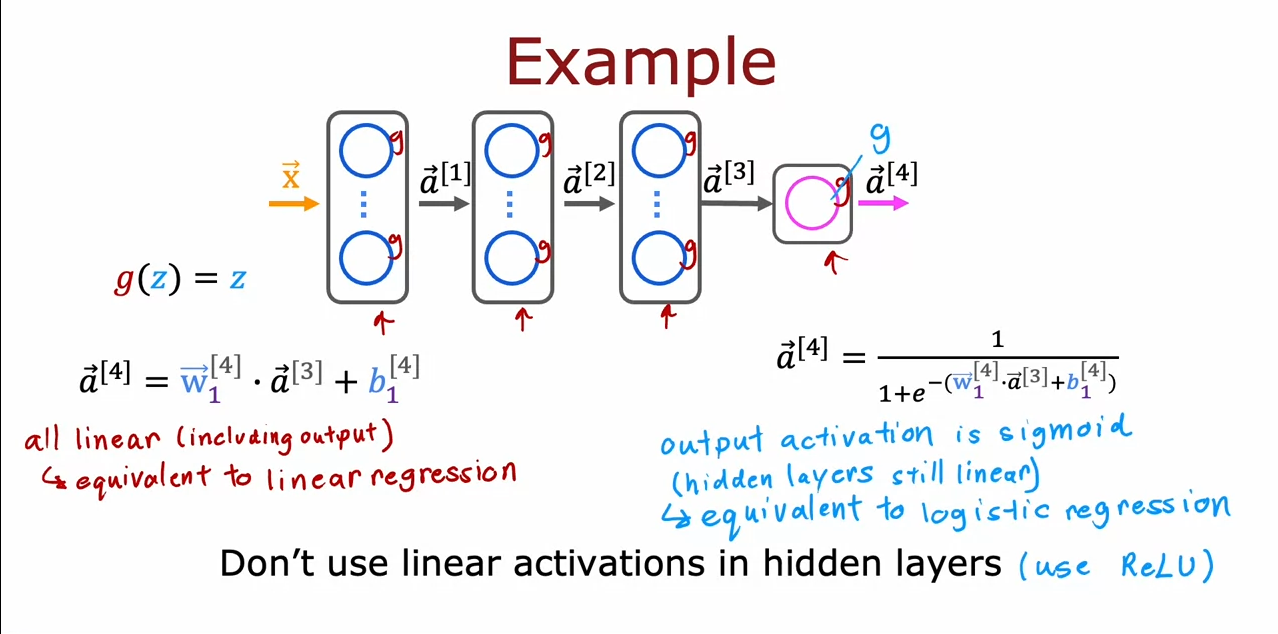
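
A common rule of thumb is to choose the output activation from the range of the target (sigmoid for a binary label, linear for a target that can be negative or positive, ReLU for a target that is never negative) and to use ReLU in the hidden layers. The hypothetical helper below only encodes that rule; its strings match names accepted by the Keras `activation=` argument, but the function itself is not part of any library.

```python
# Hypothetical helper encoding a rule of thumb; not a library API.
def choose_output_activation(target_kind):
    rules = {
        "binary": "sigmoid",     # y in {0, 1}: output a probability
        "real": "linear",        # y can be negative or positive
        "nonnegative": "relu",   # y >= 0, e.g. a price
    }
    if target_kind not in rules:
        raise ValueError(f"unknown target kind: {target_kind!r}")
    return rules[target_kind]

# Common default for hidden layers.
HIDDEN_ACTIVATION = "relu"

print(choose_output_activation("binary"), HIDDEN_ACTIVATION)
```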

Why do we need activation functions?

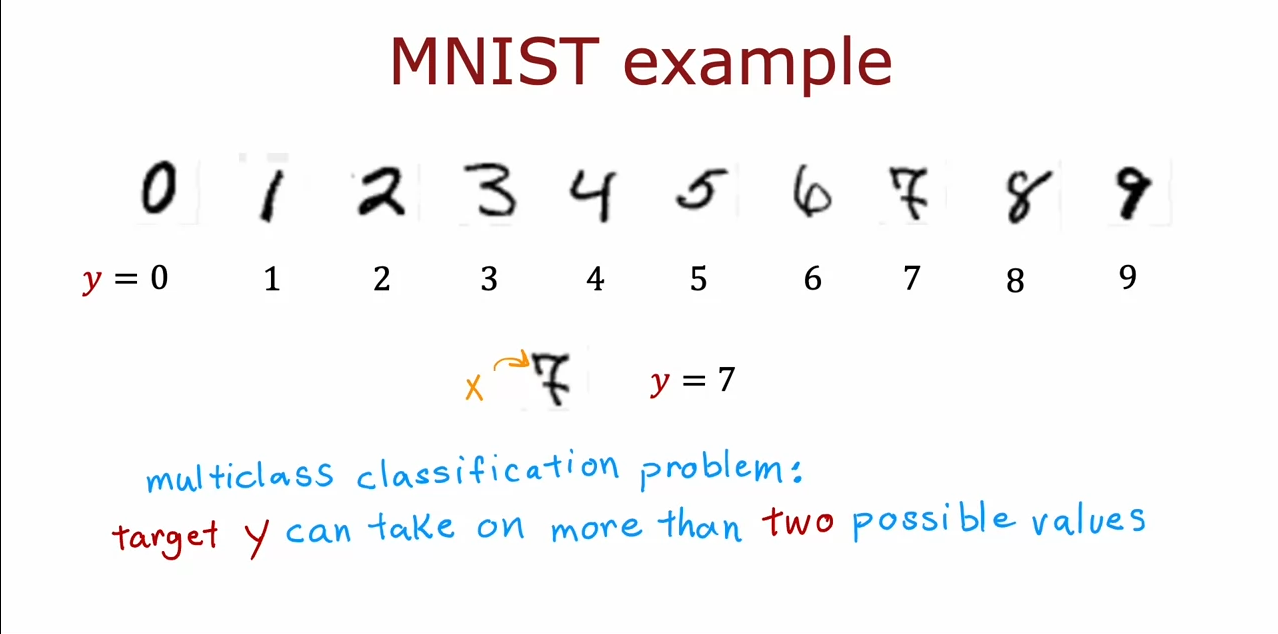

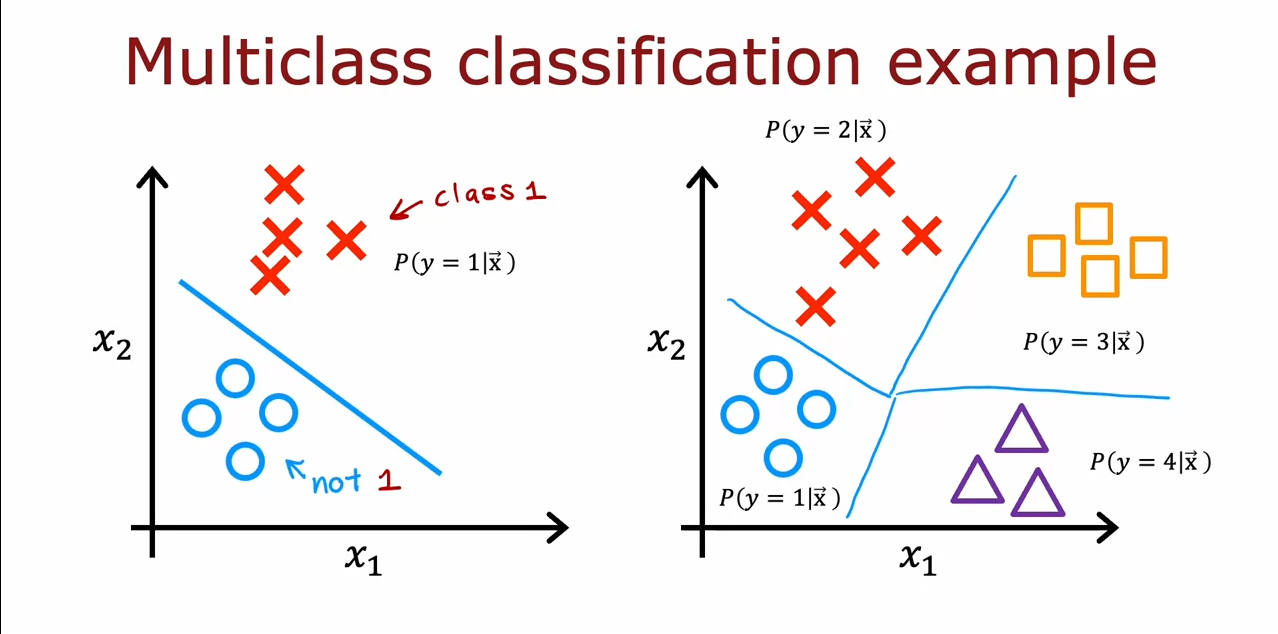
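
If every layer used the linear activation g(z) = z, stacking layers would add nothing, because a composition of linear functions is itself linear. A small scalar sketch (weights chosen arbitrarily for illustration) makes the collapse explicit:

```python
# A "layer" with a single unit and the linear activation g(z) = z.
def layer(w, b, x):
    return w * x + b

w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5

def two_linear_layers(x):
    return layer(w2, b2, layer(w1, b1, x))

# w2*(w1*x + b1) + b2 = (w2*w1)*x + (w2*b1 + b2): still one linear function.
w_eq, b_eq = w2 * w1, w2 * b1 + b2

def one_linear_layer(x):
    return layer(w_eq, b_eq, x)

for x in (-1.0, 0.0, 2.5):
    print(x, two_linear_layers(x), one_linear_layer(x))
```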

Multiclass

The target y can take on more than two possible values.

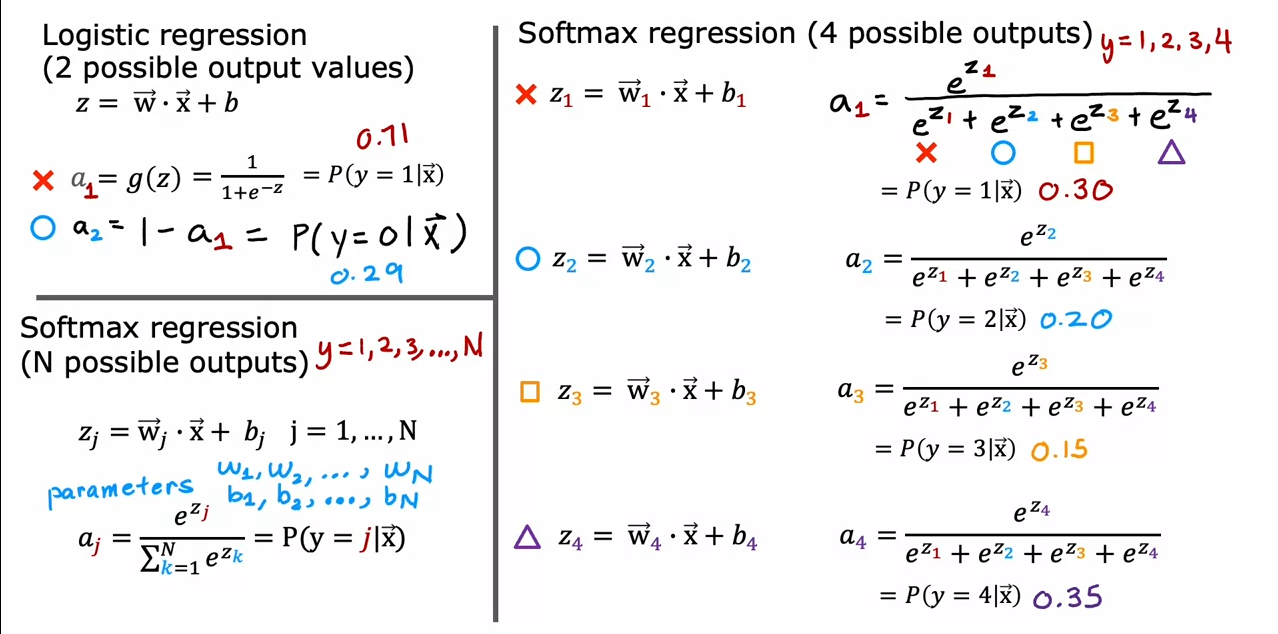

Softmax

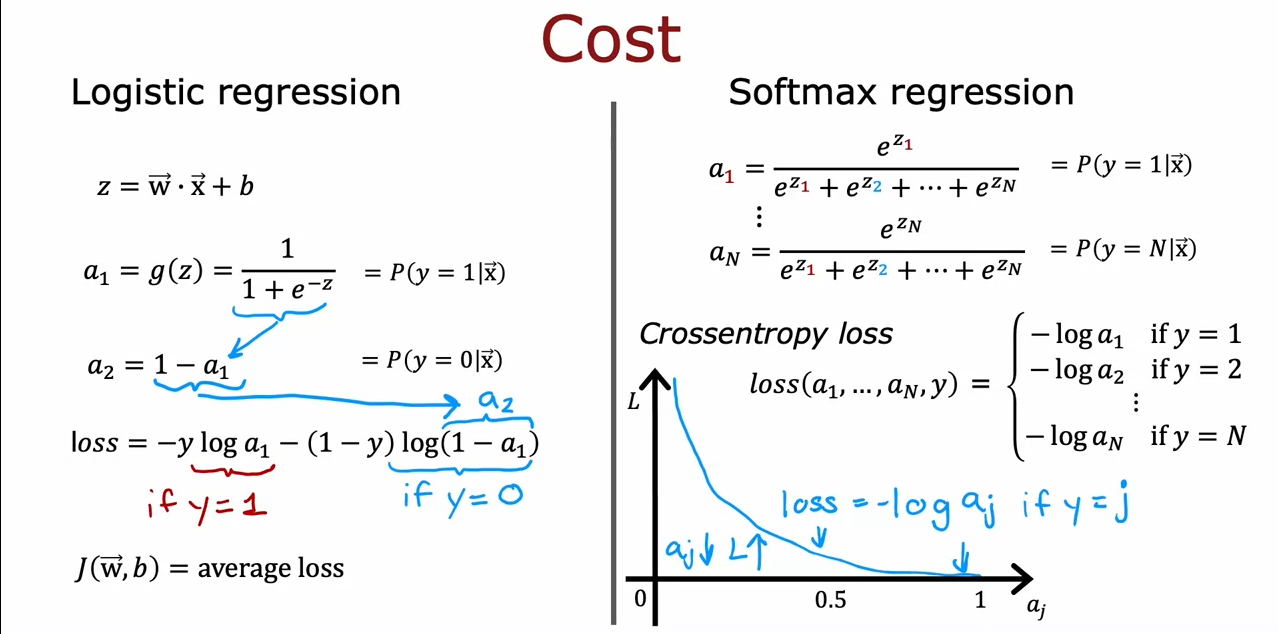
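
Softmax turns N raw scores z_1, ..., z_N into N probabilities that are positive and sum to 1, with a_j = e^{z_j} / sum_k e^{z_k}. A direct, minimal translation that makes no attempt at numerical stability:

```python
import math

def softmax(z):
    """a_j = exp(z_j) / sum_k exp(z_k) for each of the N outputs."""
    exps = [math.exp(zj) for zj in z]
    total = sum(exps)
    return [e / total for e in exps]

a = softmax([1.0, 2.0, 0.5, -1.0])
print(a, sum(a))
```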

Cost

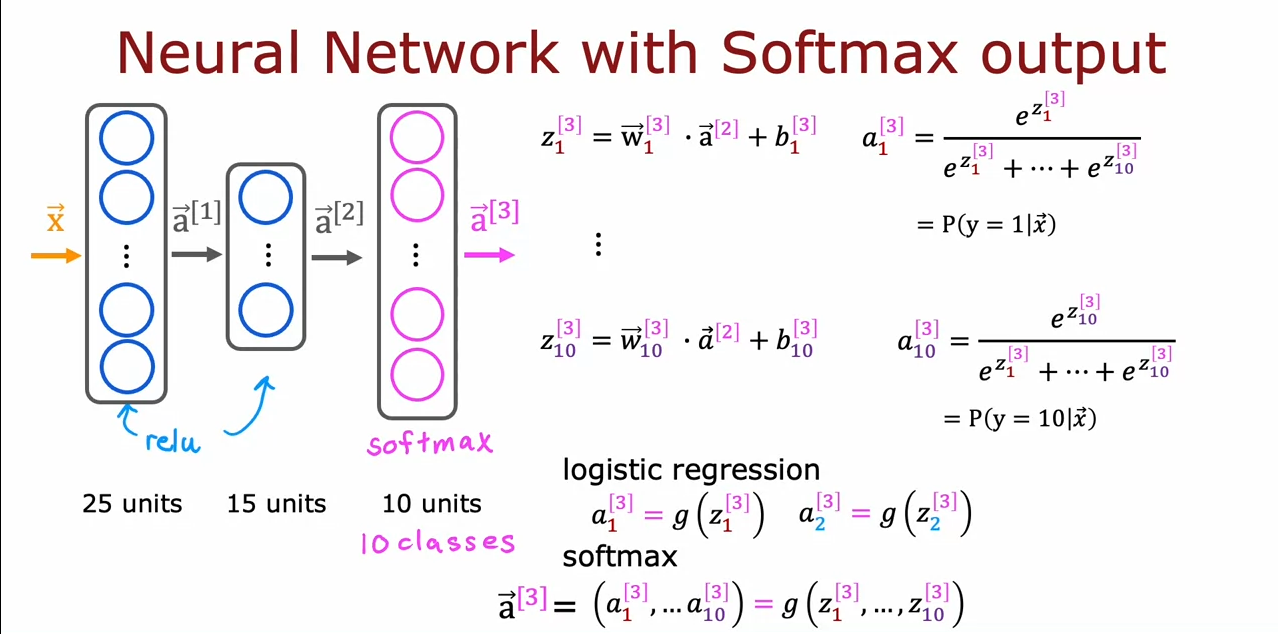

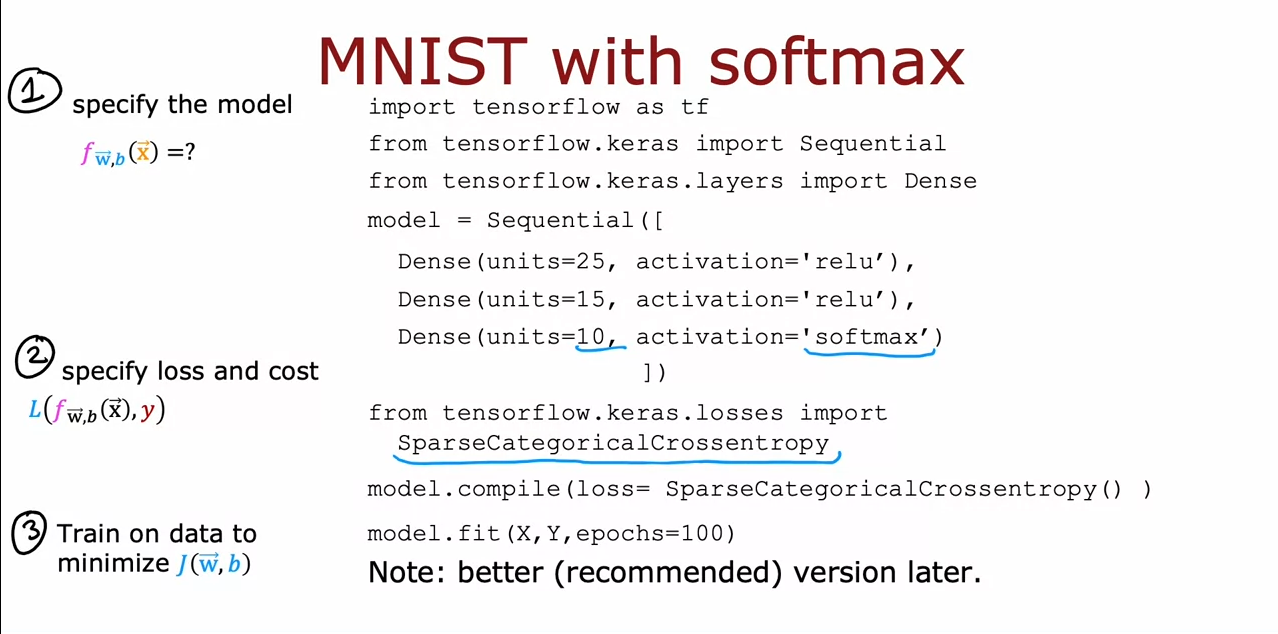
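
The softmax loss keeps only the term for the true class: if y = j, the loss is -log a_j, and the cost averages this loss over the training examples. A minimal sketch, assuming `y` is an integer class index:

```python
import math

def softmax(z):
    exps = [math.exp(zj) for zj in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_loss(z, y):
    """Cross-entropy loss -log(a_y), where y is the index of the true class."""
    a = softmax(z)
    return -math.log(a[y])

def softmax_cost(zs, ys):
    """Average loss over the m examples."""
    return sum(softmax_loss(z, y) for z, y in zip(zs, ys)) / len(ys)

# A confident, correct score vector gives a small loss; a wrong label gives a large one.
print(softmax_loss([5.0, 0.0, 0.0], 0), softmax_loss([5.0, 0.0, 0.0], 1))
```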

Neural network with softmax output

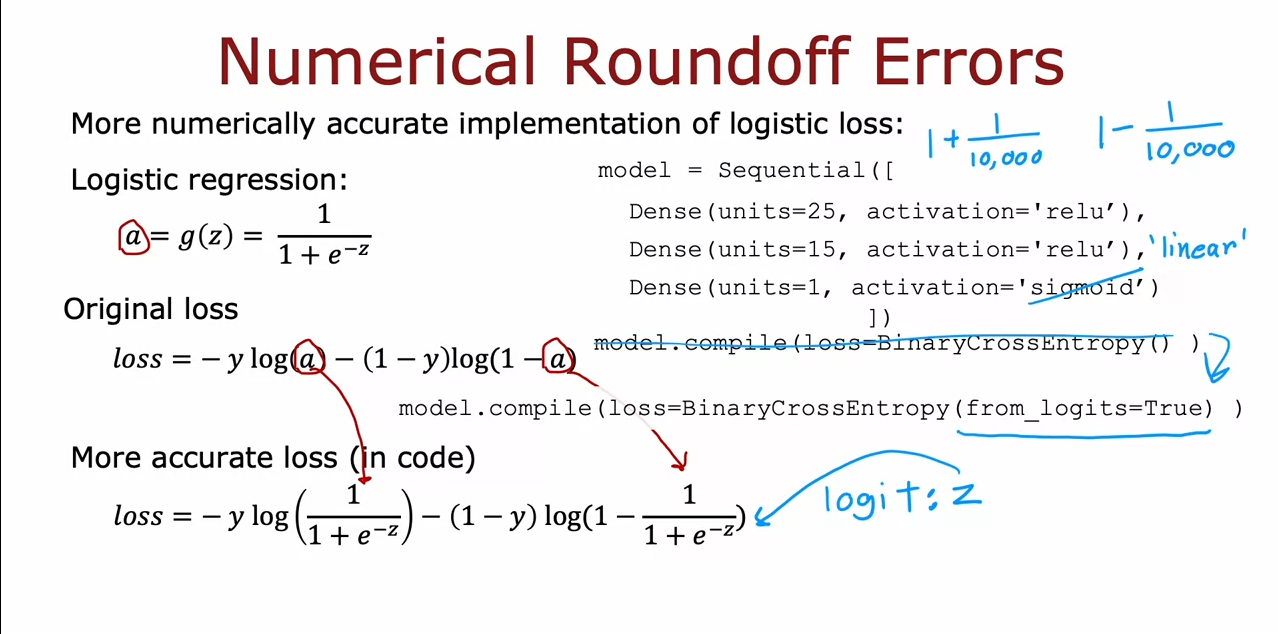

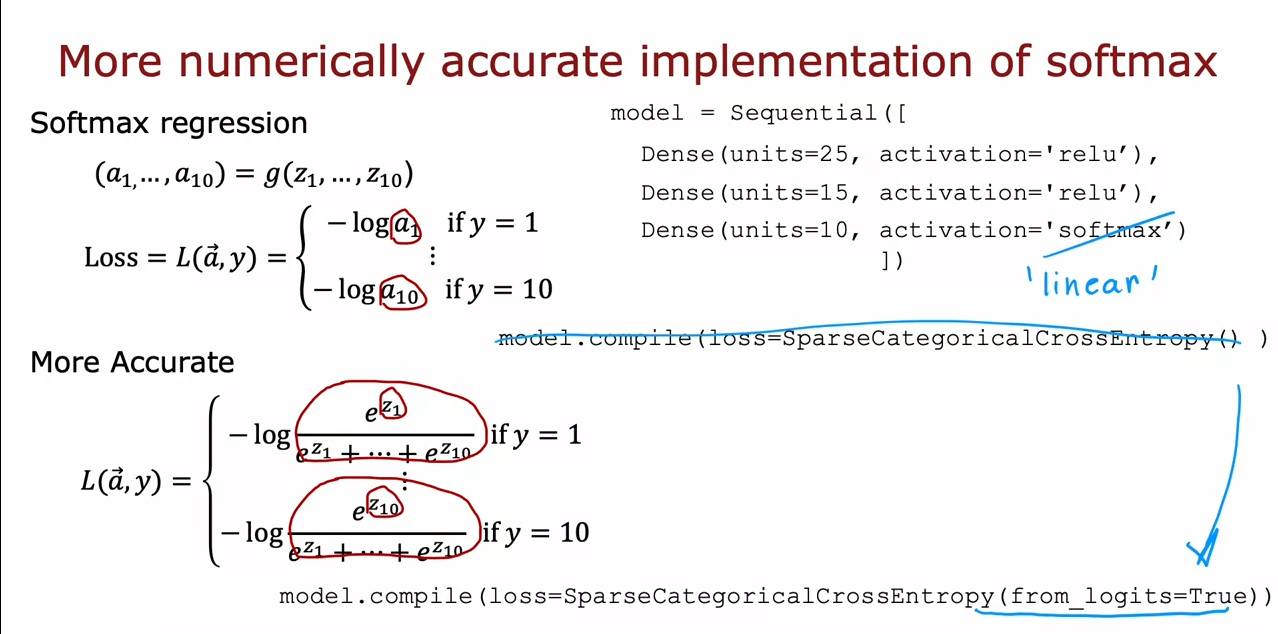

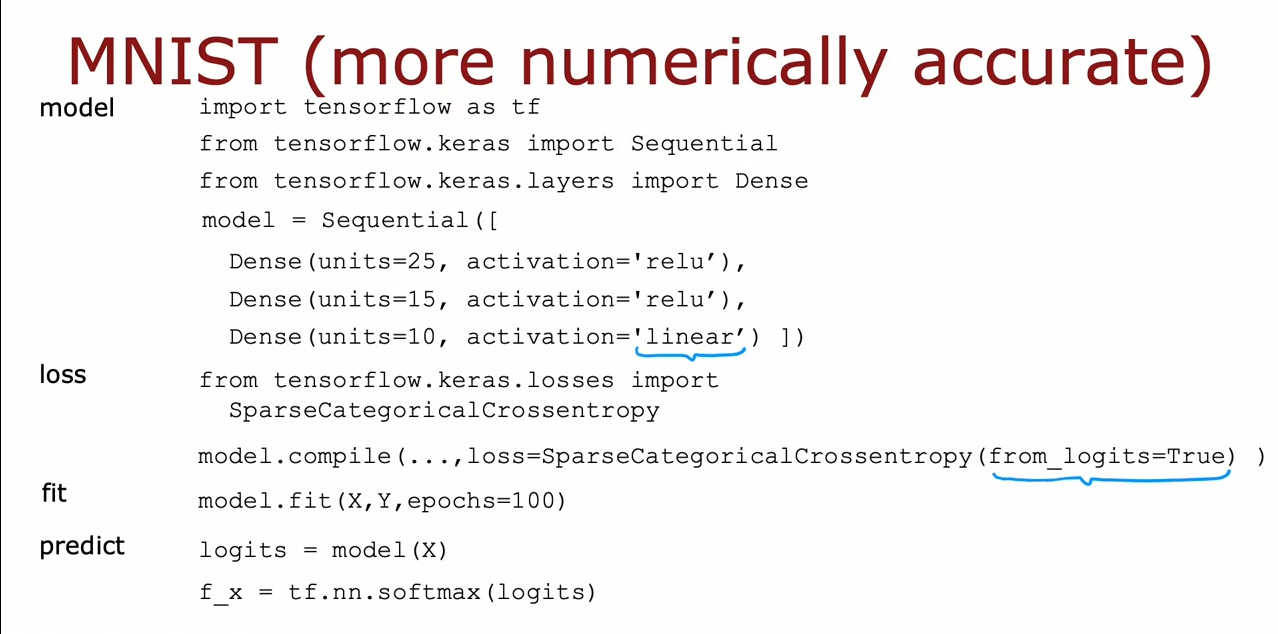

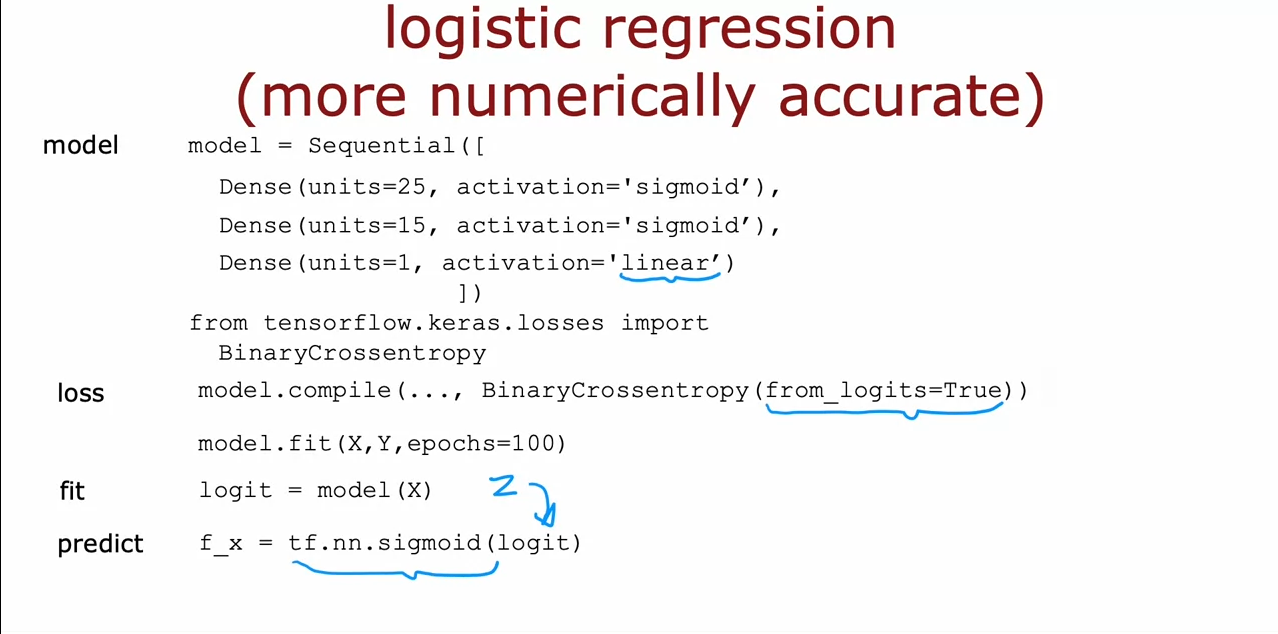
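
A forward pass through a network with a ReLU hidden layer and a softmax output can be sketched with plain lists. The layer sizes and weights below are hypothetical values chosen only so the example runs; in TensorFlow a network like this is typically trained with `tf.keras.losses.SparseCategoricalCrossentropy` when the labels are integers.

```python
import math

def relu(z):
    return max(0.0, z)

def linear(z):
    return z

def dense(W, b, x, g):
    """Dense layer: unit j outputs g(w_j . x + b_j); W holds one weight row per unit."""
    return [g(sum(wi * xi for wi, xi in zip(w_row, x)) + bj) for w_row, bj in zip(W, b)]

def softmax(z):
    exps = [math.exp(zj) for zj in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights: 2 inputs -> 3 ReLU hidden units -> 4 output classes.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b1 = [0.0, 0.1, -0.2]
W2 = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5], [0.5, 0.5, 0.5], [-1.0, 0.2, 0.3]]
b2 = [0.0, 0.0, 0.1, 0.0]

def forward(x):
    a1 = dense(W1, b1, x, relu)
    # Output layer: compute all logits first, because each softmax output
    # depends on every z_j, not only on its own.
    z2 = dense(W2, b2, a1, linear)
    return softmax(z2)

probs = forward([1.0, 2.0])
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted_class)
```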

Improved implementation of softmax

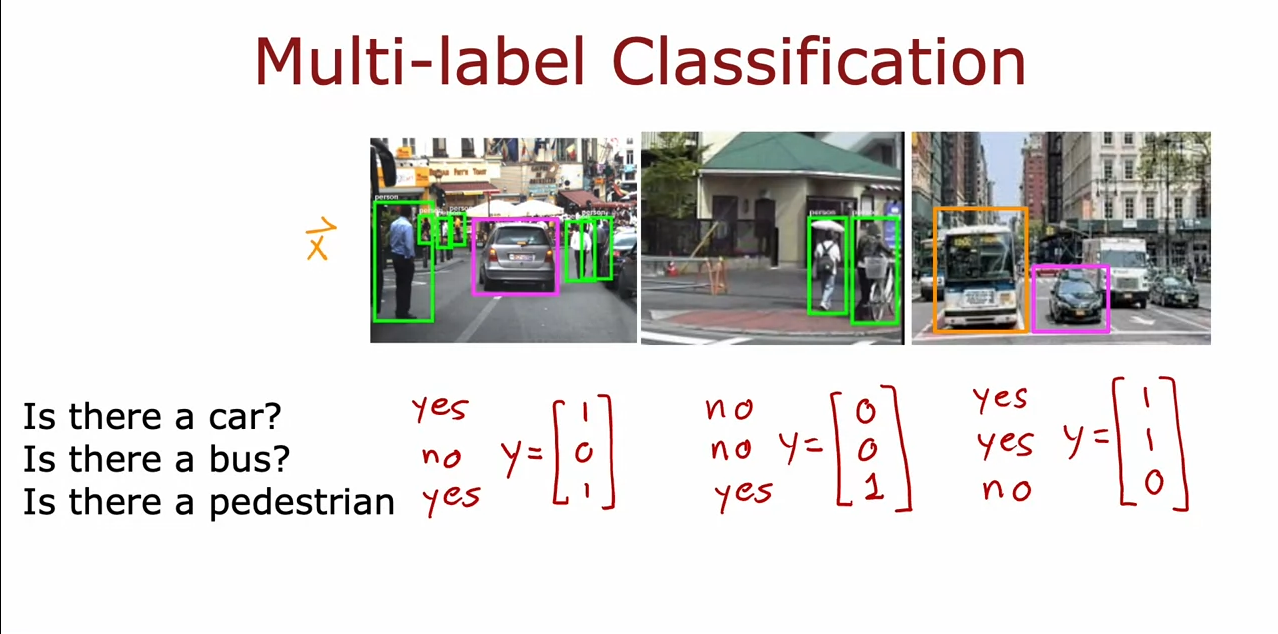

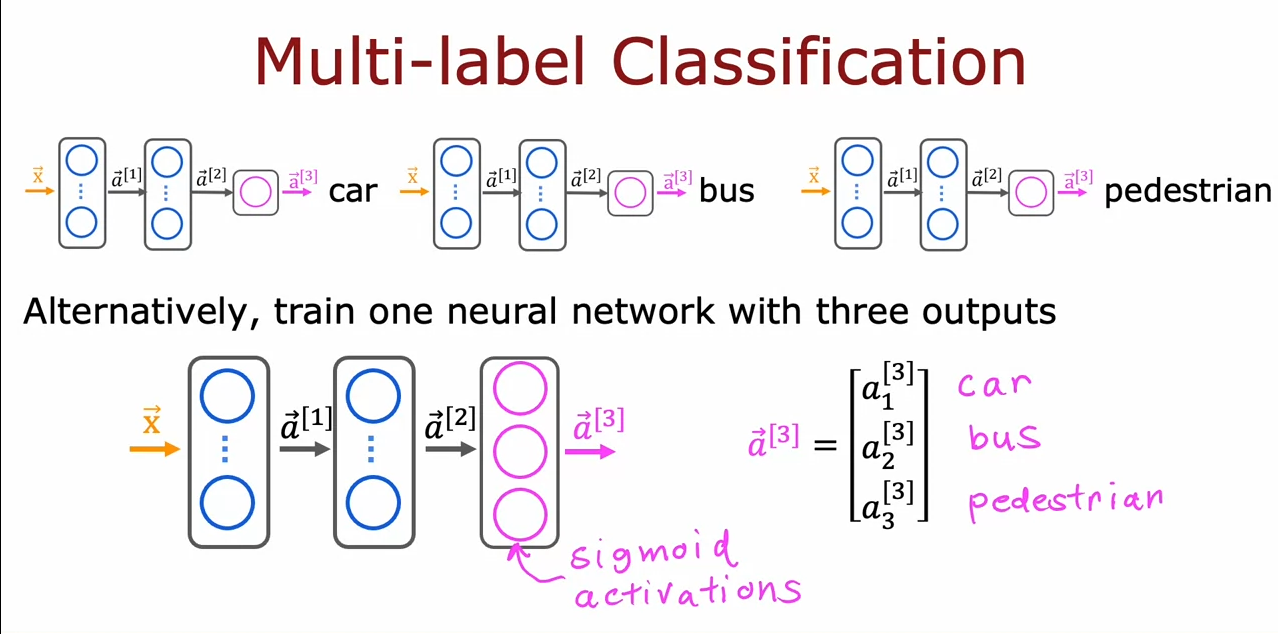
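
Computing softmax first and then taking the log can overflow or lose precision when the logits are large. Rewriting the loss as -log a_y = log(sum_k e^{z_k}) - z_y and subtracting the largest logit before exponentiating avoids this. The same motivation is behind using a linear output layer with `tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)` and applying `tf.nn.softmax` to the logits afterwards when probabilities are needed. The sketch below compares a naive and a stable version in plain Python; it illustrates the technique and is not TensorFlow's internal code.

```python
import math

def naive_loss(z, y):
    """Softmax first, then log: math.exp overflows for large logits."""
    exps = [math.exp(zj) for zj in z]
    return -math.log(exps[y] / sum(exps))

def stable_loss(z, y):
    """-log softmax(z)[y] via log-sum-exp, shifting by the max logit first."""
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(zj - m) for zj in z))
    return log_sum_exp - z[y]

print(naive_loss([1.0, 2.0, 3.0], 0), stable_loss([1.0, 2.0, 3.0], 0))
print(stable_loss([1000.0, 0.0], 1))  # the naive version raises OverflowError here
```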

Classification with multiple outputs

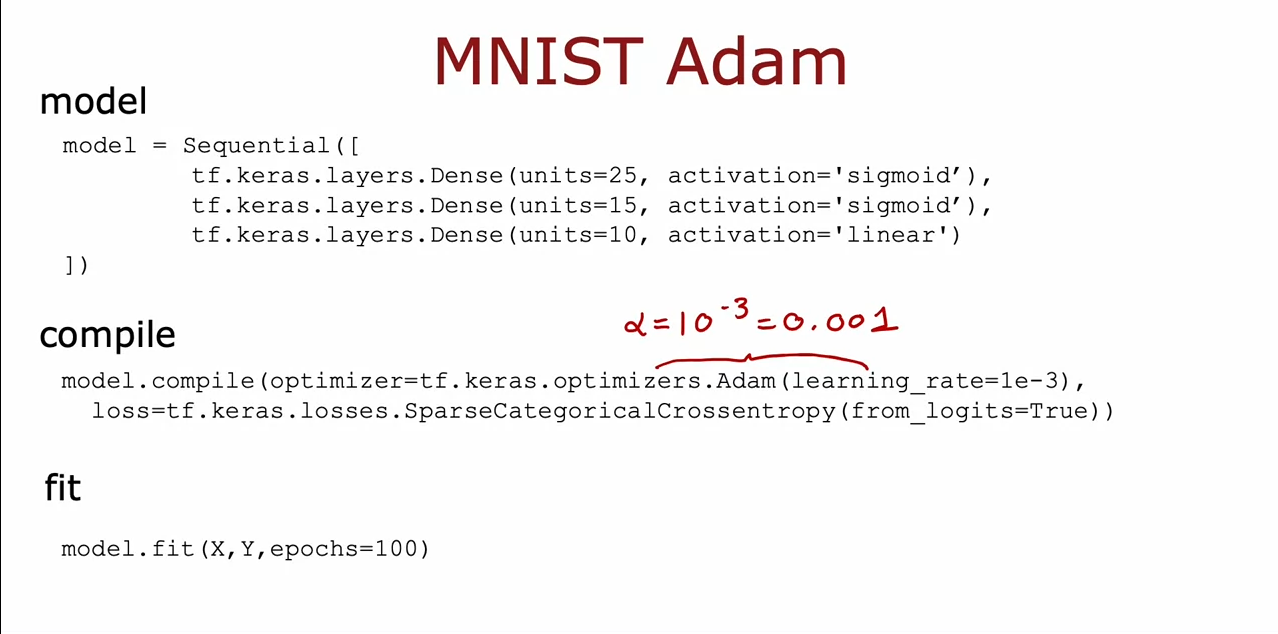
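
In multi-label classification each output answers its own yes/no question (for example, whether an image contains a car, a bus, or a pedestrian), so each output unit gets its own sigmoid and the outputs need not sum to 1. A minimal sketch; the 0.5 threshold is an assumption:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def multilabel_predict(logits, threshold=0.5):
    """Independent yes/no decision for each output unit."""
    probs = [sigmoid(z) for z in logits]
    return [p >= threshold for p in probs]

# e.g. [car?, bus?, pedestrian?] for one image
print(multilabel_predict([2.0, -1.0, 0.5]))
```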

Advanced Optimization

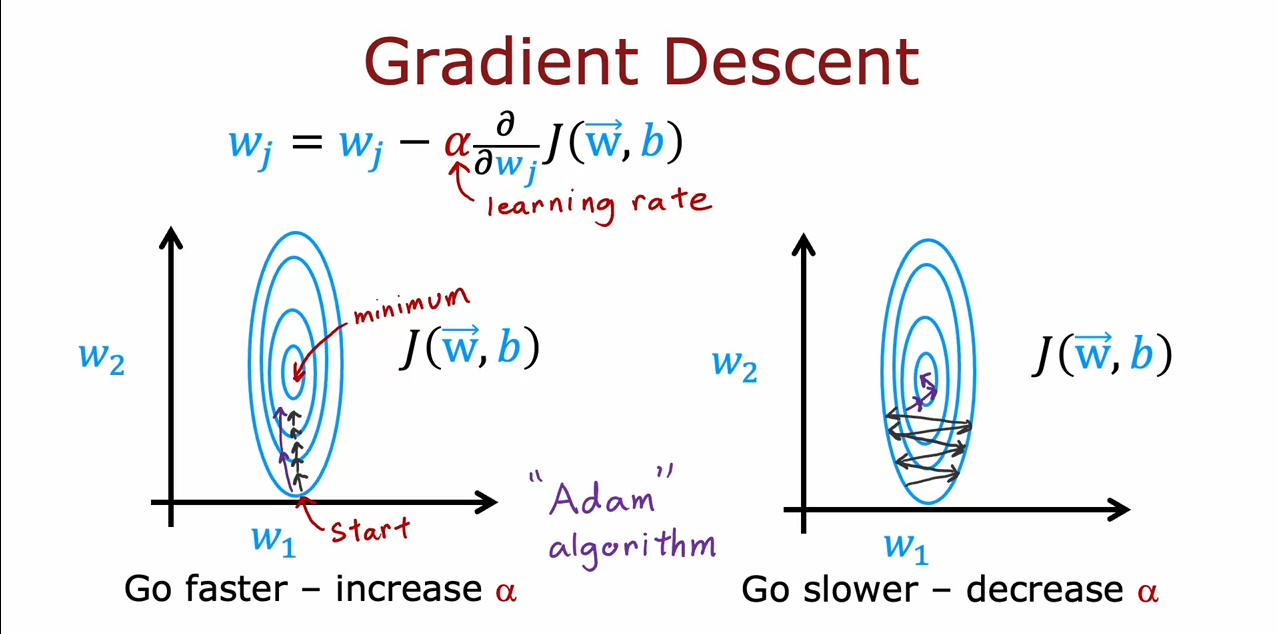

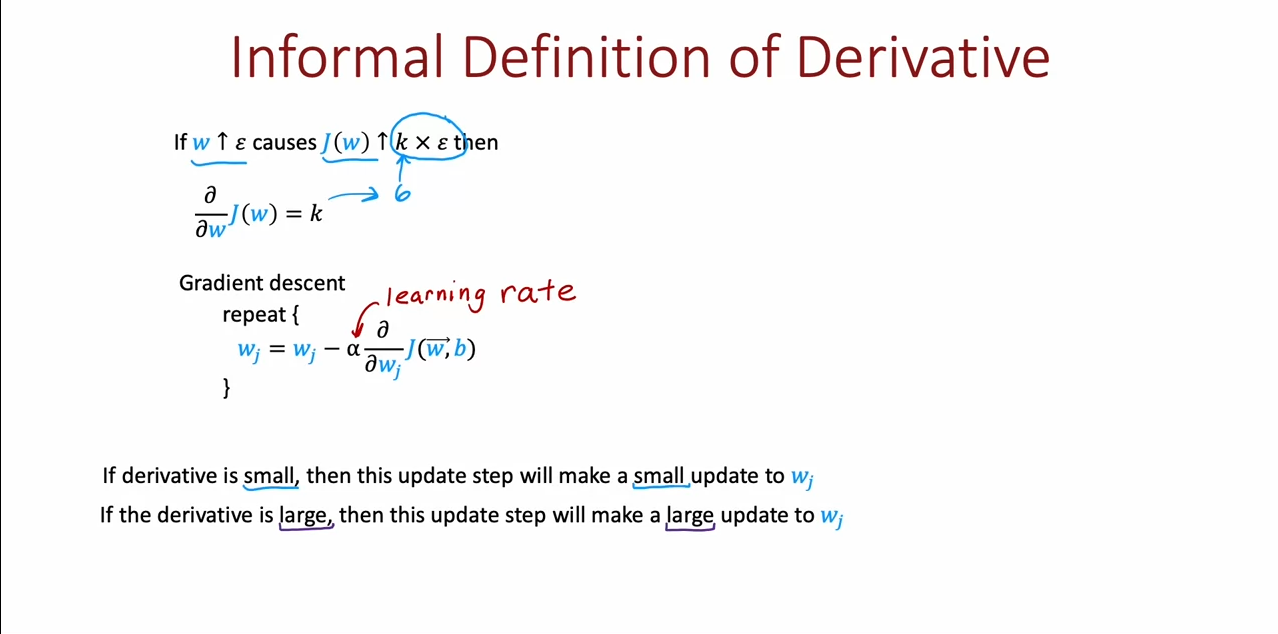

Gradient Descent

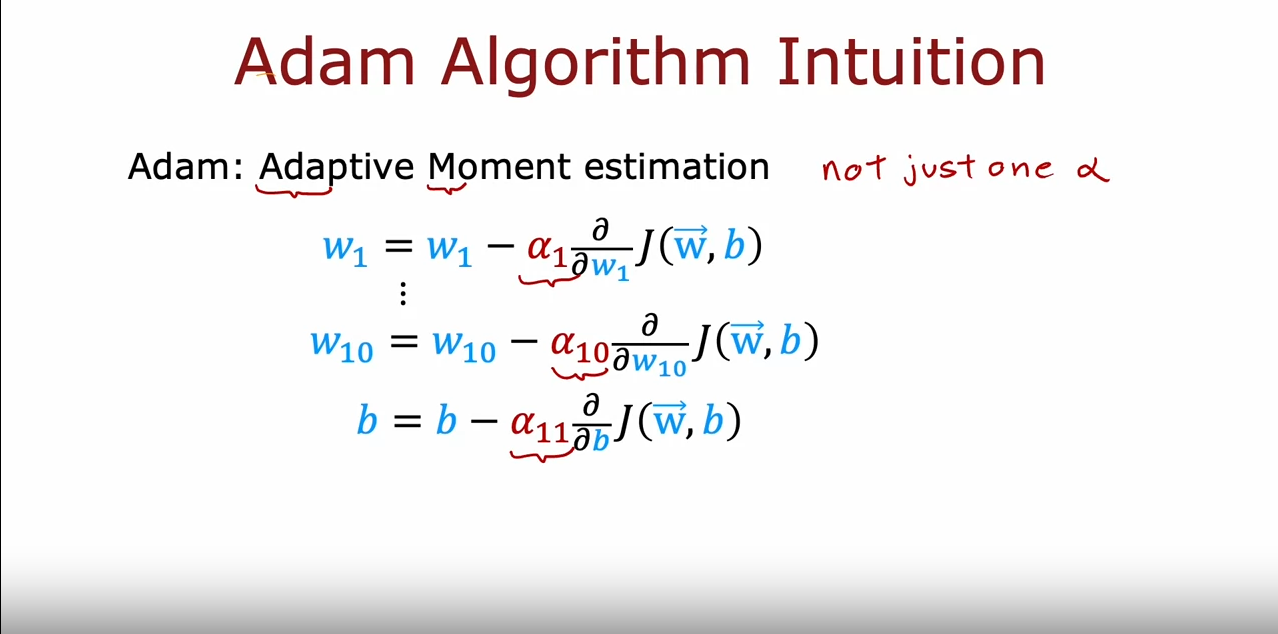

Adam Algorithm Intuition

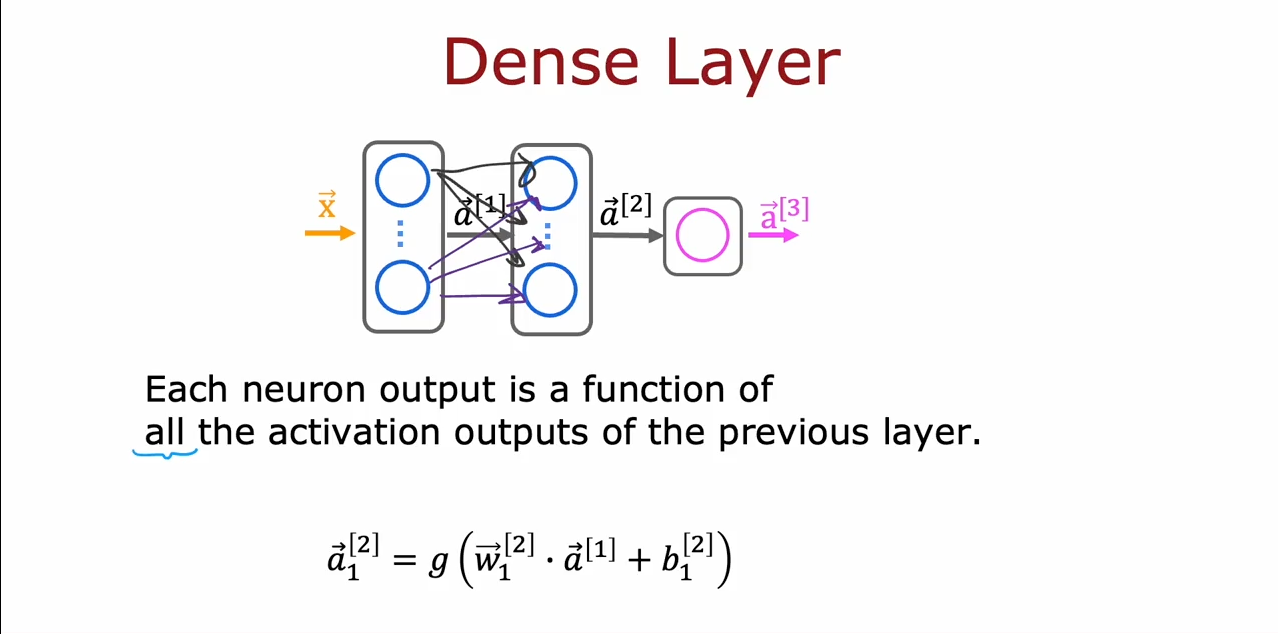

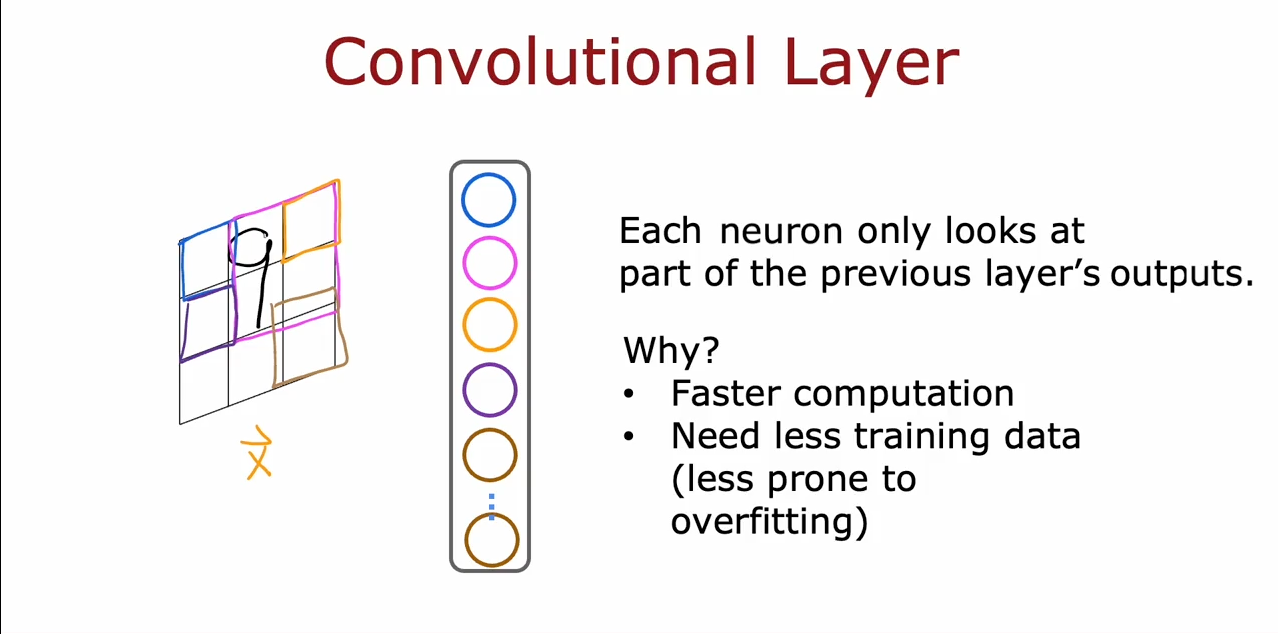

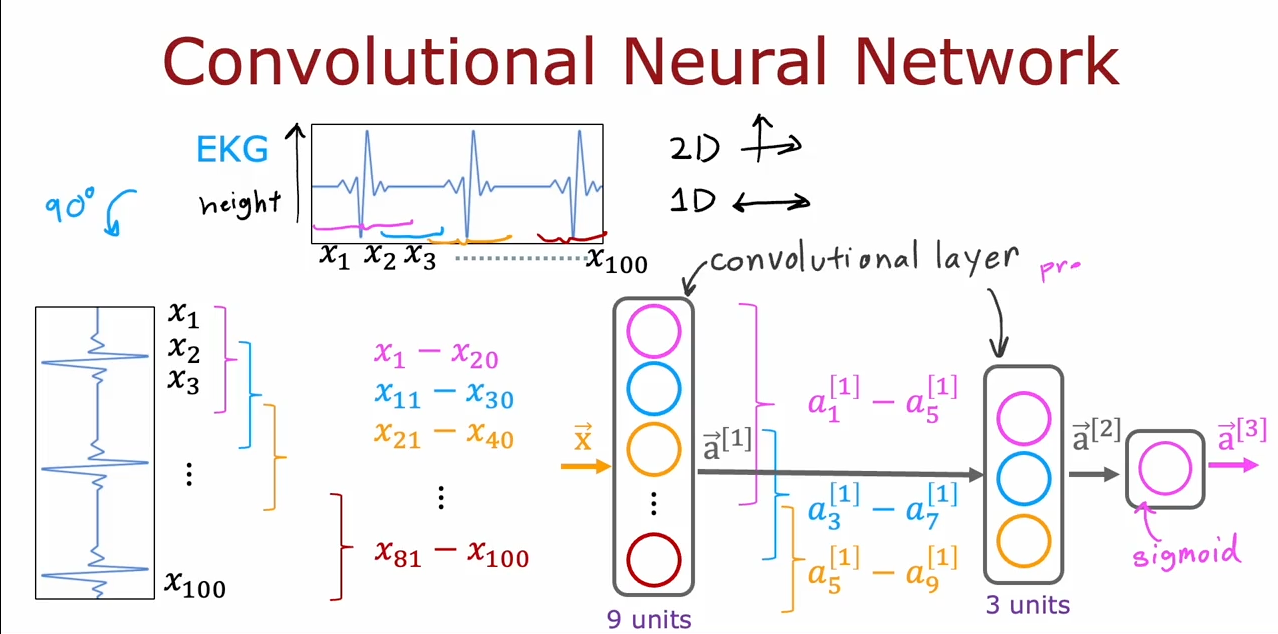
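
Adam keeps a running average of each parameter's gradient and of its squared gradient, so the effective step size adapts per parameter: it stays close to the learning rate while the gradient keeps pointing the same way and shrinks when the gradient oscillates. The sketch below applies the standard published Adam update (with its usual defaults beta1 = 0.9, beta2 = 0.999, eps = 1e-8) to a one-parameter toy cost J(w) = (w - 3)^2; the cost, starting point, learning rate, and step count are assumptions. In TensorFlow the optimizer is chosen with `model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3), ...)`.

```python
import math

def adam_minimize(grad, w0, alpha=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    w = w0
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at 0, so early averages are too small.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        w -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy cost J(w) = (w - 3)^2, so dJ/dw = 2(w - 3) and the minimum is at w = 3.
w_final = adam_minimize(lambda w: 2 * (w - 3), w0=-5.0)
print(w_final)
```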

Additional Layer Types

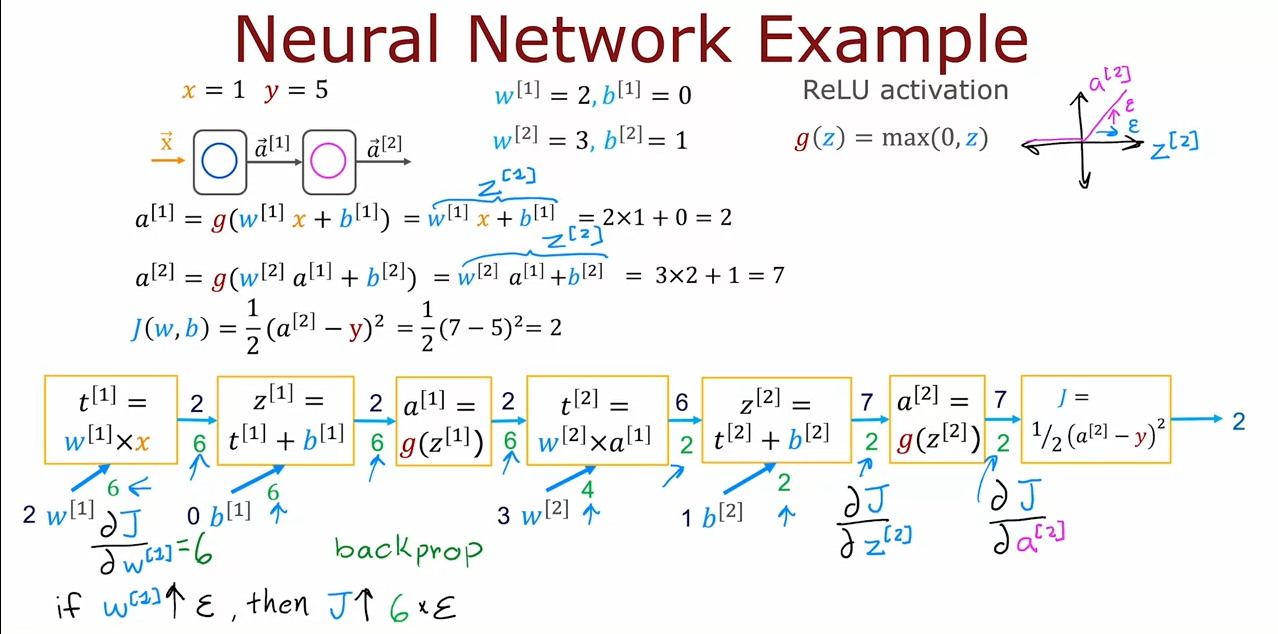

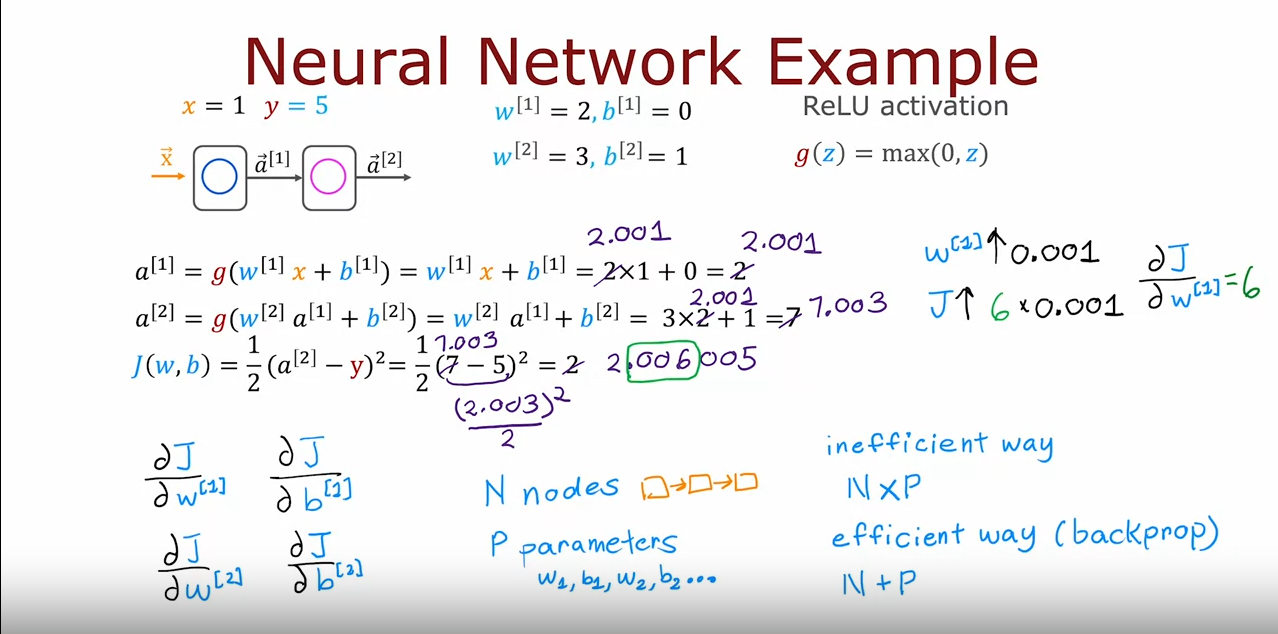

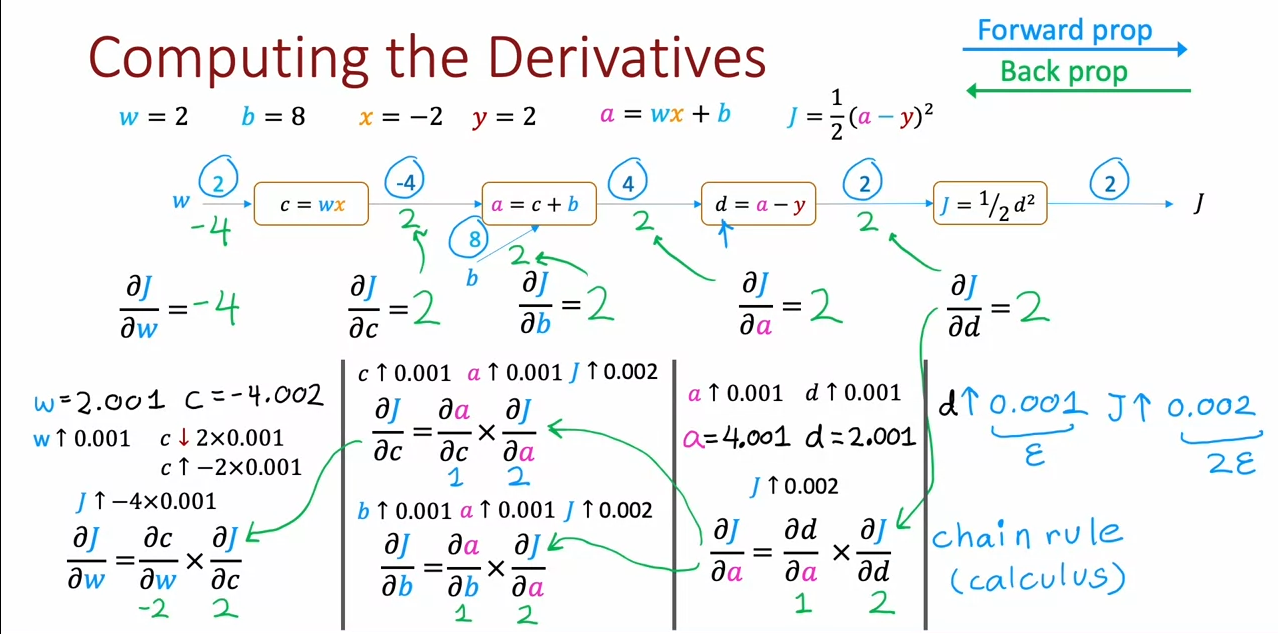

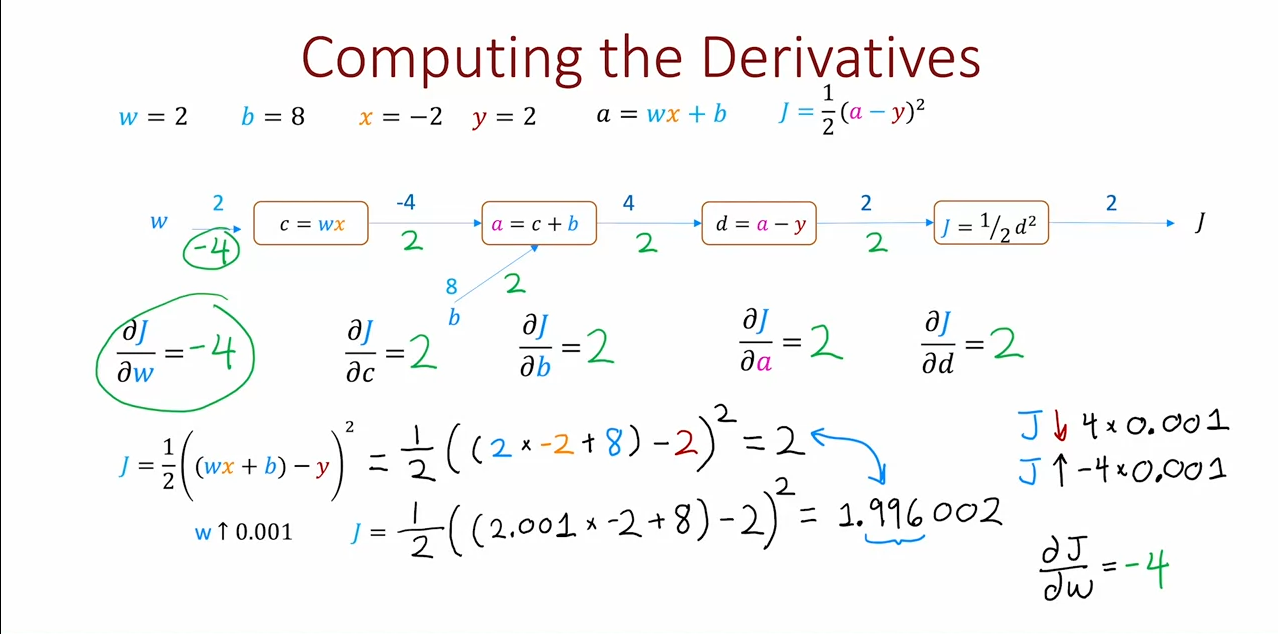

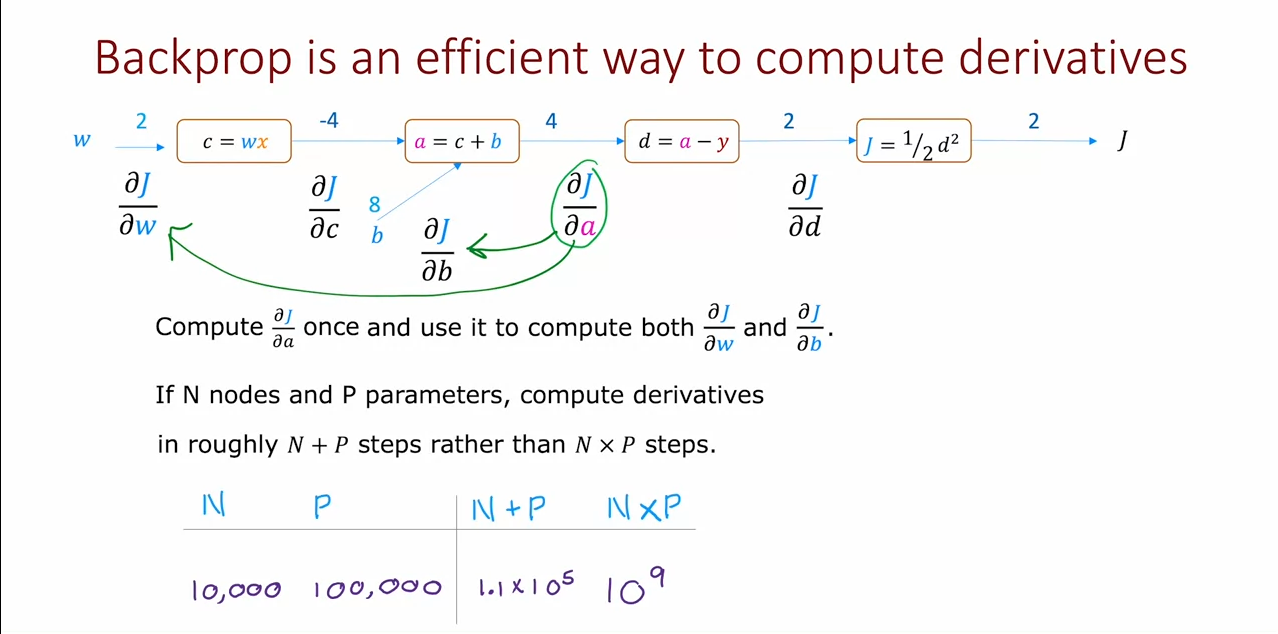
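
Besides dense layers, a convolutional layer lets each unit look only at a window of its input rather than at every input. A minimal 1-D sketch with a single shared kernel, no padding, and ReLU; the signal, kernel, and stride values are hypothetical.

```python
def relu(z):
    return max(0.0, z)

def conv1d(x, kernel, bias, stride=1):
    """Output unit i sees only x[i*stride : i*stride + len(kernel)].

    As in most deep-learning libraries, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    k = len(kernel)
    out = []
    for start in range(0, len(x) - k + 1, stride):
        window = x[start:start + k]
        z = sum(w * xi for w, xi in zip(kernel, window)) + bias
        out.append(relu(z))
    return out

signal = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
# Kernel [1, -1] responds where the signal is decreasing.
features = conv1d(signal, kernel=[1.0, -1.0], bias=0.0)
print(features)  # [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
```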

Backpropagation

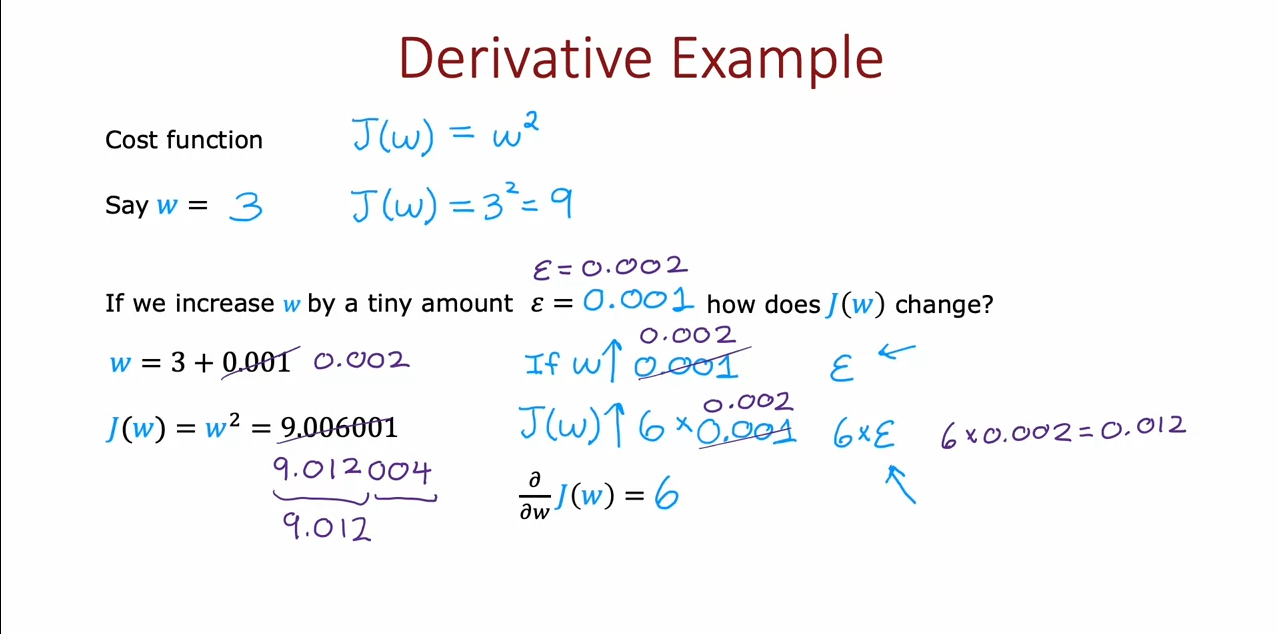

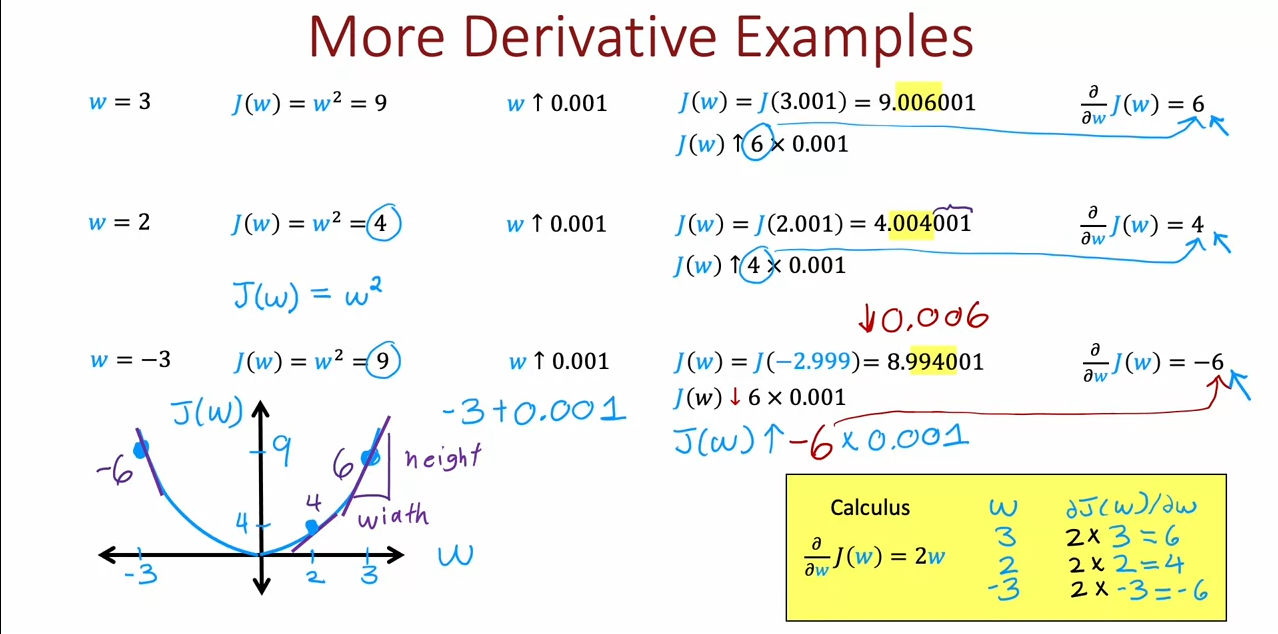

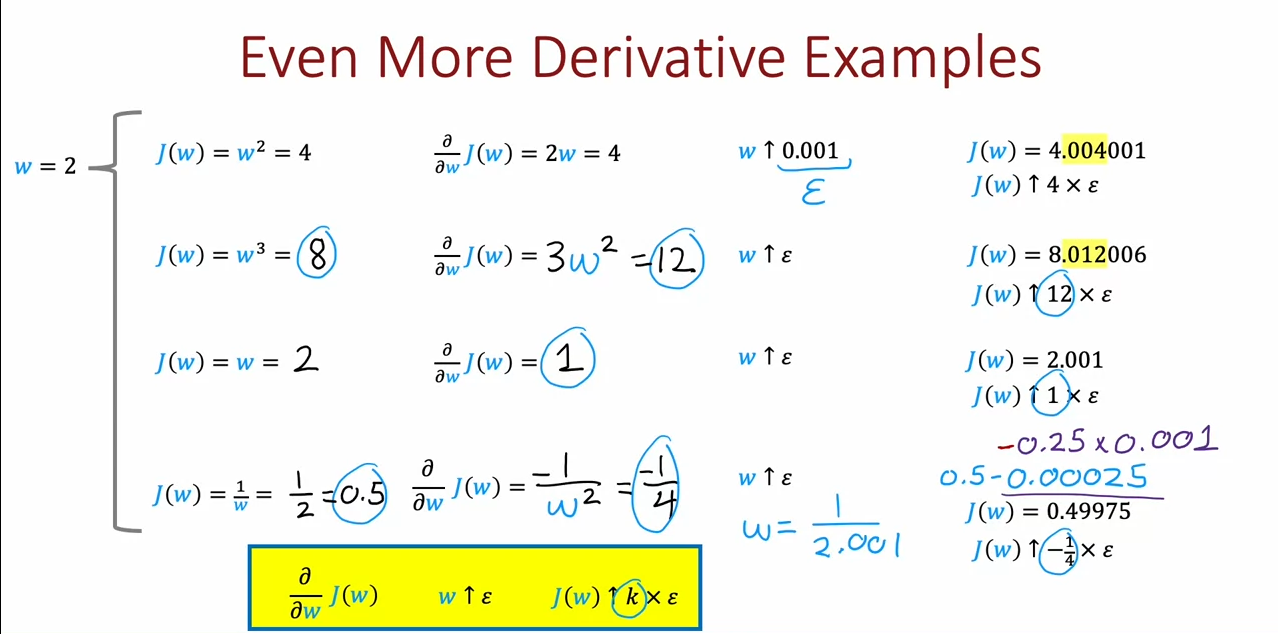

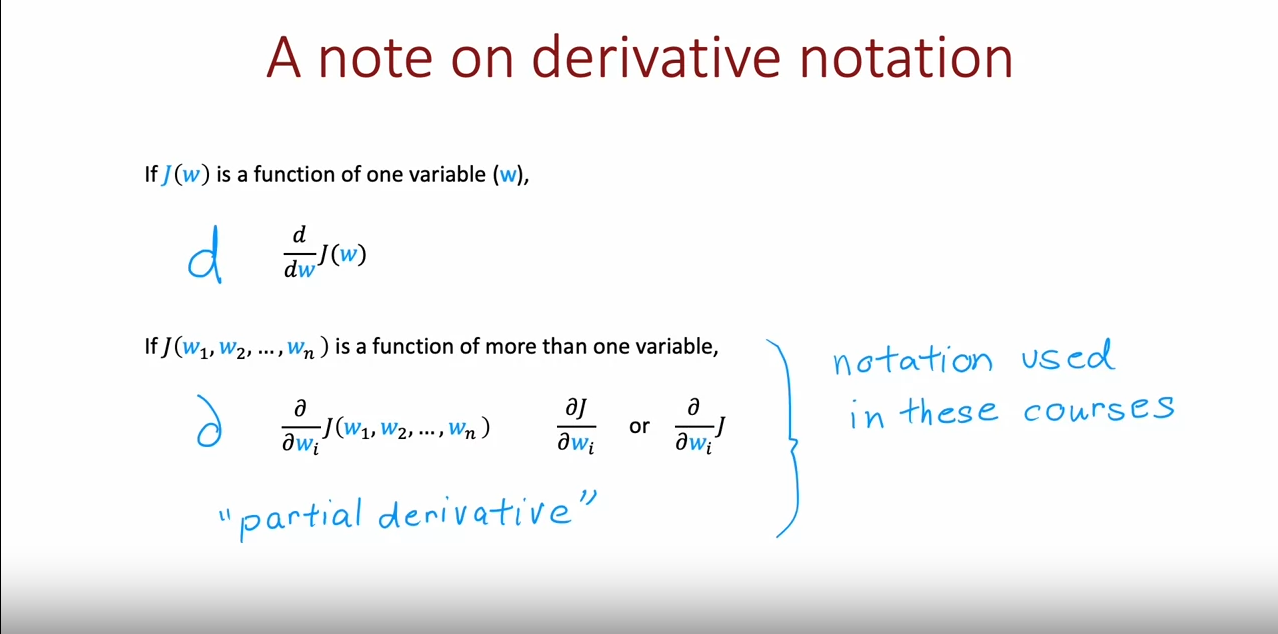

What is a derivative?

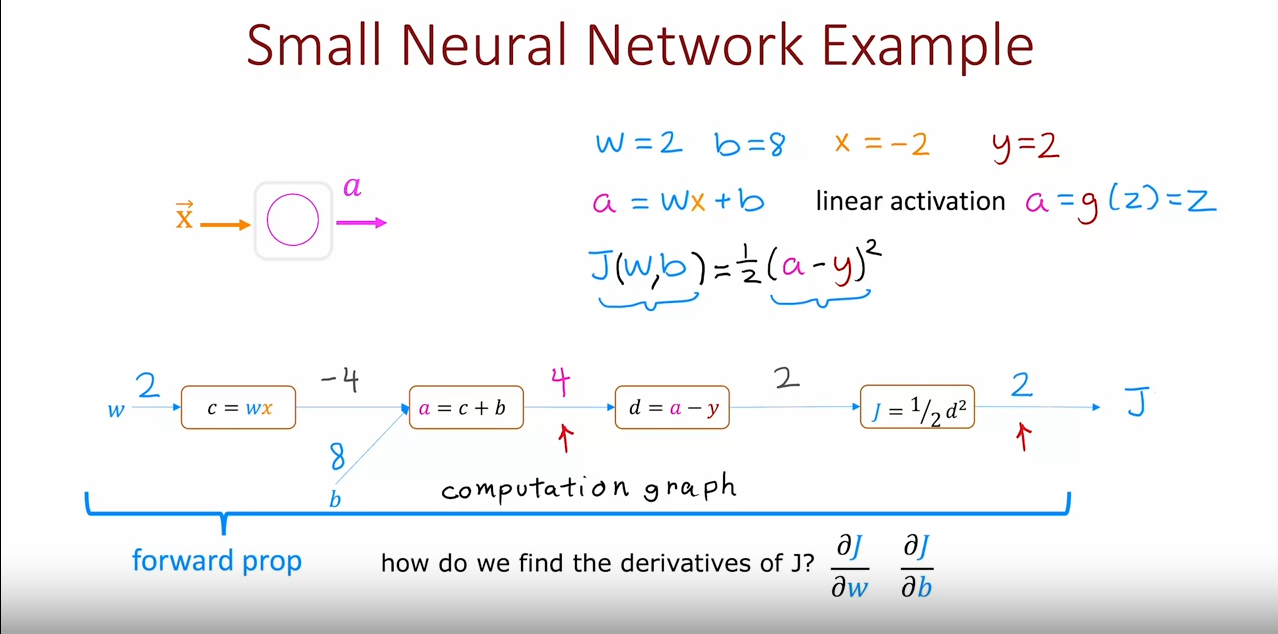
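
Informally, the derivative of J with respect to w says how much J changes when w is nudged by a tiny amount epsilon: if J rises by about k times epsilon, then dJ/dw is about k. A small numerical check on the assumed example J(w) = w^2 at w = 3:

```python
def J(w):
    return w ** 2

def numerical_derivative(f, w, eps=1e-3):
    # Nudge w by eps and measure the change: df/dw is approximately (f(w + eps) - f(w)) / eps
    return (f(w + eps) - f(w)) / eps

w = 3.0
approx = numerical_derivative(J, w)  # about 6.001
exact = 2 * w                        # d(w^2)/dw = 2w = 6
print(approx, exact)
```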

Computation graph
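
A computation graph breaks the cost into small nodes. The forward pass evaluates them left to right; backpropagation then walks right to left, multiplying each upstream derivative by the node's local derivative (the chain rule), so every parameter's derivative comes out of one sweep instead of nudging each parameter separately. The sketch below uses a one-unit linear model with cost J = 1/2 (wx + b - y)^2; the values x = -2, y = 2, w = 2, b = 8 are assumptions for illustration.

```python
def forward(w, b, x, y):
    c = w * x          # node 1
    a = c + b          # node 2: the prediction
    d = a - y          # node 3: the error
    J = 0.5 * d ** 2   # node 4: the cost
    return c, a, d, J

def backward(w, b, x, y):
    """Right-to-left pass: upstream derivative times each node's local derivative."""
    c, a, d, J = forward(w, b, x, y)
    dJ_dd = d            # d(0.5 * d^2)/dd = d
    dJ_da = dJ_dd * 1.0  # d = a - y
    dJ_db = dJ_da * 1.0  # a = c + b
    dJ_dc = dJ_da * 1.0
    dJ_dw = dJ_dc * x    # c = w * x
    return dJ_dw, dJ_db

x, y, w, b = -2.0, 2.0, 2.0, 8.0
print(forward(w, b, x, y))   # (-4.0, 4.0, 2.0, 2.0)
print(backward(w, b, x, y))  # (-4.0, 2.0)
```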

Larger neural network example