2. Neural network training

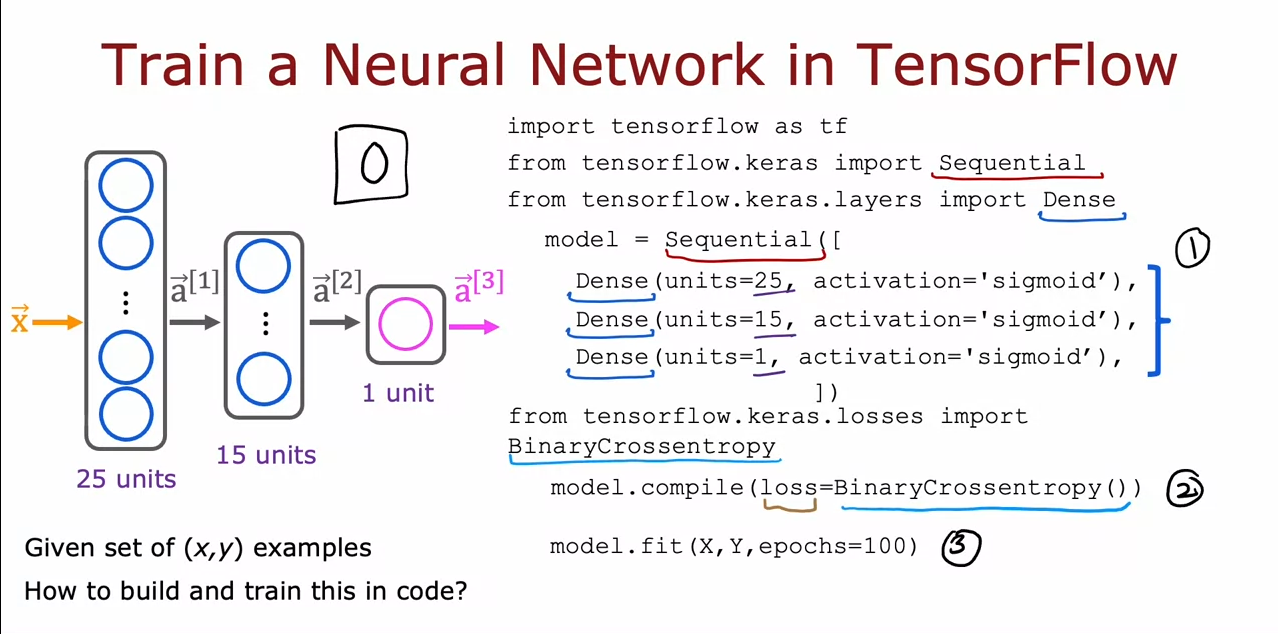

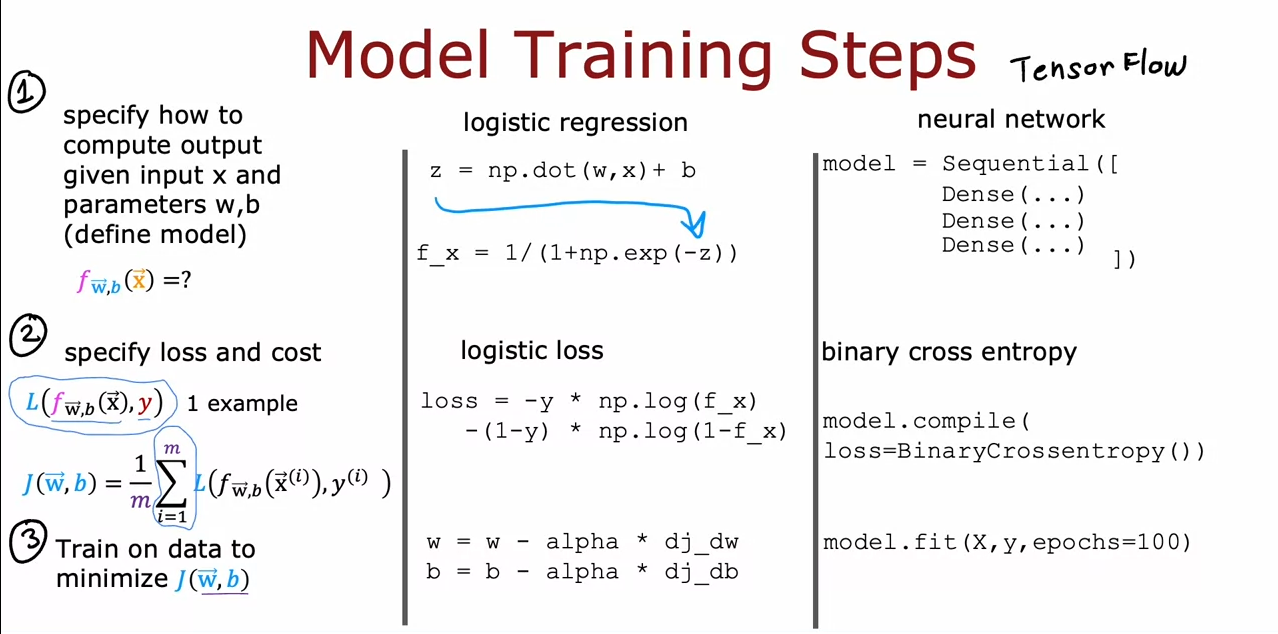

TensorFlow Implementation

Train a Neural Network in TensorFlow

Training Details

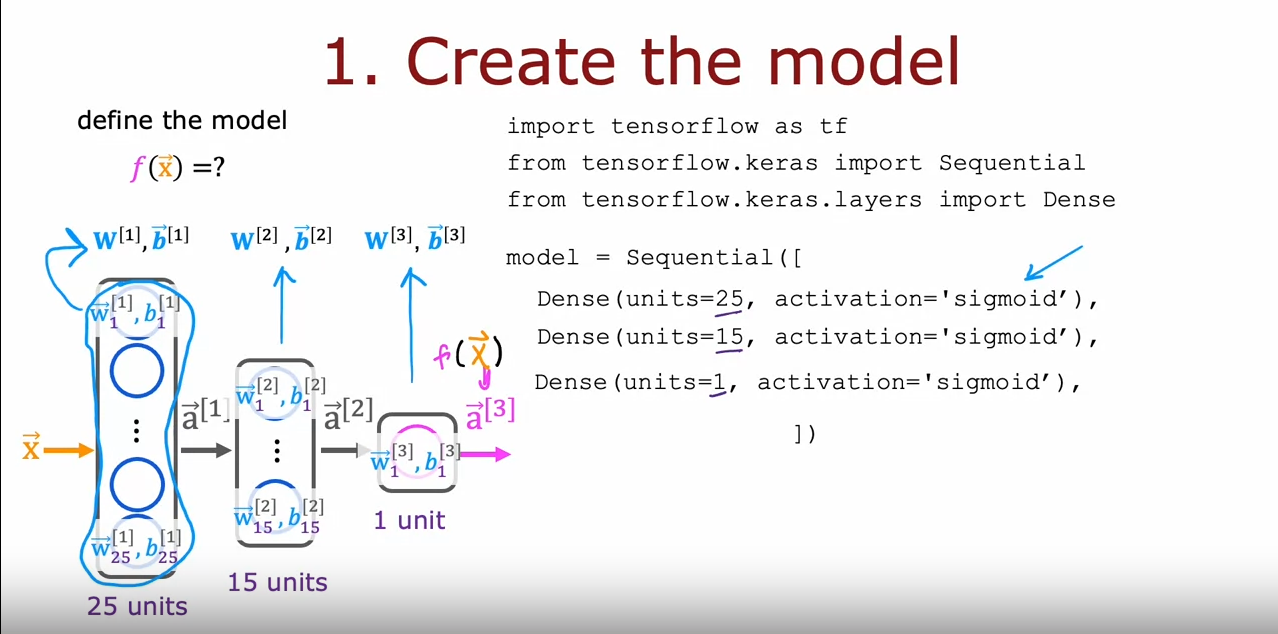

1. Create the model

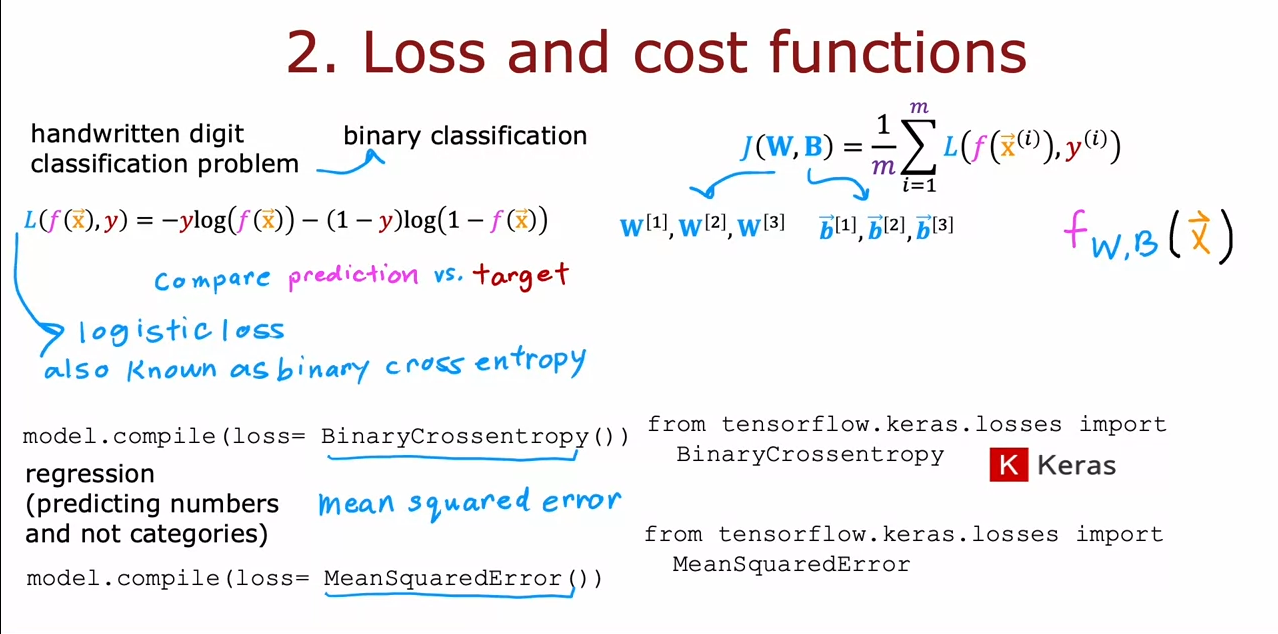

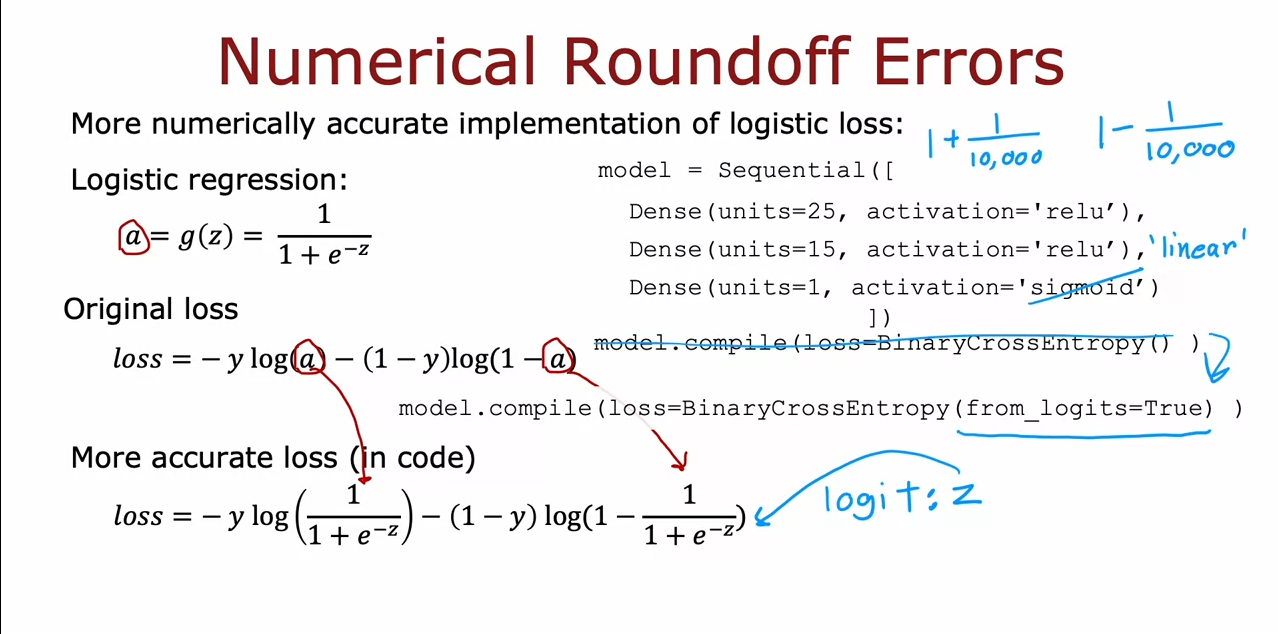

2. Loss and cost functions

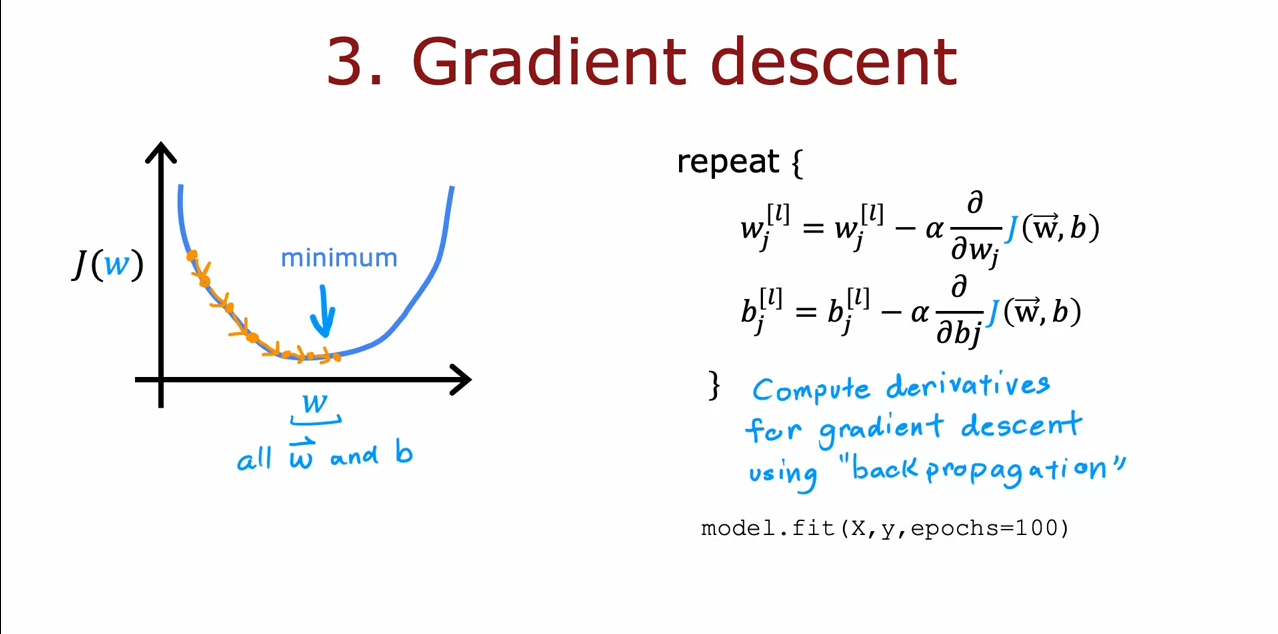
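
A minimal sketch of the loss/cost distinction, assuming the binary cross-entropy loss that is commonly used for binary classification (the figure may use a different loss). The function names are illustrative, not from the notes. The loss scores one example; the cost averages the loss over the training set.

```python
import math

def binary_cross_entropy(y, p, eps=1e-12):
    """Loss for one example: label y in {0, 1}, predicted probability p."""
    p = min(max(p, eps), 1.0 - eps)  # clip so log() never receives 0
    return -y * math.log(p) - (1 - y) * math.log(1.0 - p)

def cost(labels, probs):
    """Cost: the average of the per-example losses."""
    losses = [binary_cross_entropy(y, p) for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)

# Confident, correct predictions give a lower cost than uncertain ones.
print(cost([1, 0, 1], [0.9, 0.2, 0.6]))
```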

3. Gradient descent

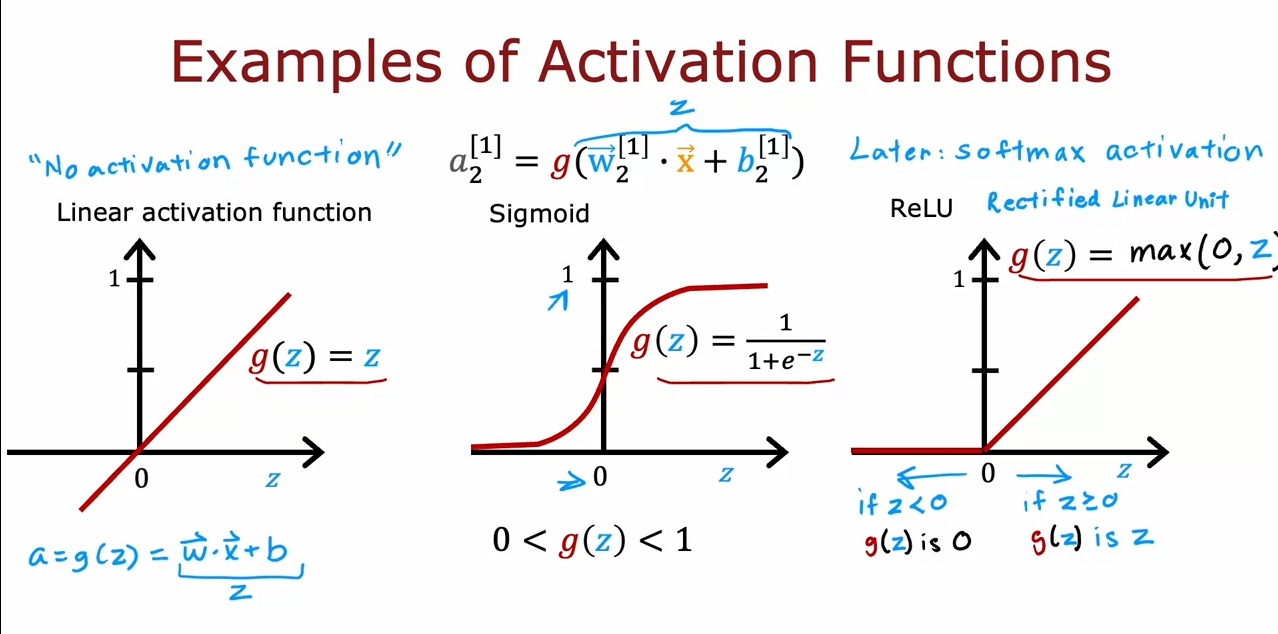
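
The update rule can be sketched on a one-parameter problem. This is a toy illustration, assuming a hypothetical cost J(w) = (w - 3)^2 rather than a neural network cost; in a real network the derivative would come from backpropagation.

```python
def gradient_descent(grad, w0, alpha=0.1, steps=100):
    """Repeat w := w - alpha * dJ/dw for a fixed number of steps."""
    w = w0
    for _ in range(steps):
        w = w - alpha * grad(w)
    return w

# Toy cost J(w) = (w - 3)^2, so dJ/dw = 2 * (w - 3); the minimum is at w = 3.
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)
```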

Alternatives to the sigmoid activation

ReLU (Rectified Linear Unit)

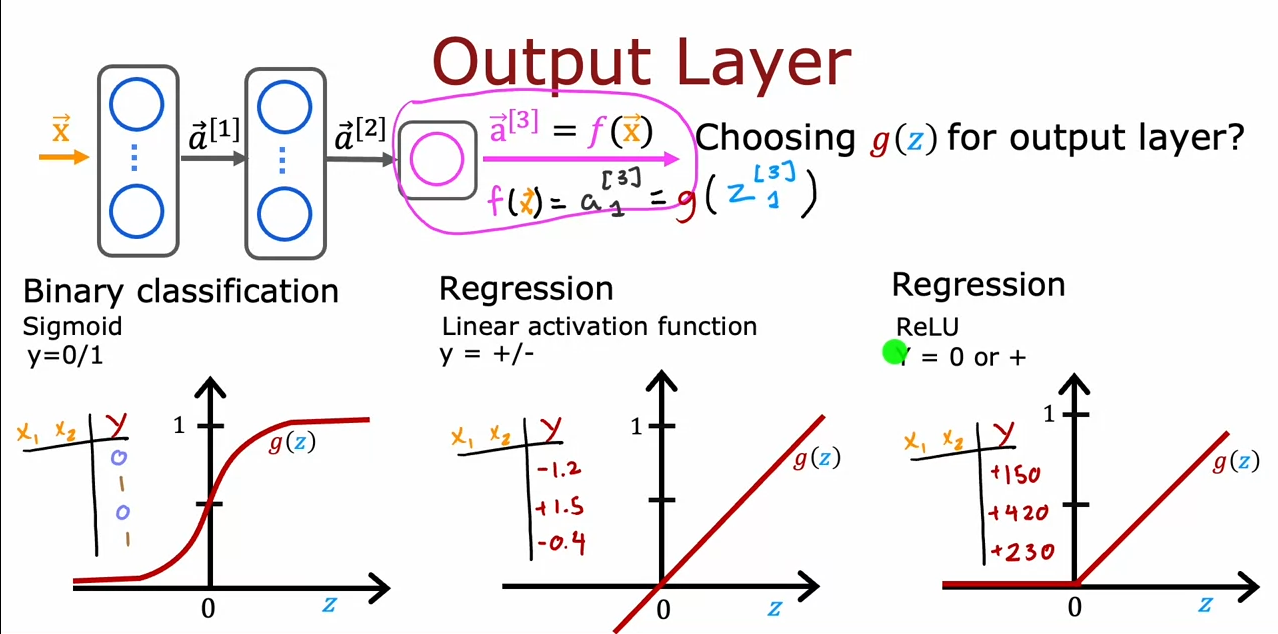

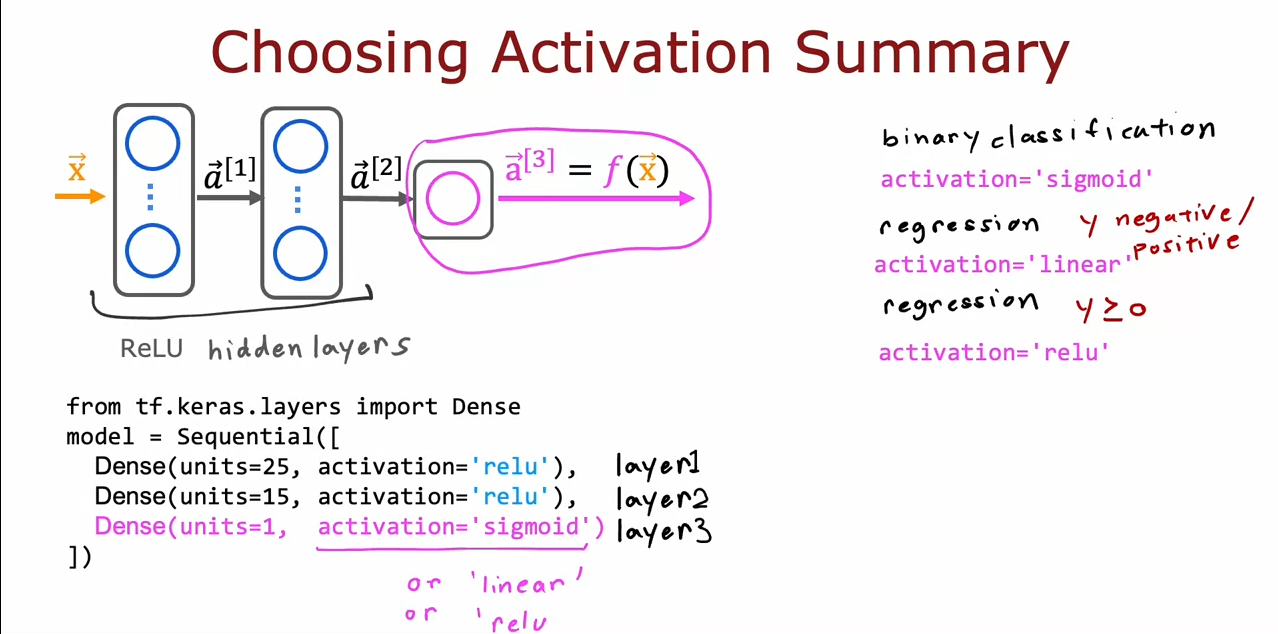

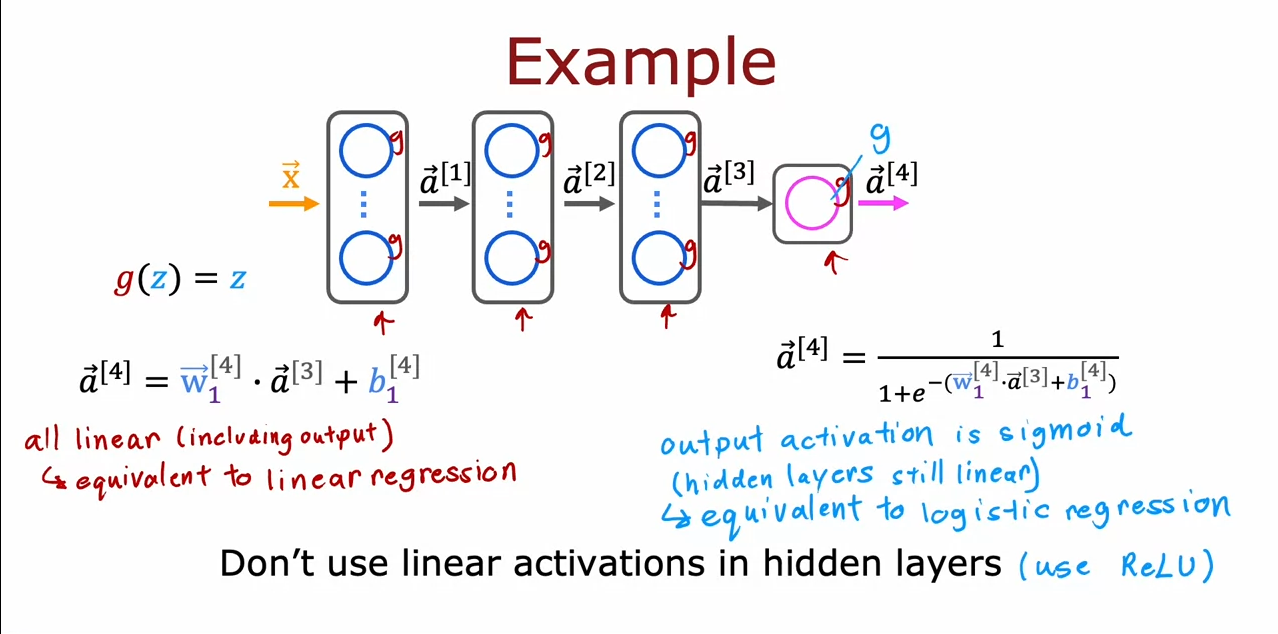
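
As a quick reference, ReLU is g(z) = max(0, z). A minimal sketch in plain Python (the function name is illustrative):

```python
def relu(z):
    """Rectified Linear Unit: passes positive inputs through, zeroes the rest."""
    return max(0.0, z)

print([relu(z) for z in (-2.0, 0.0, 3.5)])  # [0.0, 0.0, 3.5]
```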

Choosing activation functions

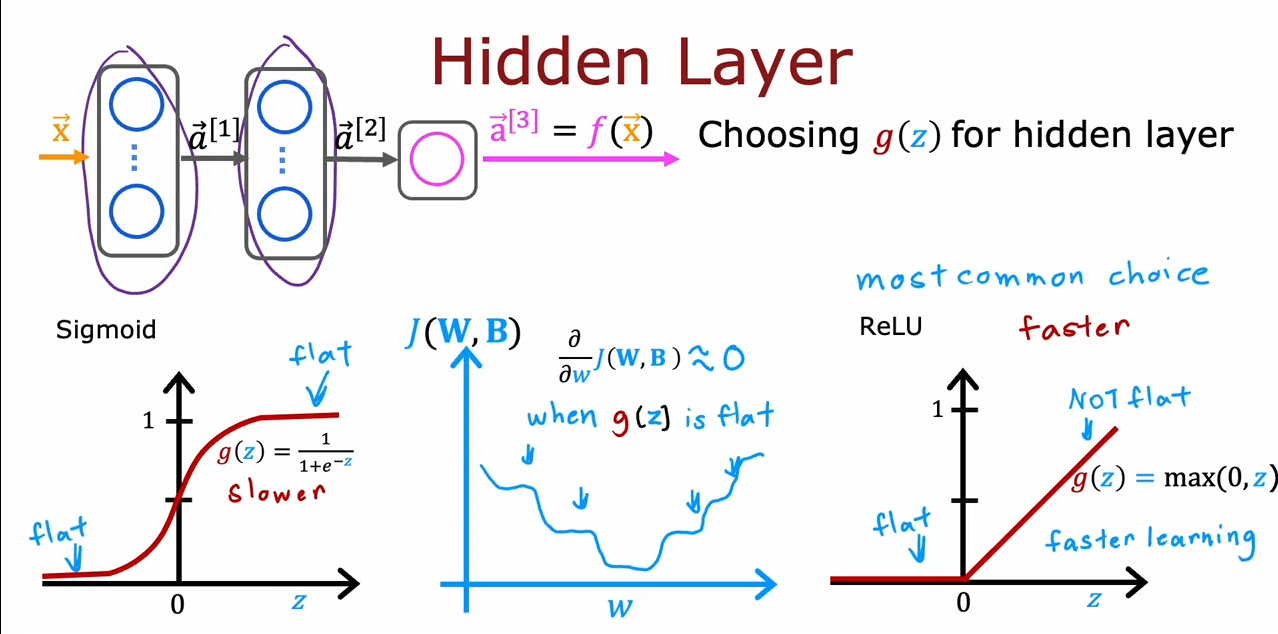

Hidden Layer

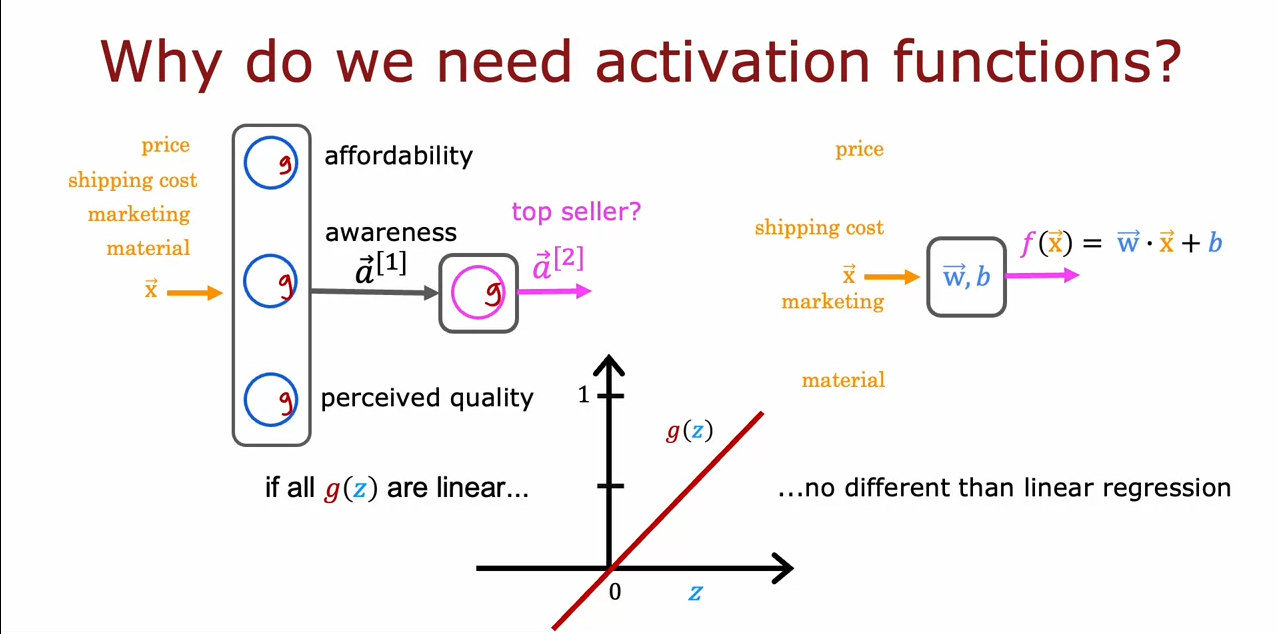

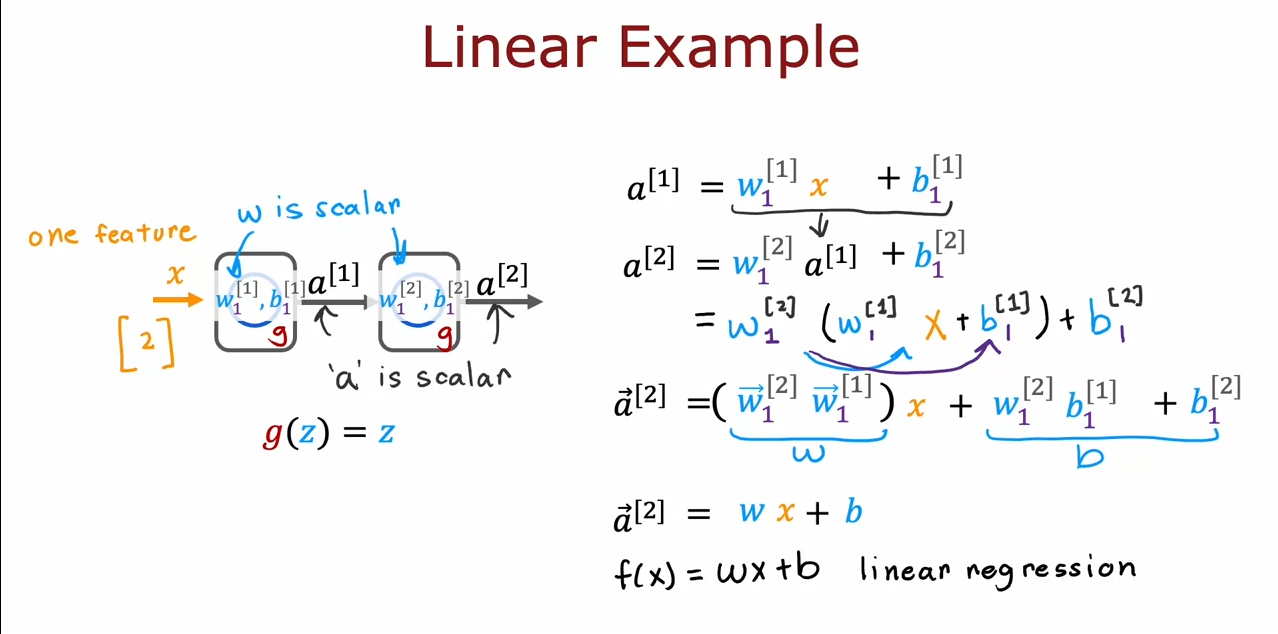

Why do we need activation functions?

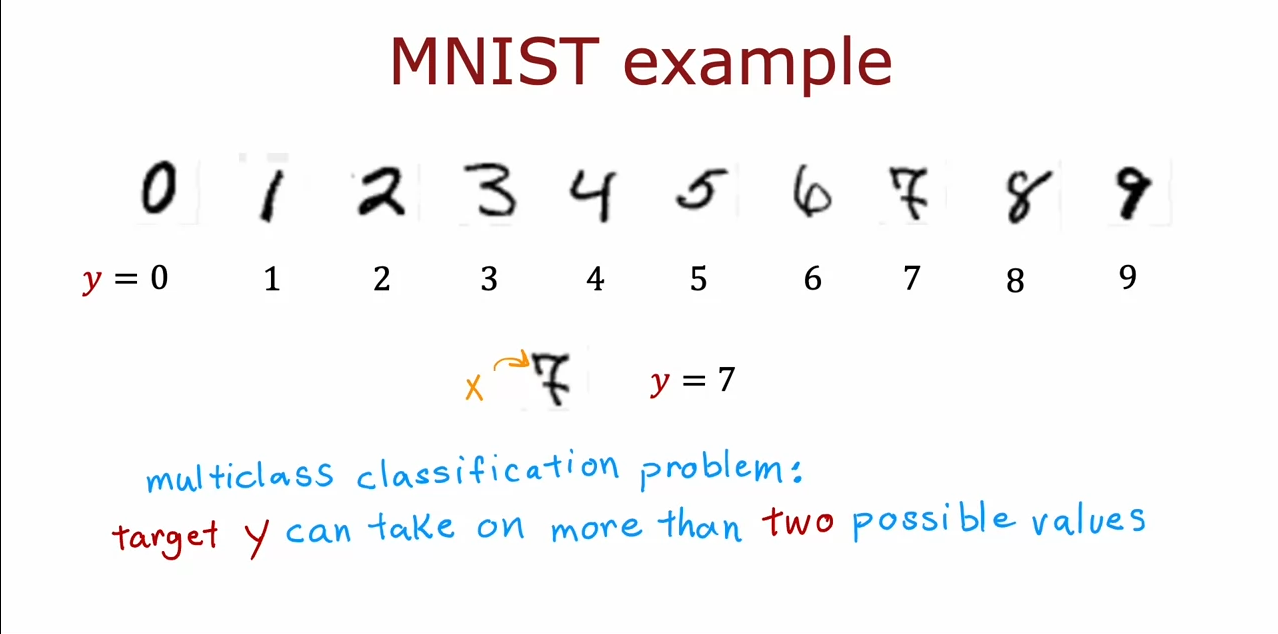

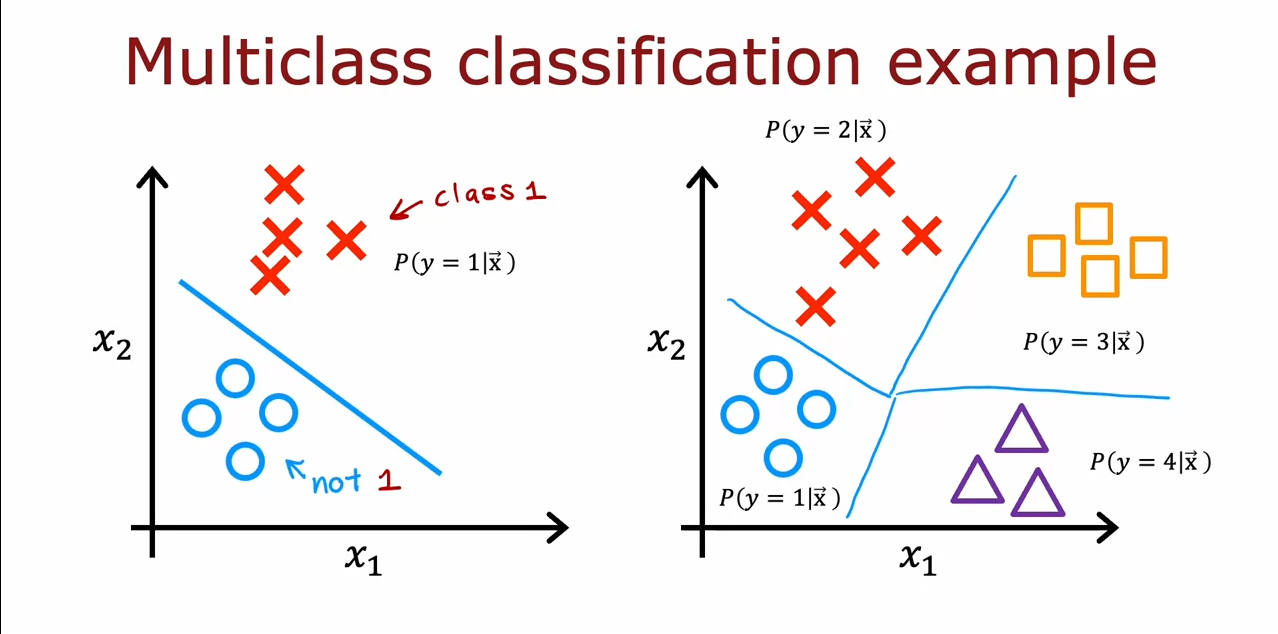
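
One way to see the point: if every layer used a linear activation, stacked layers would collapse into a single linear function, so the network could not learn anything beyond a linear model. The sketch below checks this for two one-unit layers; the weights are arbitrary values chosen for illustration.

```python
def linear_layer(x, w, b):
    """A one-unit layer with linear activation g(z) = z."""
    return w * x + b

w1, b1 = 2.0, -1.0  # layer 1 (arbitrary illustrative values)
w2, b2 = 0.5, 4.0   # layer 2

def two_linear_layers(x):
    return linear_layer(linear_layer(x, w1, b1), w2, b2)

def single_linear_layer(x):
    # w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2)
    return linear_layer(x, w2 * w1, w2 * b1 + b2)

for x in (-3.0, 0.0, 2.5):
    print(x, two_linear_layers(x), single_linear_layer(x))
```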

Multiclass

The target y can take on more than two possible values.

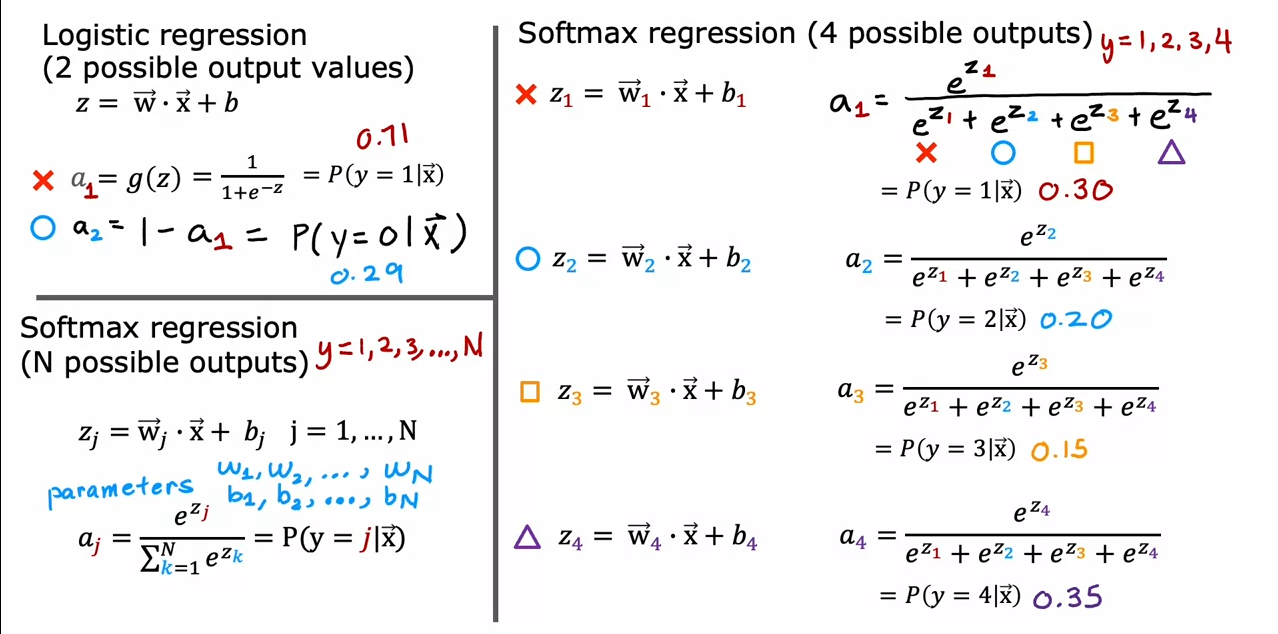

Softmax

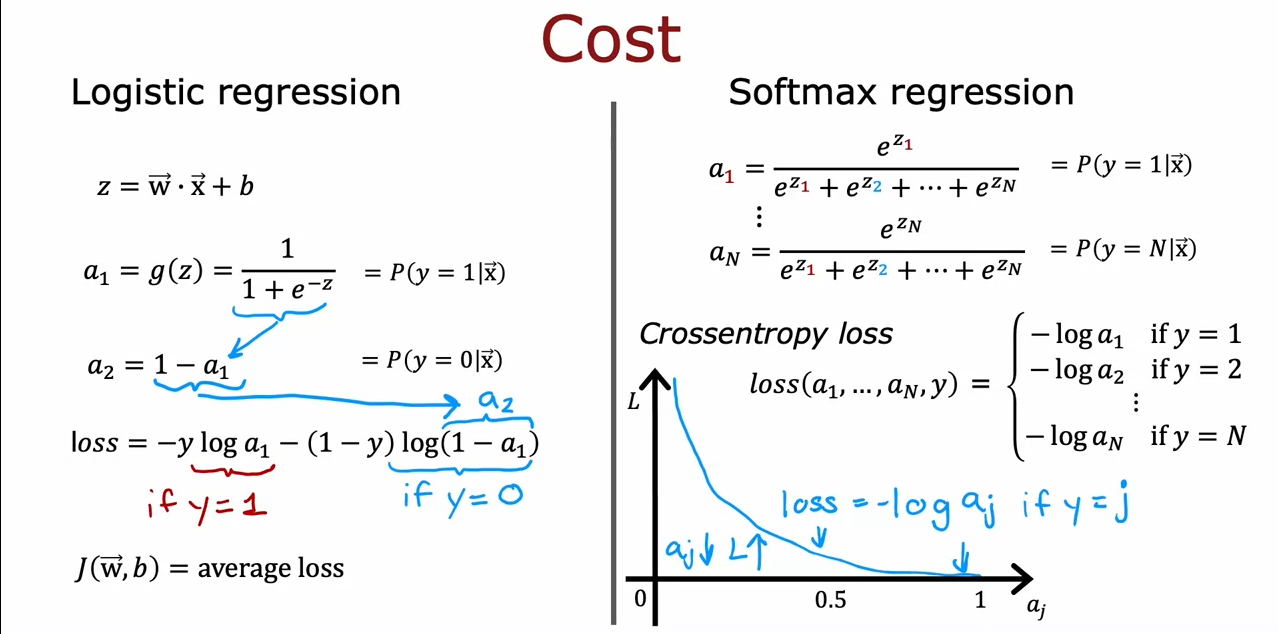
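
A minimal sketch of the softmax activation, a_j = e^{z_j} / sum_k e^{z_k}, in plain Python. The input values are arbitrary; the outputs are probabilities that sum to 1.

```python
import math

def softmax(z):
    """Map a list of scores z to probabilities that sum to 1."""
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

a = softmax([2.0, 1.0, 0.1])
print(a, sum(a))
```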

Cost

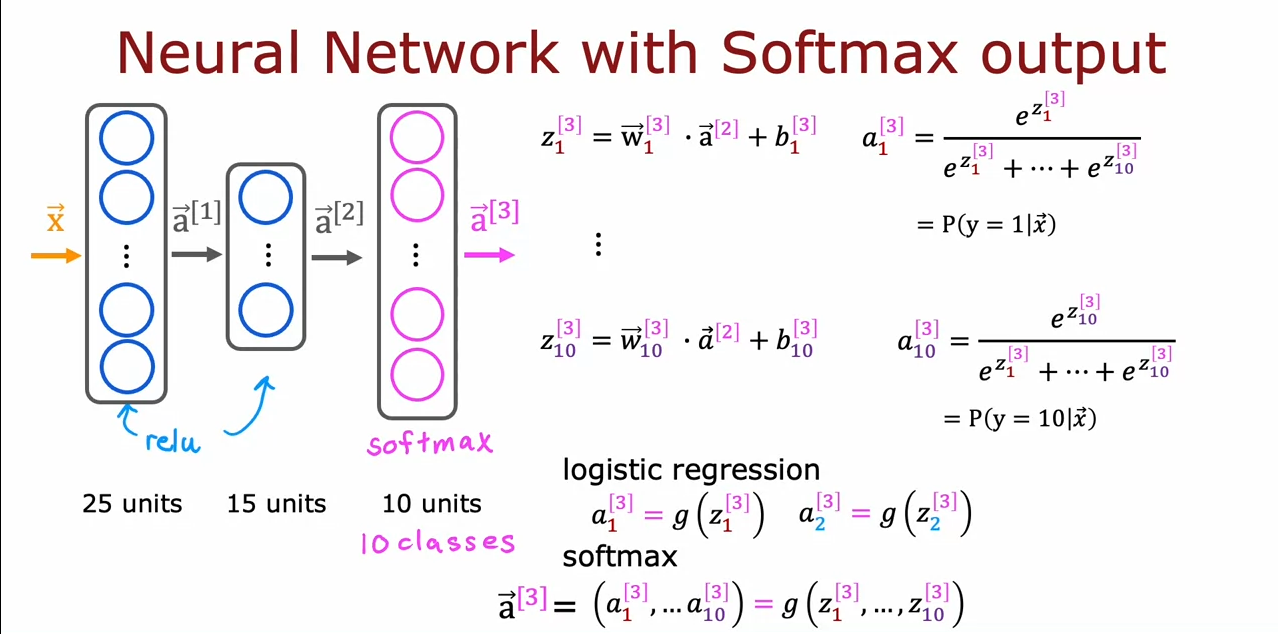

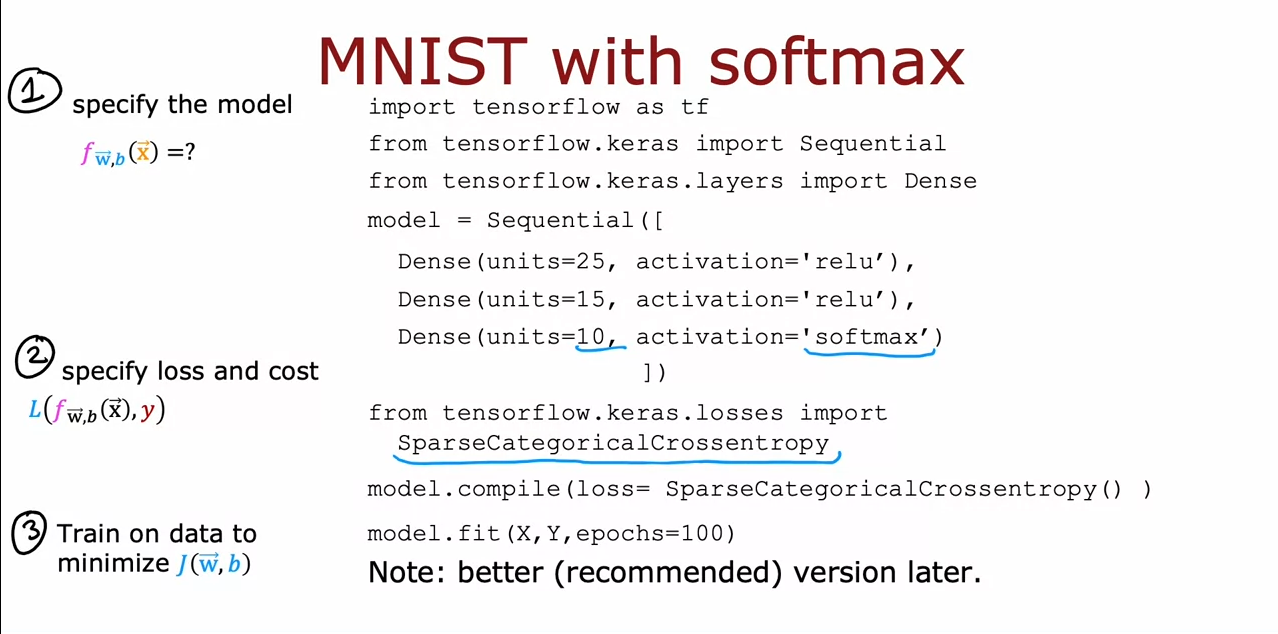

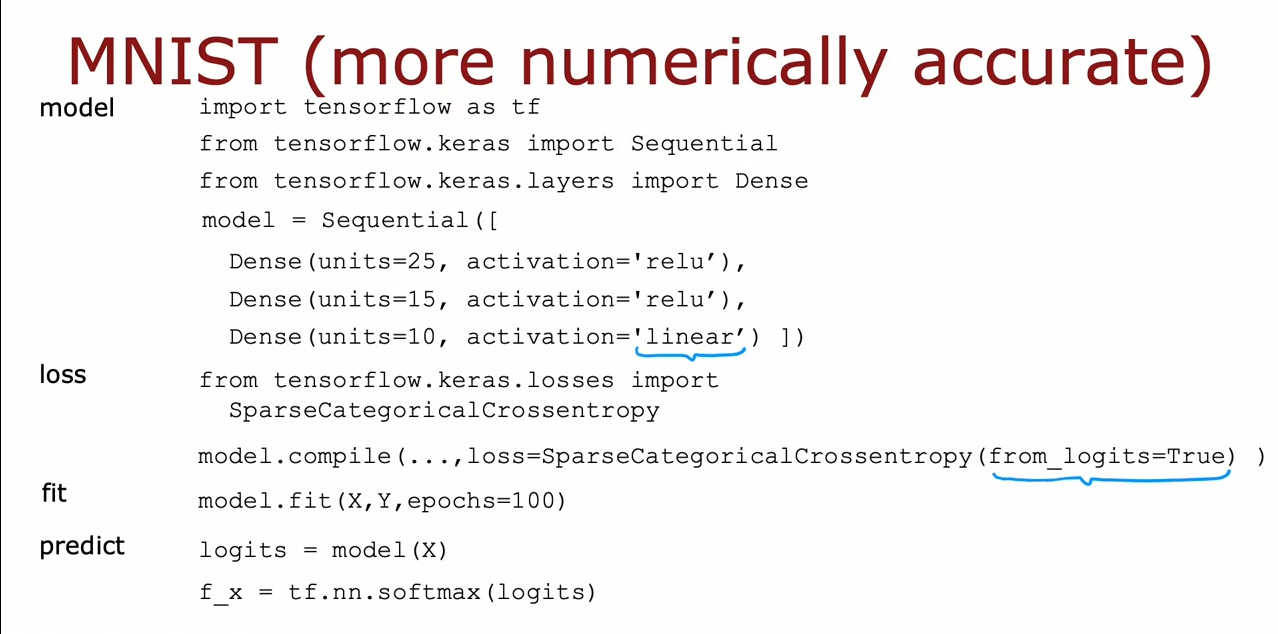
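
A sketch of the softmax loss, assuming the usual cross-entropy form: the loss is -log of the probability the model assigns to the true class. The function name and the probabilities are illustrative.

```python
import math

def softmax_cross_entropy_loss(a, y):
    """a: softmax output probabilities, y: index of the true class."""
    return -math.log(a[y])

a = [0.7, 0.2, 0.1]
# The loss is small when the true class has high probability, large otherwise.
print(softmax_cross_entropy_loss(a, 0), softmax_cross_entropy_loss(a, 2))
```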

Neural network with softmax output

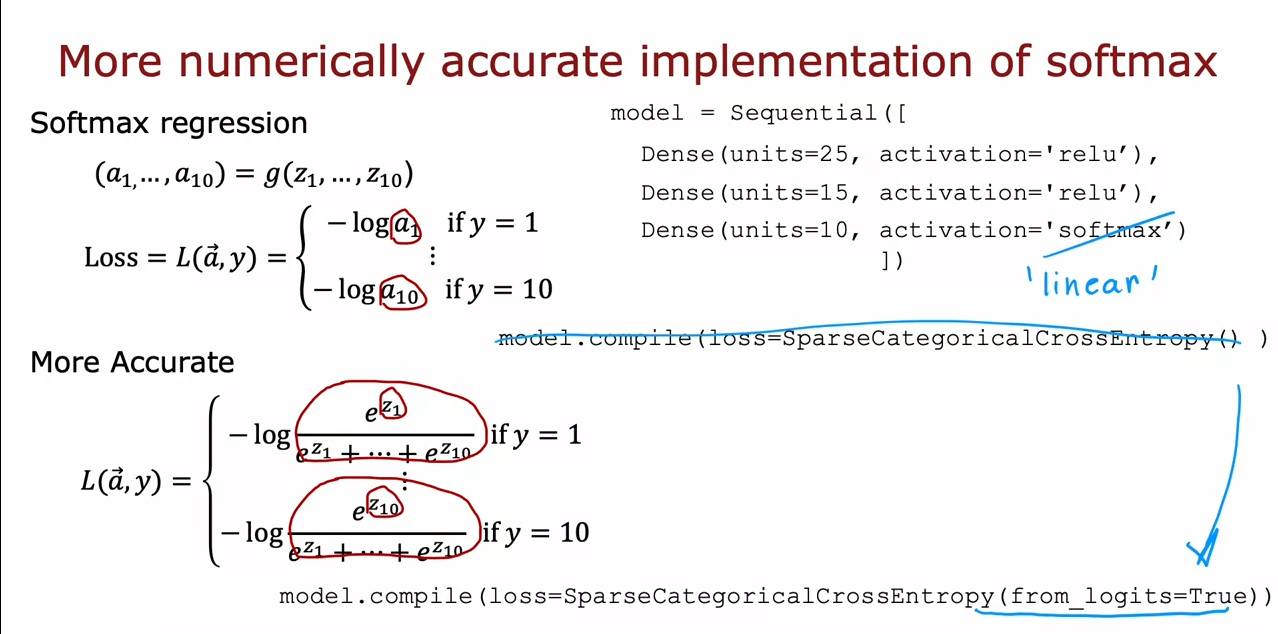

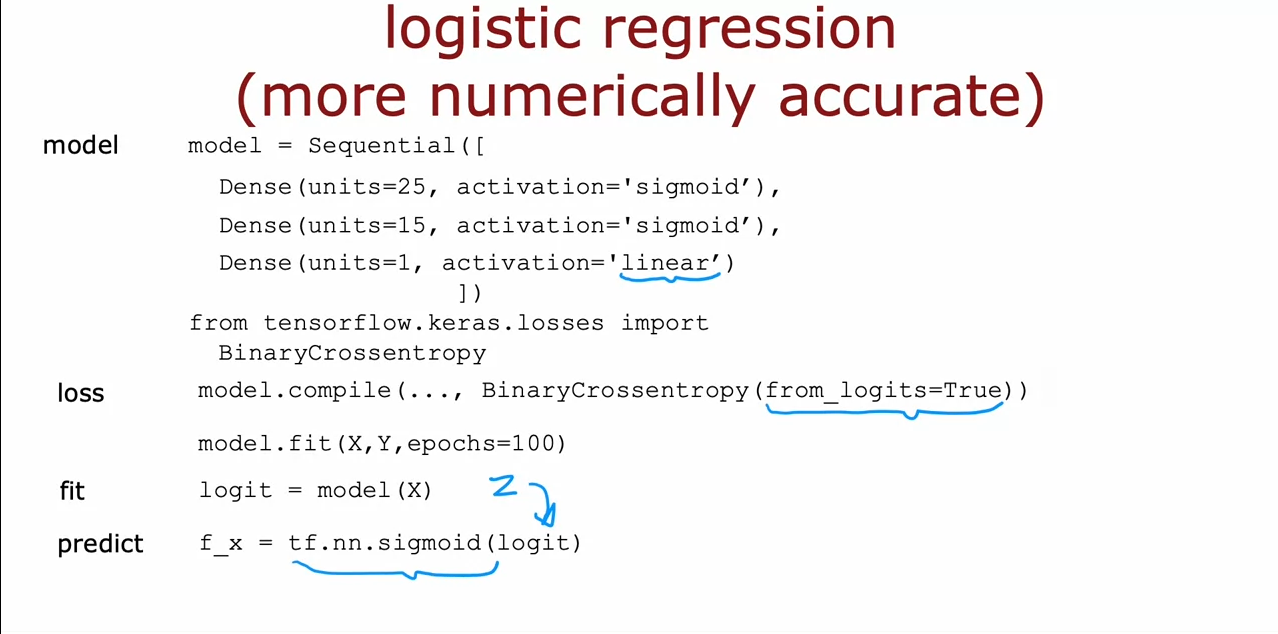

Improved implementation of softmax

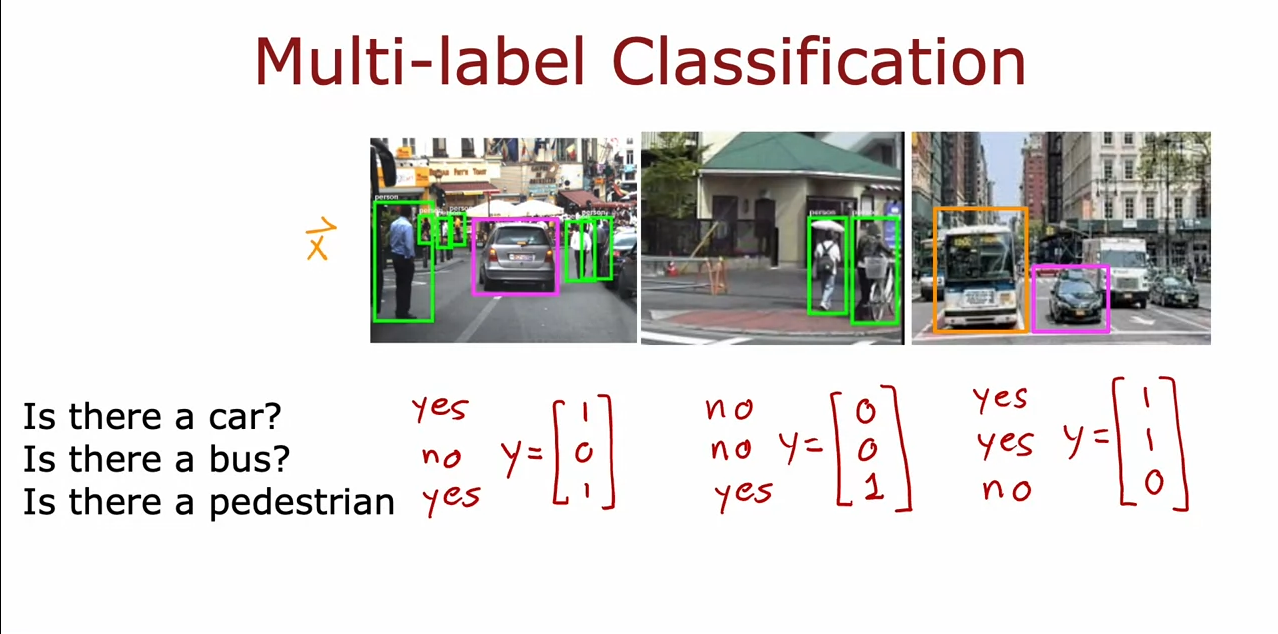

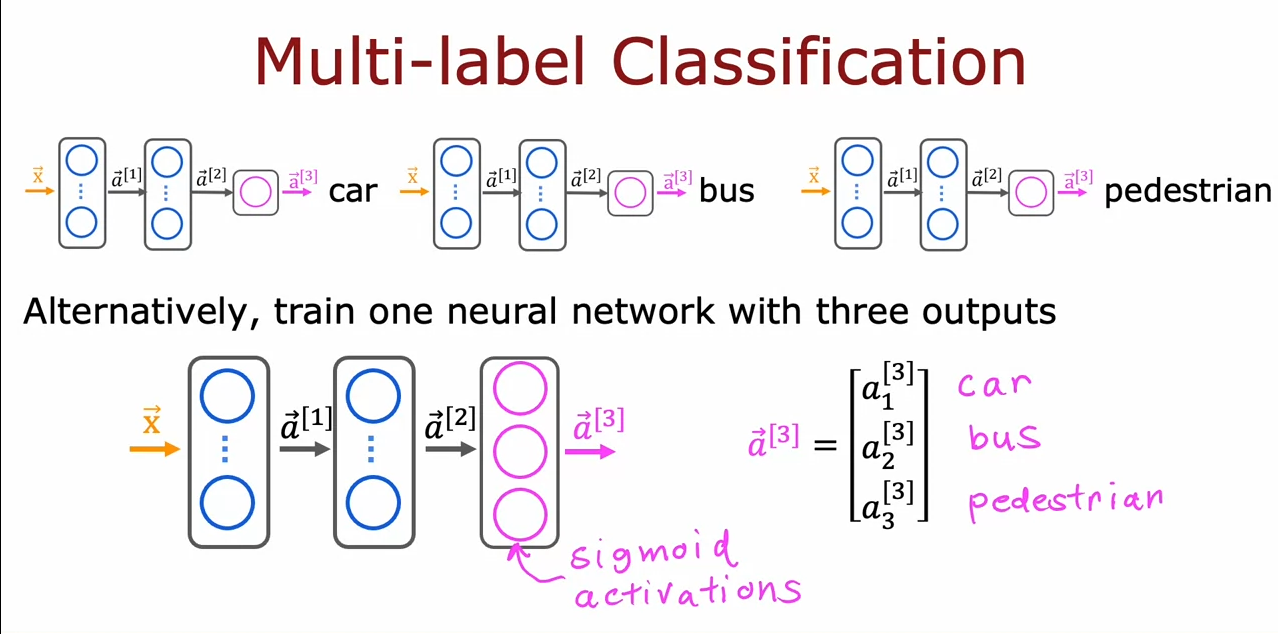
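
The numerical issue can be sketched in plain Python. Computing softmax first and then taking the log can overflow or lose precision; computing the loss directly from the raw scores (logits) avoids this. In TensorFlow the same idea is usually reached by giving the output layer a linear activation and passing `from_logits=True` to the loss; that this is what the figures show is an assumption. The log-sum-exp trick below is one common way to do it, not necessarily what the library does internally.

```python
import math

def naive_loss(z, y):
    """Softmax, then -log: math.exp overflows for large logits."""
    exps = [math.exp(v) for v in z]
    return -math.log(exps[y] / sum(exps))

def stable_loss(z, y):
    """Same loss from logits, using log-sum-exp with the max subtracted."""
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
    return log_sum_exp - z[y]

print(naive_loss([2.0, 1.0, 0.1], 0), stable_loss([2.0, 1.0, 0.1], 0))
print(stable_loss([1000.0, 1000.0], 0))  # naive_loss raises OverflowError here
```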

Classification with multiple outputs

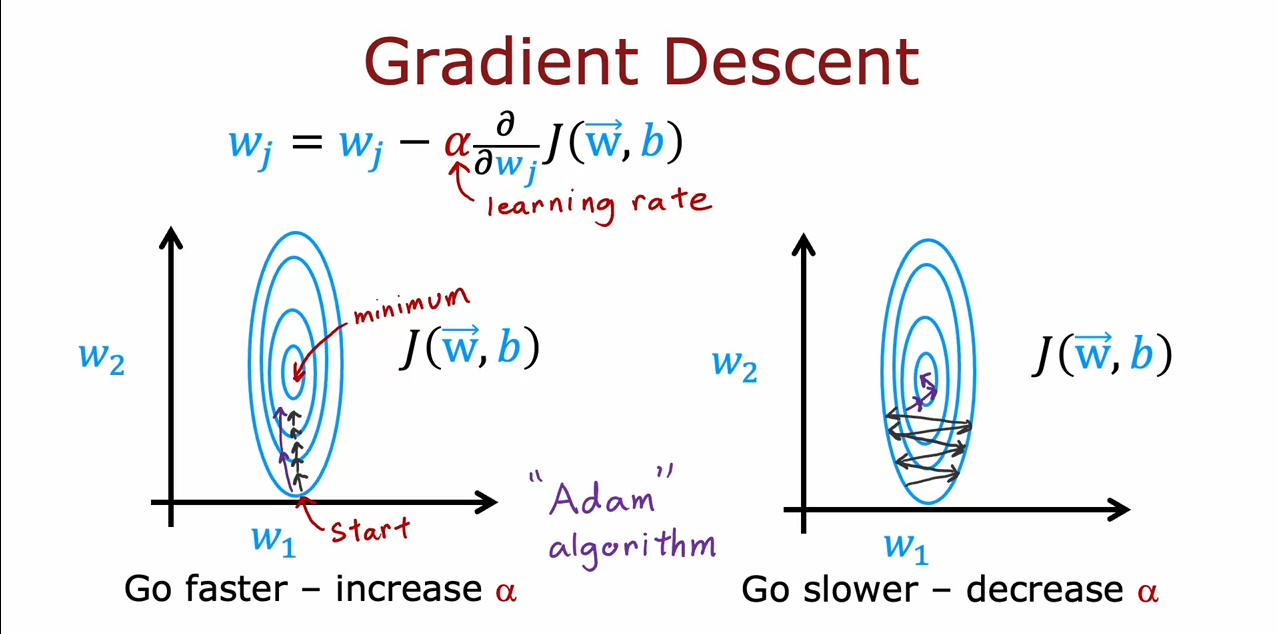

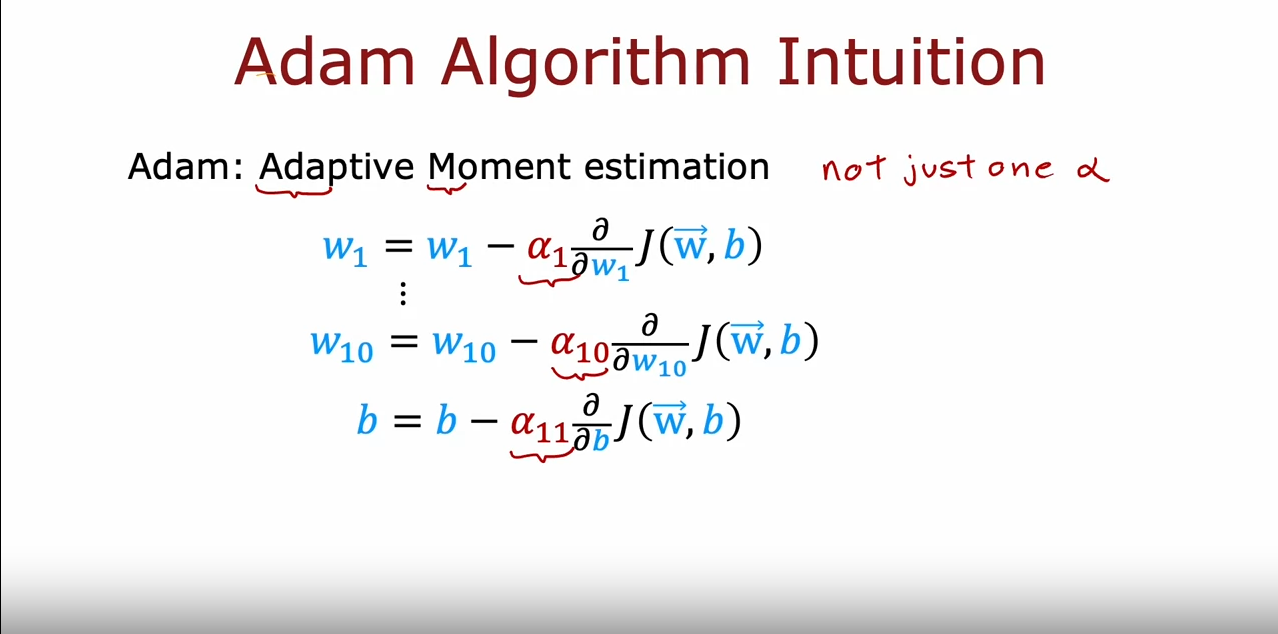

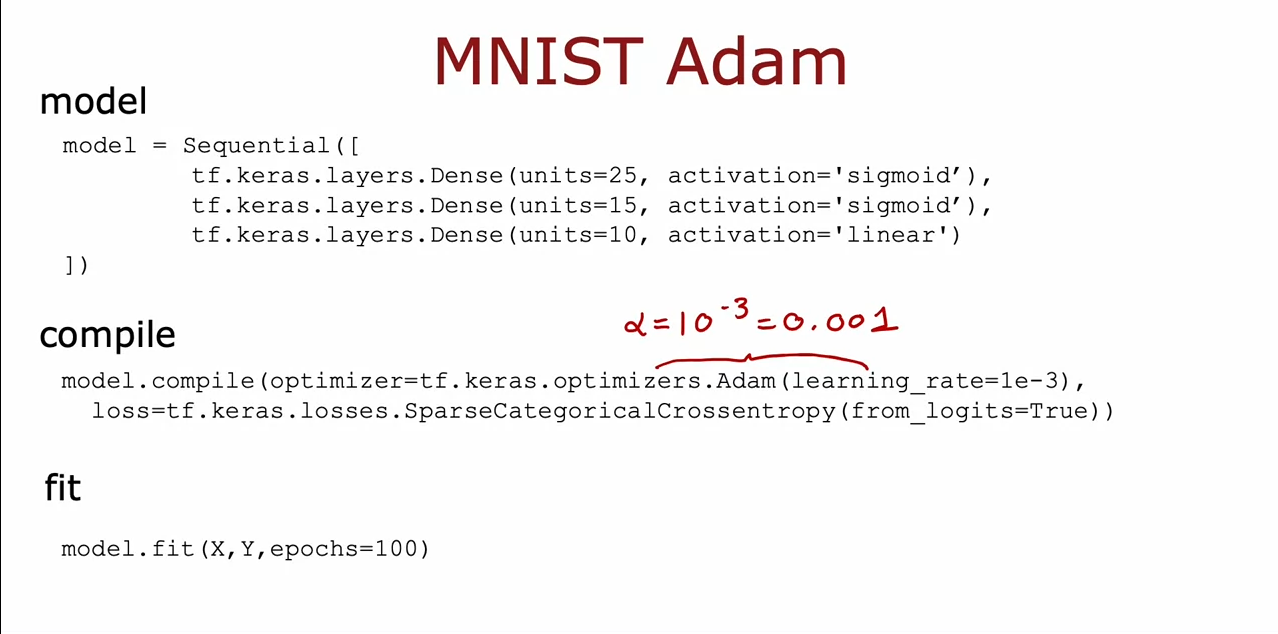
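
A minimal sketch of multi-label output, assuming one sigmoid unit per label so that each label is an independent yes/no decision (unlike softmax, the outputs need not sum to 1). The label names and scores are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def multilabel_predict(logits, threshold=0.5):
    """Apply a sigmoid to each output and threshold each one separately."""
    probs = [sigmoid(z) for z in logits]
    return [1 if p >= threshold else 0 for p in probs]

# Hypothetical labels: car, bus, pedestrian
print(multilabel_predict([2.0, -1.5, 0.3]))  # [1, 0, 1]
```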

Advanced Optimization

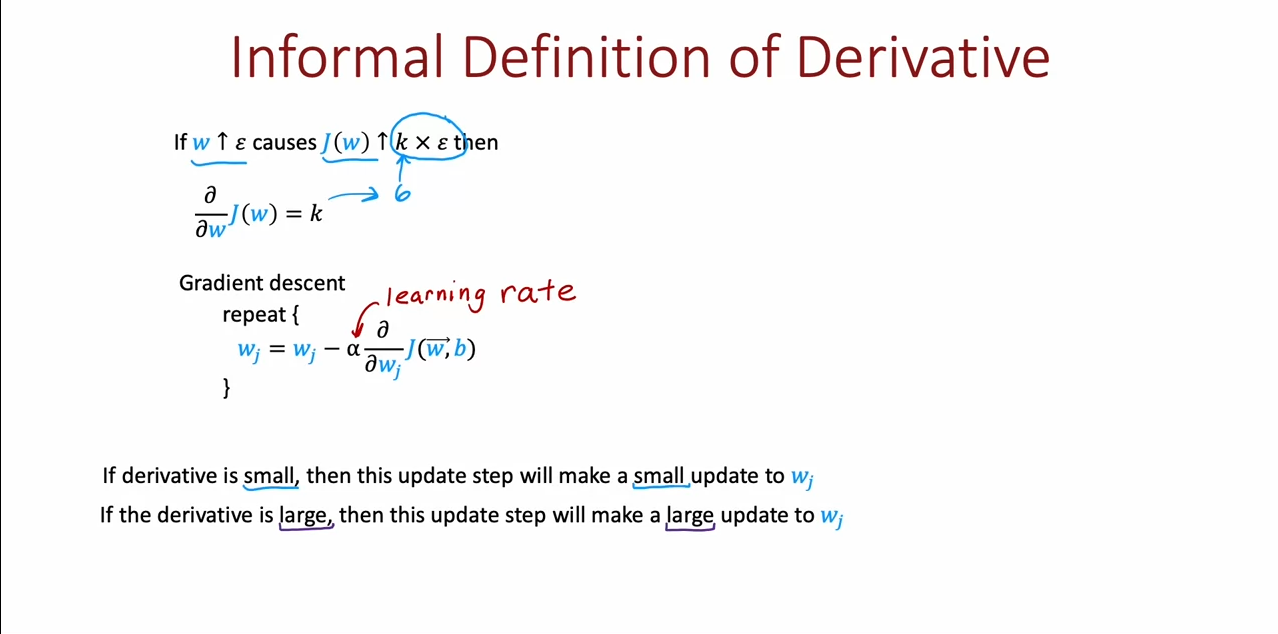

Gradient Descent

Adam Algorithm Intuition

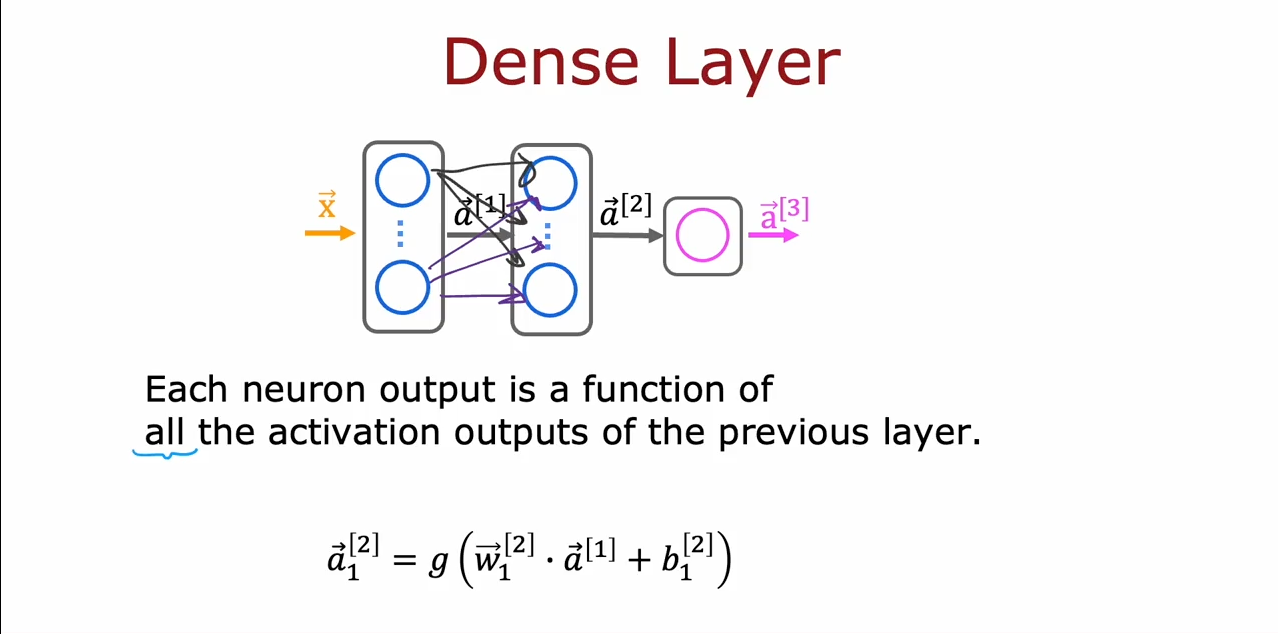

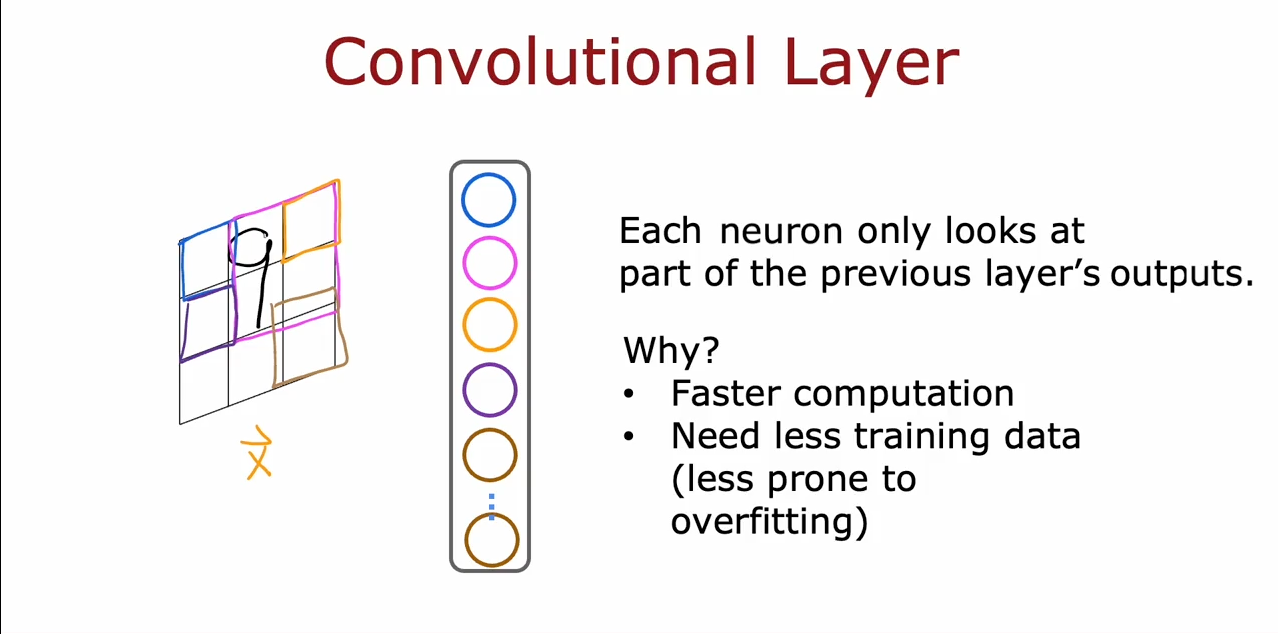

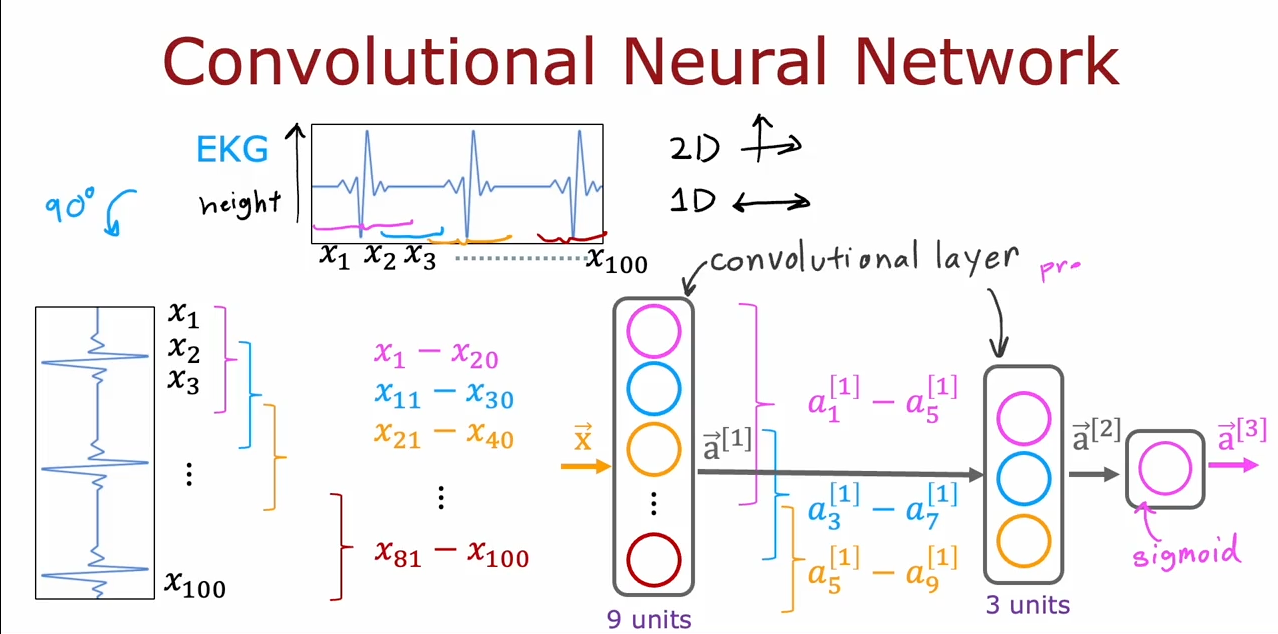
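
For reference, a sketch of the standard Adam update for a single parameter, applied to a toy cost J(w) = (w - 3)^2. The hyperparameter defaults are the commonly quoted ones and the cost is a hypothetical example, not taken from the figures. The running averages `m` and `v` let the step size adapt: steady gradients keep the step near `alpha`, while oscillating gradients shrink it.

```python
import math

def adam_minimize(grad, w0, alpha=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    """Minimize a one-parameter cost with the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    history = [w]
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction
        v_hat = v / (1 - beta2 ** t)
        w = w - alpha * m_hat / (math.sqrt(v_hat) + eps)
        history.append(w)
    return w, history

w_final, history = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(history[1], w_final)  # the first step has size close to alpha
```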

Additional Layer Types

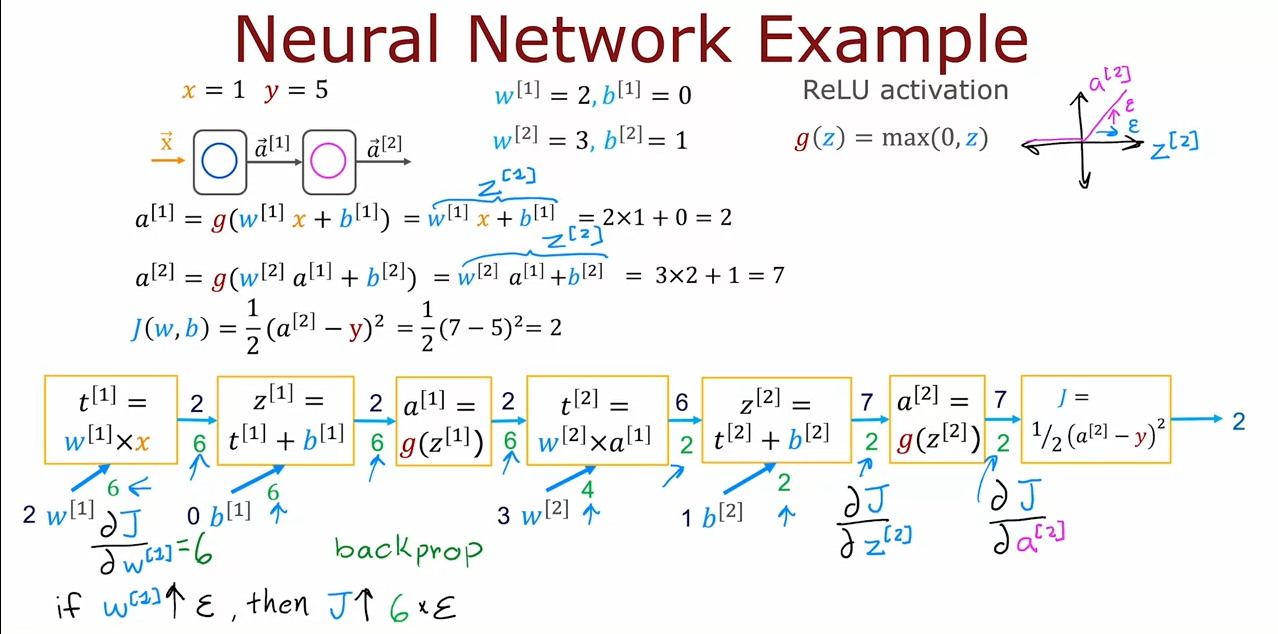

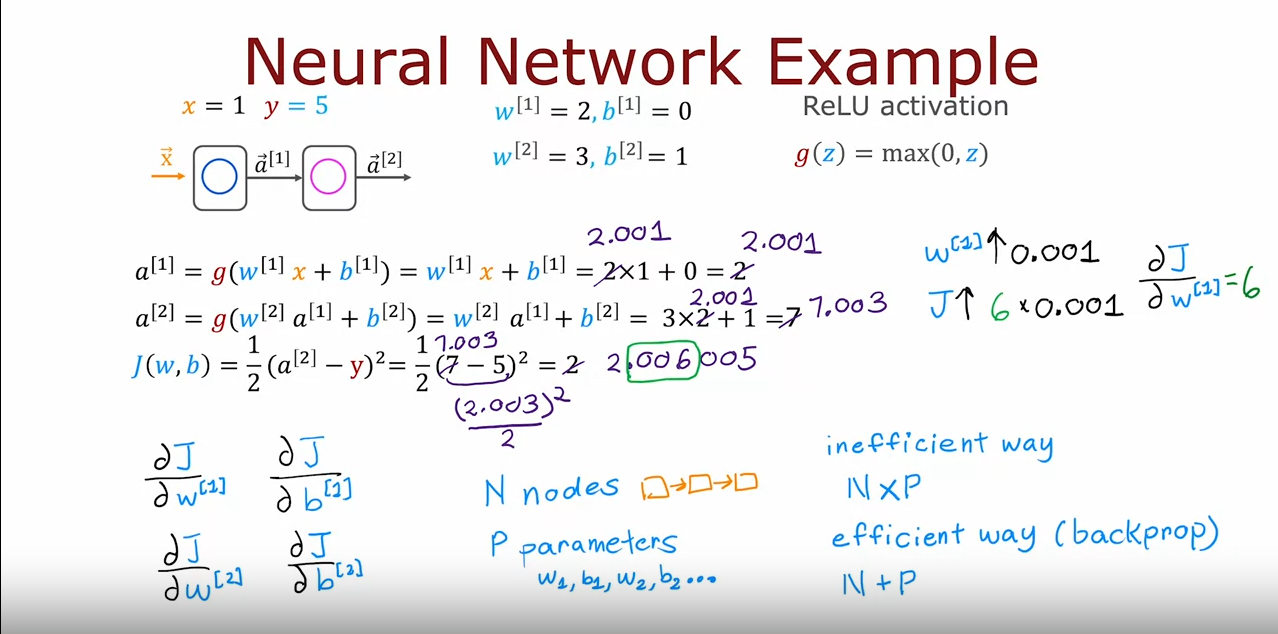

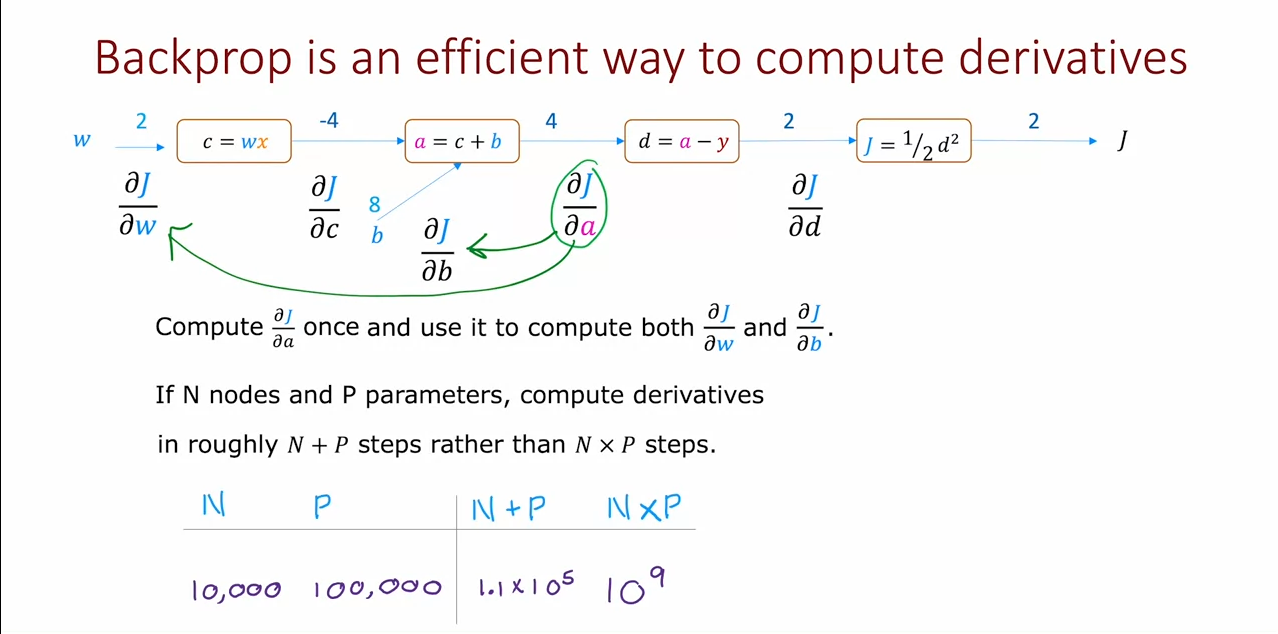

Backpropagation

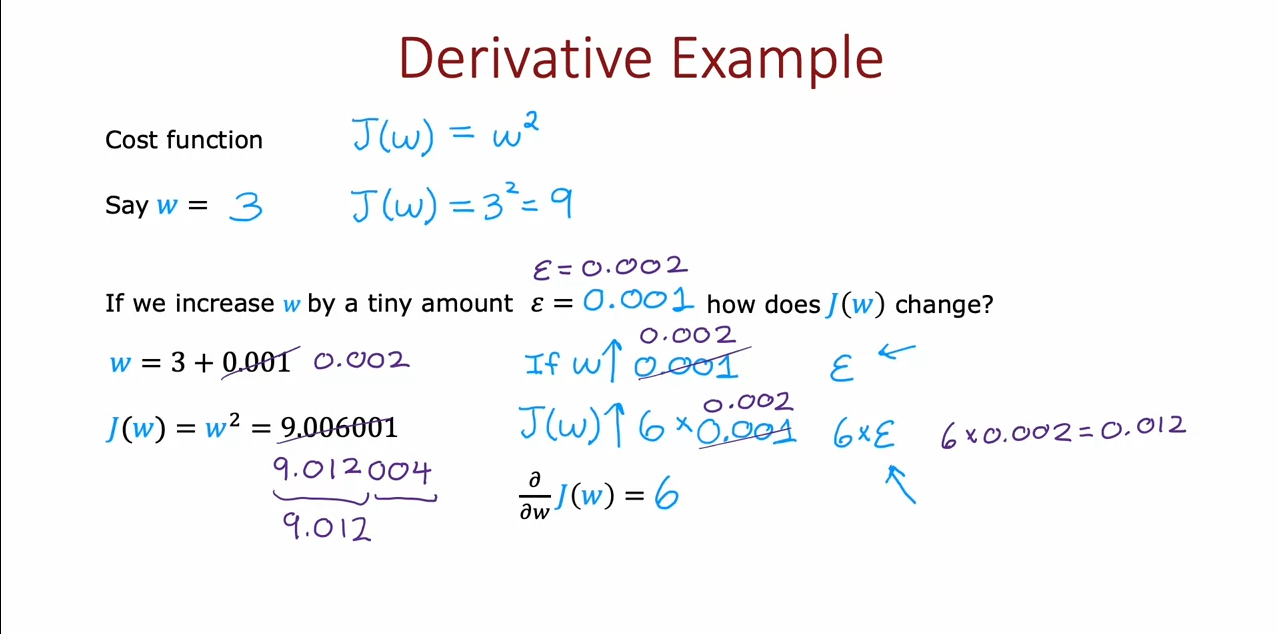

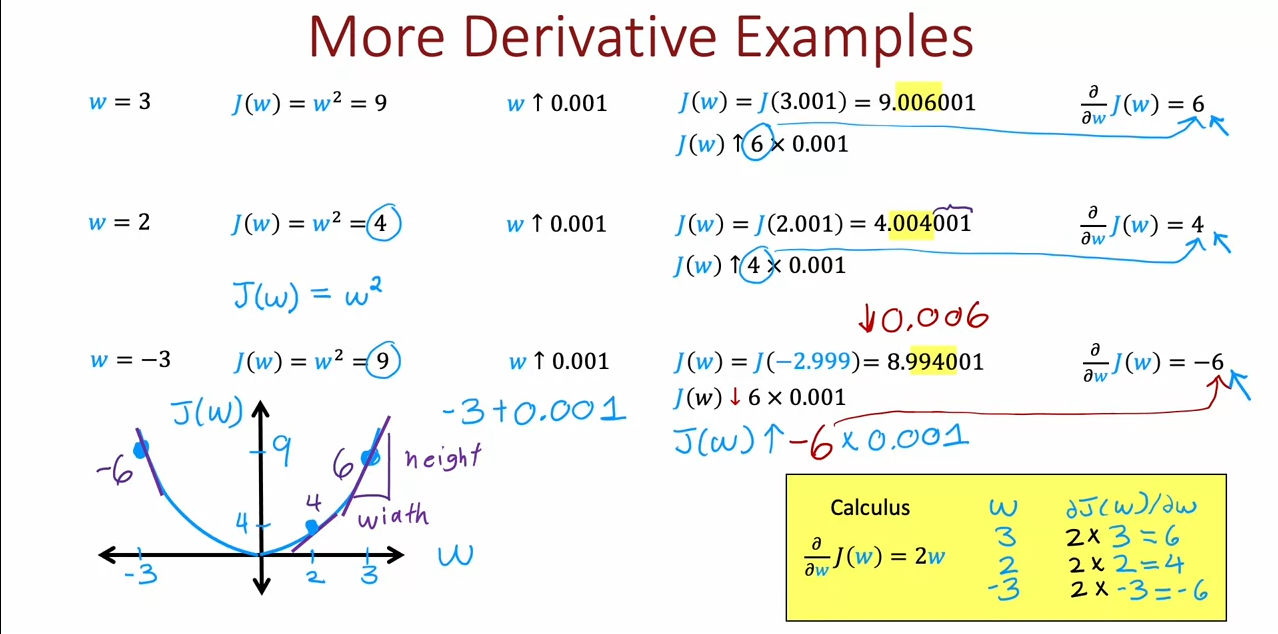

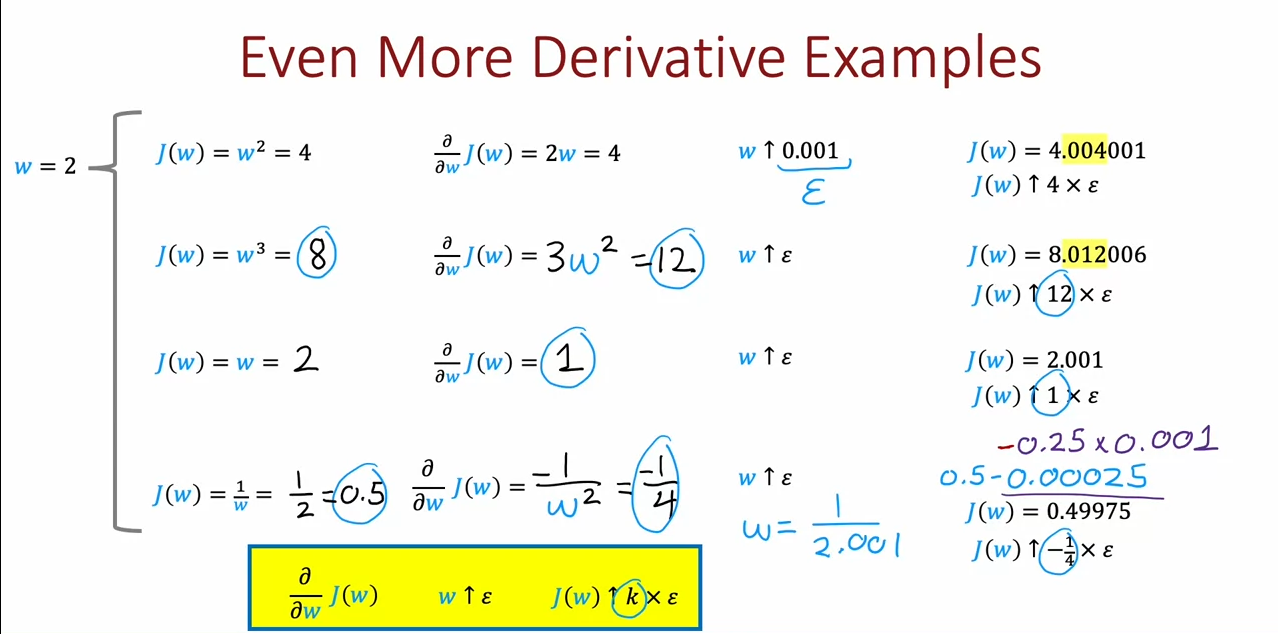

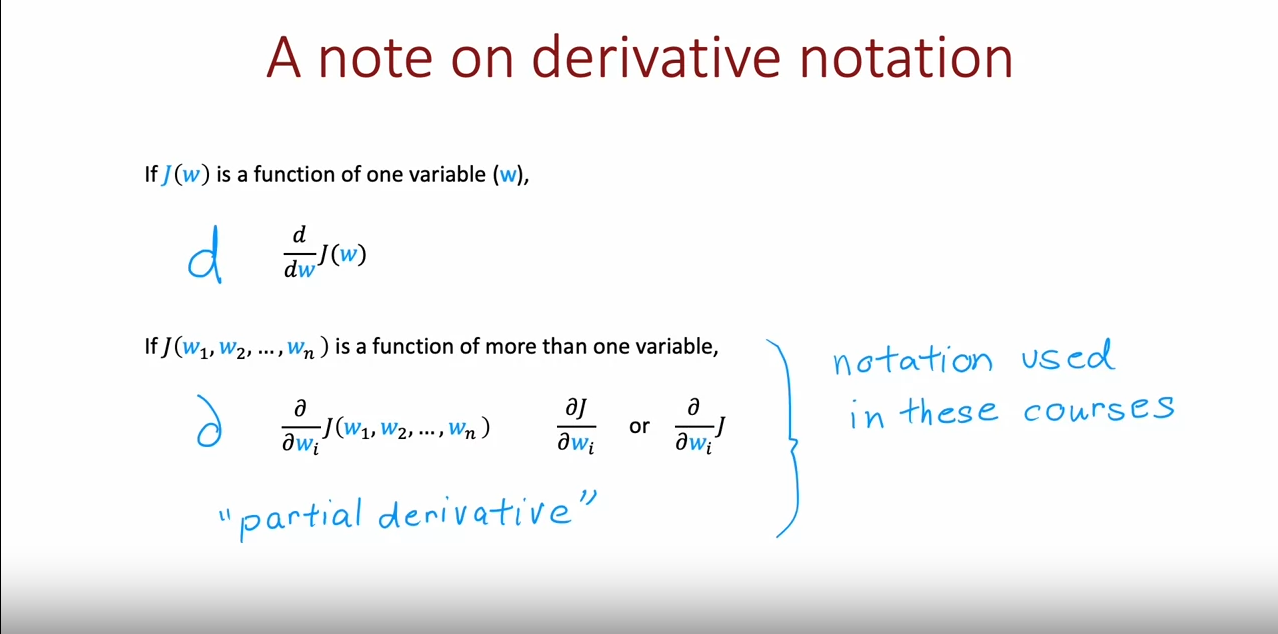

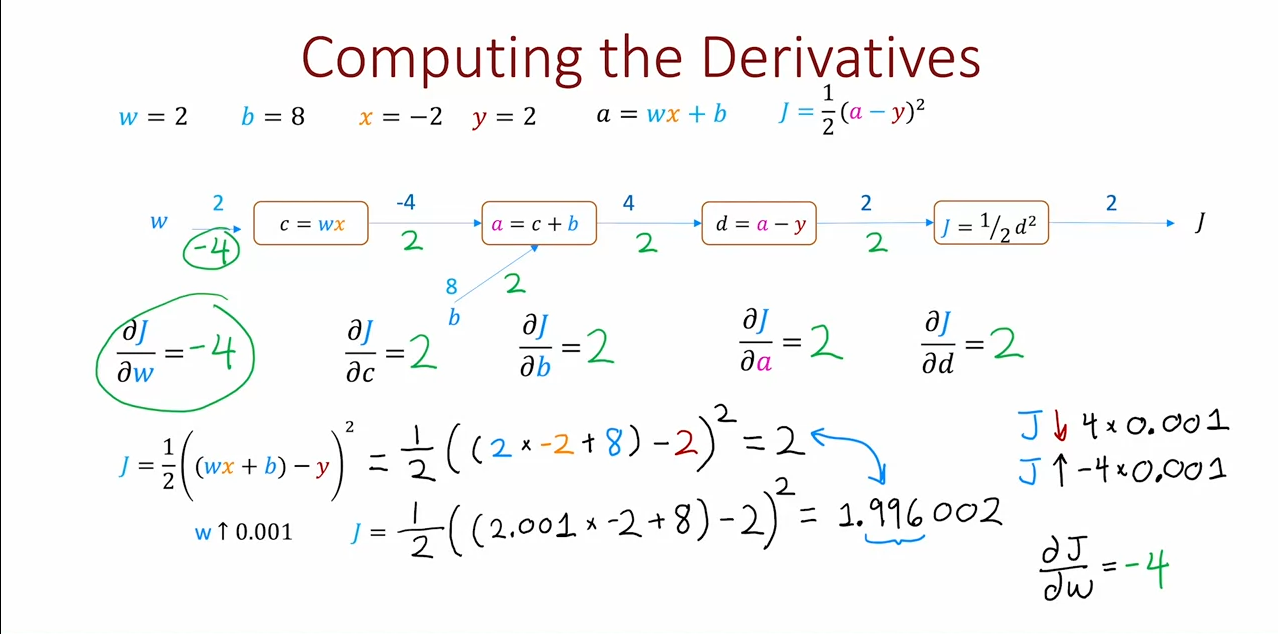

What is a derivative?

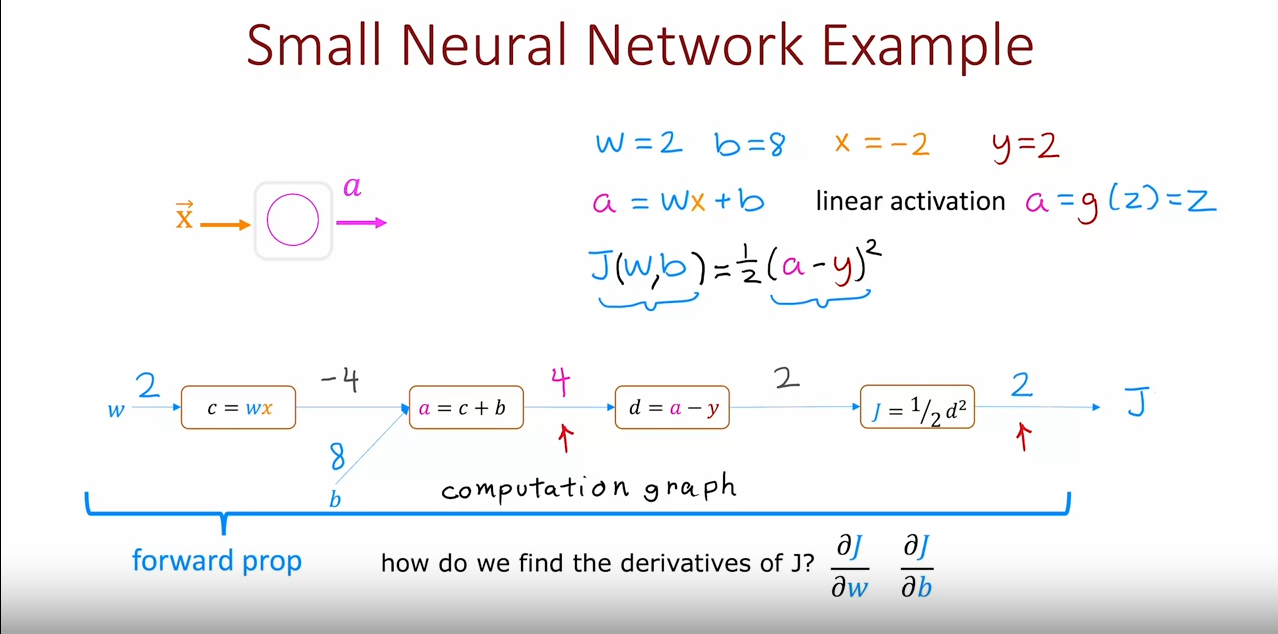

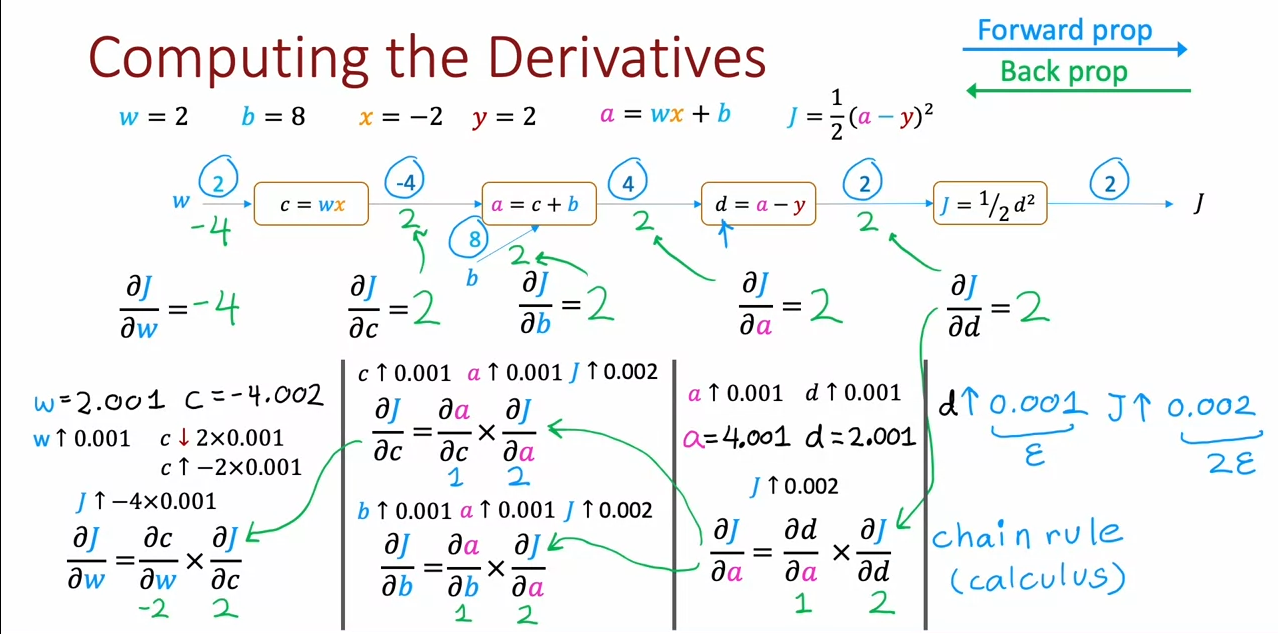
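
The informal definition can be checked numerically: nudge w by a small eps and see how much J changes. The sketch assumes a hypothetical cost J(w) = w^2, whose exact derivative is 2w.

```python
def numerical_derivative(f, w, eps=1e-3):
    """Estimate dJ/dw as (change in J) / (change in w) for a small eps."""
    return (f(w + eps) - f(w)) / eps

def J(w):
    return w ** 2

est = numerical_derivative(J, 3.0)
print(est)  # close to the exact derivative 2 * 3 = 6
```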

Computation graph
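
A small sketch of a computation graph for one linear unit with squared-error cost. The forward pass computes each node left to right; the backward pass applies the chain rule right to left. All values and node names are illustrative, not taken from the notes.

```python
# Illustrative inputs and parameters (assumed values).
w, b, x, y = 2.0, 8.0, -2.0, 2.0

# Forward pass: one node per elementary operation.
c = w * x         # c = -4
a = c + b         # a = 4 (the prediction)
d = a - y         # d = 2 (the error)
J = 0.5 * d ** 2  # J = 2 (the cost)

# Backward pass: chain rule from J back to the parameters.
dJ_dd = d            # derivative of d^2 / 2 with respect to d
dJ_da = dJ_dd * 1.0  # d = a - y
dJ_dc = dJ_da * 1.0  # a = c + b
dJ_db = dJ_da * 1.0
dJ_dw = dJ_dc * x    # c = w * x

print(J, dJ_dw, dJ_db)  # 2.0 -4.0 2.0
```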

Larger neural network example